In the last blog post, we discussed the challenges of managing APIs at scale in a Kubernetes environment, and how deploying a Kubernetes Ingress Controller or an API gateway can help you address them.

In this post, we briefly look at the similarities and differences between an API gateway and Kubernetes Ingress. We also discuss Kong's approach to end-to-end API lifecycle management (APIM) in Kubernetes.

API Gateway and Kubernetes Ingress Controller: Common Capabilities

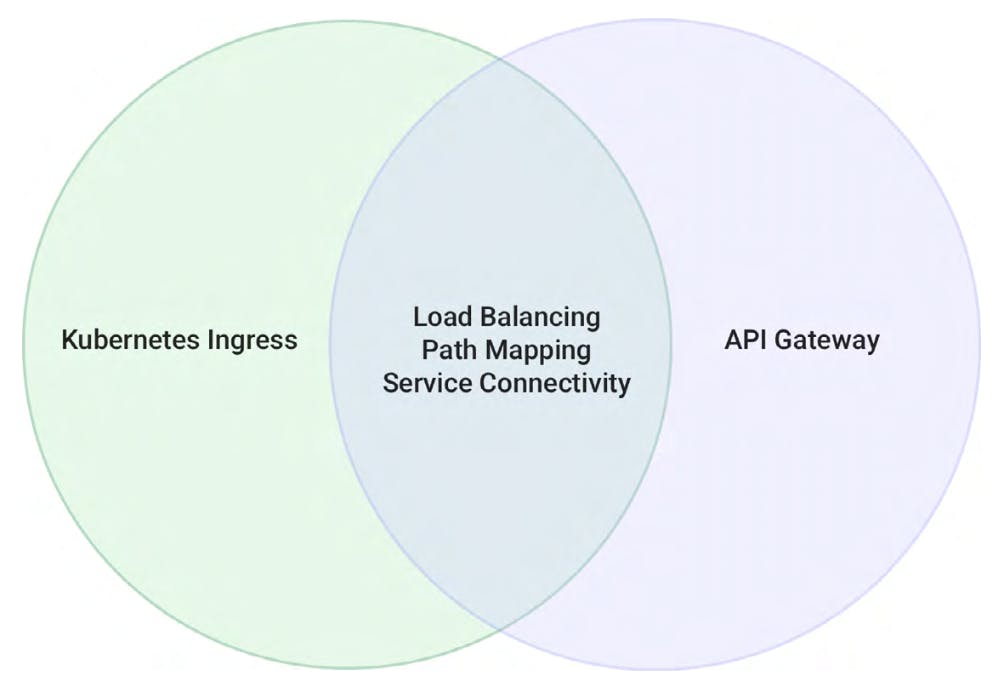

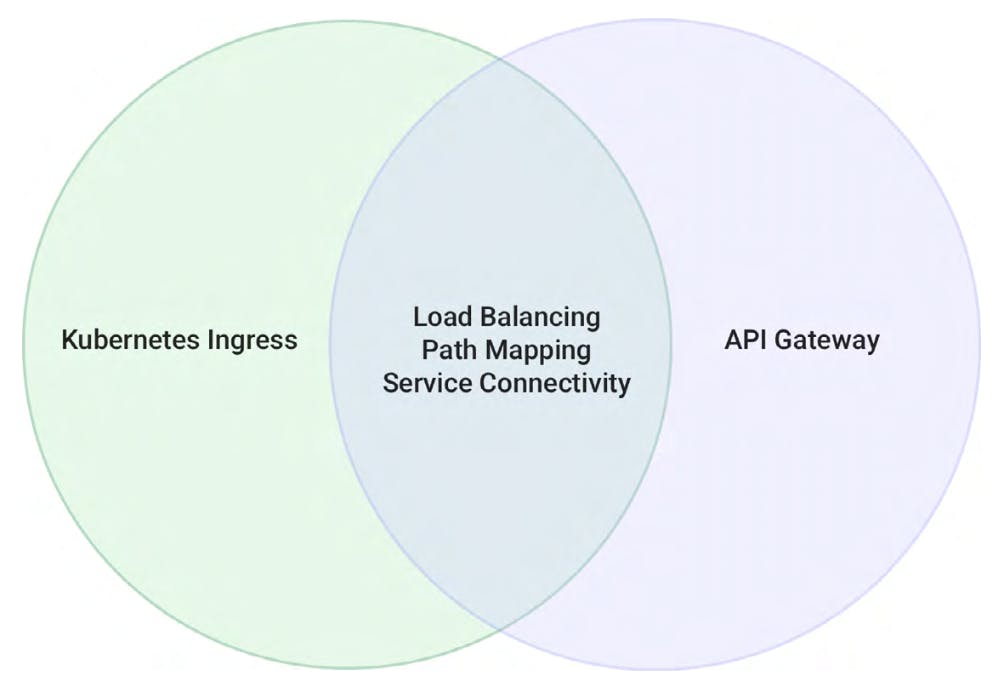

A microservices application needs to be both scalable and performant to qualify as production-ready. Take the example of an on-demand food delivery application. It not only has to handle a large number of orders around mealtimes, but also has to process those requests efficiently to deliver a delightful customer experience. Load balancing and traffic routing are critical for managing this volume of API requests efficiently, and this is where an API gateway or a Kubernetes Ingress Controller comes in. The major capabilities these technologies share are:

- Load balancing to distribute incoming requests across service instances

- Path mapping to direct each request to the desired destination

- Service connectivity to ensure that each request reaches the right service and that the information it needs is gathered and returned efficiently

Diagram 1: Overlap between Kubernetes Ingress and API Gateway
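To make load balancing and path mapping concrete, here is a minimal Python sketch of what both an ingress controller and an API gateway do at their core: pick a backend by the most specific matching path prefix, then spread requests across that service's instances round-robin. The `Router` class, `prefix_matches` helper, paths and backend addresses are all hypothetical, chosen to fit the food delivery example; production proxies typically add health checks, retries and weighted balancing on top of this.

```python
from itertools import cycle


def prefix_matches(prefix: str, path: str) -> bool:
    """Element-wise prefix match: "/orders" matches "/orders" and "/orders/42",
    but not "/ordersfeed" (similar in spirit to Ingress `pathType: Prefix`)."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


class Router:
    """Hypothetical router: path mapping plus per-service round-robin load balancing."""

    def __init__(self, routes: dict[str, list[str]]):
        # Check longer prefixes first so the most specific route wins.
        self._prefixes = sorted(routes, key=len, reverse=True)
        # One endless round-robin iterator per backend pool.
        self._pools = {prefix: cycle(backends) for prefix, backends in routes.items()}

    def route(self, path: str) -> str:
        for prefix in self._prefixes:
            if prefix_matches(prefix, path):
                return next(self._pools[prefix])
        raise LookupError(f"no route for {path}")


# Hypothetical services for the food delivery example.
router = Router({
    "/orders": ["orders-1:8080", "orders-2:8080"],
    "/menu": ["menu-1:8080"],
    "/": ["web-1:8080"],
})
print(router.route("/orders/42"))  # orders-1:8080
print(router.route("/orders/43"))  # orders-2:8080
print(router.route("/menu"))       # menu-1:8080
print(router.route("/about"))      # web-1:8080
```

Matching path elements rather than raw string prefixes keeps a route like `/orders` from accidentally capturing an unrelated path such as `/ordersfeed`.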

API Gateway and Kubernetes Ingress Controller: Differences

A microservices application exposes data and resources via APIs, which makes end-to-end APIM a required capability. Load balancing and traffic routing are essential, but they are basic features that both Kubernetes Ingress Controllers and API gateways provide. APIM takes a more holistic approach that also covers API development, documentation and testing. In production, APIs must also be secured against vulnerabilities and proactively monitored for anomalies. Lastly, when APIs are offered as a product, a developer portal is needed to onboard developers, register applications and manage credentials, making the APIs easier to consume.

Comparing the two, a Kubernetes Ingress Controller provides only load balancing and traffic routing. An API gateway, on the other hand, not only simplifies API traffic management but also integrates with full lifecycle APIM, enabling teams to create, publish, manage, secure and analyze their portfolio of APIs.

Download this eBook to learn more about the differences between an API gateway and Kubernetes Ingress.

Kong for Kubernetes

Kong, a Leader in the 2021 Gartner Magic Quadrant for full lifecycle API management, offers a Kubernetes-native solution for microservices, the Kong Ingress Controller (KIC), which combines the benefits of the Kong API gateway and a Kubernetes Ingress Controller. Before discussing the benefits of KIC, let's look at what it means to be Kubernetes-native.

Kubernetes-native tools and technologies are designed to integrate seamlessly with Kubernetes and be interoperable with native Kubernetes tools, such as kubectl. There is a great article on a Kubernetes-native future that defines Kubernetes-native as: "It can extend the functionality of a Kubernetes cluster by adding new custom APIs and controllers, or by providing infrastructure plugins for the core components of networking, storage, and container runtime. Kubernetes-native technologies can be configured and managed with kubectl commands, can be installed on the cluster with the Kubernetes's popular package manager Helm, and can be seamlessly integrated with Kubernetes features such as RBAC, Service accounts, Audit logs, etc."
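The definition above mentions custom APIs and controllers. At the heart of a Kubernetes controller is a reconcile loop: compare the desired state declared through the API with the observed state, then act to close the gap. The minimal Python sketch below shows a single reconcile pass against a hypothetical in-memory route table; `Proxy`, `reconcile` and the route data are illustrative assumptions, not how KIC is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Proxy:
    """Hypothetical stand-in for a data plane's route table (path -> service)."""
    routes: dict[str, str] = field(default_factory=dict)


def reconcile(desired: dict[str, str], proxy: Proxy) -> list[tuple[str, str, str]]:
    """One controller-style reconcile pass; returns the actions it took."""
    actions = []
    # Create or update routes that are missing or differ from the declared state.
    for path, service in sorted(desired.items()):
        current = proxy.routes.get(path)
        if current != service:
            actions.append(("create" if current is None else "update", path, service))
            proxy.routes[path] = service
    # Delete routes the proxy still has but that are no longer declared.
    for path in sorted(set(proxy.routes) - set(desired)):
        actions.append(("delete", path, proxy.routes.pop(path)))
    return actions


proxy = Proxy(routes={"/menu": "menu-v1", "/legacy": "old-svc"})
desired = {"/menu": "menu-v2", "/orders": "orders"}
print(reconcile(desired, proxy))
# [('update', '/menu', 'menu-v2'), ('create', '/orders', 'orders'), ('delete', '/legacy', 'old-svc')]
print(reconcile(desired, proxy))  # [] because the proxy already matches the declared state
```

Because a second pass over an already-converged state does nothing, declarative configuration can be re-applied safely: the controller only acts on differences.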

Organizations adopting Kubernetes need an enterprise-grade ingress solution to enable native management, security and monitoring of traffic entering their Kubernetes clusters. KIC is a leading Kubernetes-native ingress solution that provides end-to-end APIM, robust security, ultra-high performance and 24×7 support. The Kong Ingress Controller provides enterprises the following benefits:

- Natively Manage APIs in Kubernetes: Manage your ingress through kubectl and CustomResourceDefinitions (CRDs) backed by an Operator that automatically reconciles the state of your ingress.

- Designed for Automation: Enable APIOps through declarative configuration so that software delivery stays fast and uninterrupted.

- Control Access Across Clusters: Leverage a Kubernetes namespace-based RBAC model to ensure consistent access controls without adding overhead.

- Plug Into the CNCF Ecosystem: Instantly integrate with CNCF projects such as Prometheus and Jaeger.

- Enhanced API Management Using Plugins: Use a wide array of plugins, or write a custom one, to monitor, transform and protect your traffic.
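To illustrate how a chain of plugins can monitor, transform and protect traffic, here is a minimal Python sketch of the general pattern: each plugin hooks into the request path in order and either lets the request continue or short-circuits with its own response. The class names, the `on_request` hook, the fixed-window rate limiter and the echo upstream are hypothetical illustrations, not Kong's plugin development kit.

```python
import time


class CountRequests:
    """Monitor: count requests per path."""

    def __init__(self):
        self.hits = {}

    def on_request(self, req):
        self.hits[req["path"]] = self.hits.get(req["path"], 0) + 1
        return None  # never blocks the request


class RateLimit:
    """Protect: allow at most `limit` requests per client per fixed `window` (seconds)."""

    def __init__(self, limit, window=60.0, clock=time.monotonic):
        self.limit, self.window, self.clock = limit, window, clock
        self.counts = {}  # client -> (window_start, count)

    def on_request(self, req):
        now = self.clock()
        start, count = self.counts.get(req["client"], (now, 0))
        if now - start >= self.window:  # window expired: start a new one
            start, count = now, 0
        if count >= self.limit:
            return {"status": 429, "body": "rate limit exceeded"}
        self.counts[req["client"]] = (start, count + 1)
        return None


class AddHeader:
    """Transform: set a header before the request is proxied upstream."""

    def __init__(self, name, value):
        self.name, self.value = name, value

    def on_request(self, req):
        req.setdefault("headers", {})[self.name] = self.value
        return None


def handle(req, plugins, upstream):
    """Run plugins in order; the first one that returns a response short-circuits."""
    for plugin in plugins:
        resp = plugin.on_request(req)
        if resp is not None:
            return resp
    return upstream(req)


def echo_upstream(req):
    # Hypothetical upstream service that echoes back the headers it received.
    return {"status": 200, "headers": req.get("headers", {})}


metrics = CountRequests()
plugins = [metrics, RateLimit(limit=2), AddHeader("X-Gateway", "demo")]
for _ in range(3):
    print(handle({"client": "app-1", "path": "/orders"}, plugins, echo_upstream)["status"])
# prints 200, 200, then 429
print(metrics.hits)  # {'/orders': 3}
```

Ordering matters in this sketch: the counter runs first, so rejected requests are still counted, while the header is only added to requests that pass the rate limit. A custom plugin is simply another object with an `on_request` hook.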

To learn more about the Kong Ingress Controller, please refer to our documentation.

Enroll in this Kong learning lab for hands-on, step-by-step instructions on getting started with Kong for Kubernetes and its ingress controller.