This article was written by Jeremy Justus and Ross Sbriscia, senior software engineers from UnitedHealth Group/Optum. This blog post is part two of a three-part series on how we've scaled our API management with Kong Gateway, the world's most popular open source API gateway. (Here’s part one and part three.)

We understand that this post's title may seem a little controversial. When we announced that our new API gateway solution might be an open source product, we got many questions from people across the company. The questions were all variations on one theme:

How can a large enterprise have confidence in an open source API gateway used for mission-critical applications?

To answer this question, we focused on three ideas to prove the power of open source platforms.

1. Cultivate Internal Experts

To understand the value of internal experts, you must first think about how innovation happens. Innovation in software is the result of expertise and inspiration. Inspiration is a bit tricky to pin down, so let’s focus on where expertise comes from in the closed source and open source models.

Closed Source Model

In a closed source model, you get "rented" experts who respond to specific requests. Rented experts can only react to your problems; their companies usually don't give them the time or mandate to innovate on your behalf.

Closed source product experts are not familiar with your environment, integrations or customizations. This lack of familiarity hurts in two ways. First, it hampers innovation. Second, it slows down live troubleshooting, because the experts need to get familiar with your setup before they can be useful.

Because closed source products are opaque, your team may have only a limited understanding of how they work, which can make it difficult to build effective and efficient integrations.

Open Source Model

In an open source model, you cultivate "internal" experts. These experts can use their skills to benefit the community, the solution itself and its integrations. Internal experts understand the specifics of the environment in which they work, and the product itself is entirely visible to them.

So how do we go about cultivating these internal experts? That's where Kong community participation comes in.

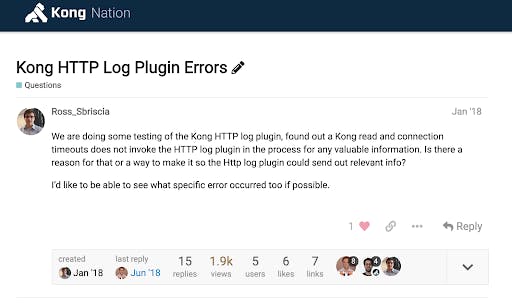

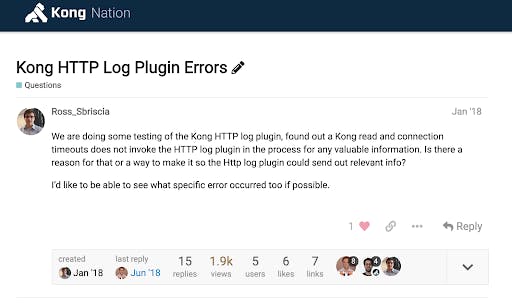

Our first interaction with Kong is an excellent example of how an open source community can cultivate internal experts to benefit the entire space. One of our internal customers was having issues with the gateway. They were receiving HTTP 502 errors during their testing, but, oddly, they couldn't find logs for those transactions.

When our team took a look, we found these failures in our logs, but only in the standard output (stdout) logs. We were seeing a transport-layer failure: specifically, a connection reset when Kong tried to route to the customer's upstream API service. The customer took this information and investigated their deployment further.

Still, we were a little concerned. We wanted to have logs of these failures, so we posted on Kong Nation, Kong's community forum. Since we had never worked with an open source community before, we weren’t sure what to expect. We didn't know whether we would get a response in a couple of weeks or a couple of months. And if we did get a response, we weren't sure it would be intelligible or helpful at all.

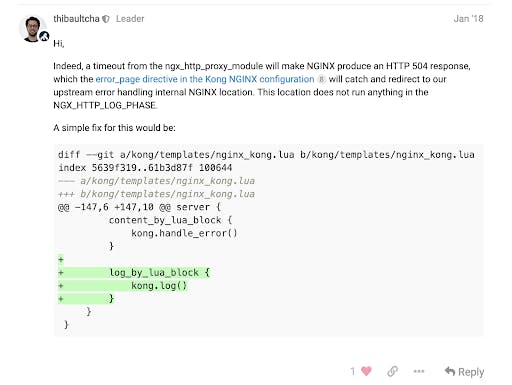

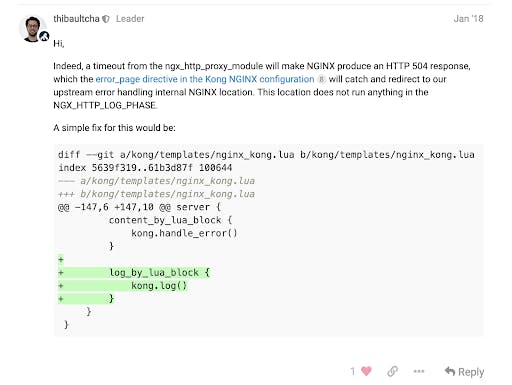

We were pleasantly surprised when one of Kong's principal engineers responded with a suggested code snippet on the same day.

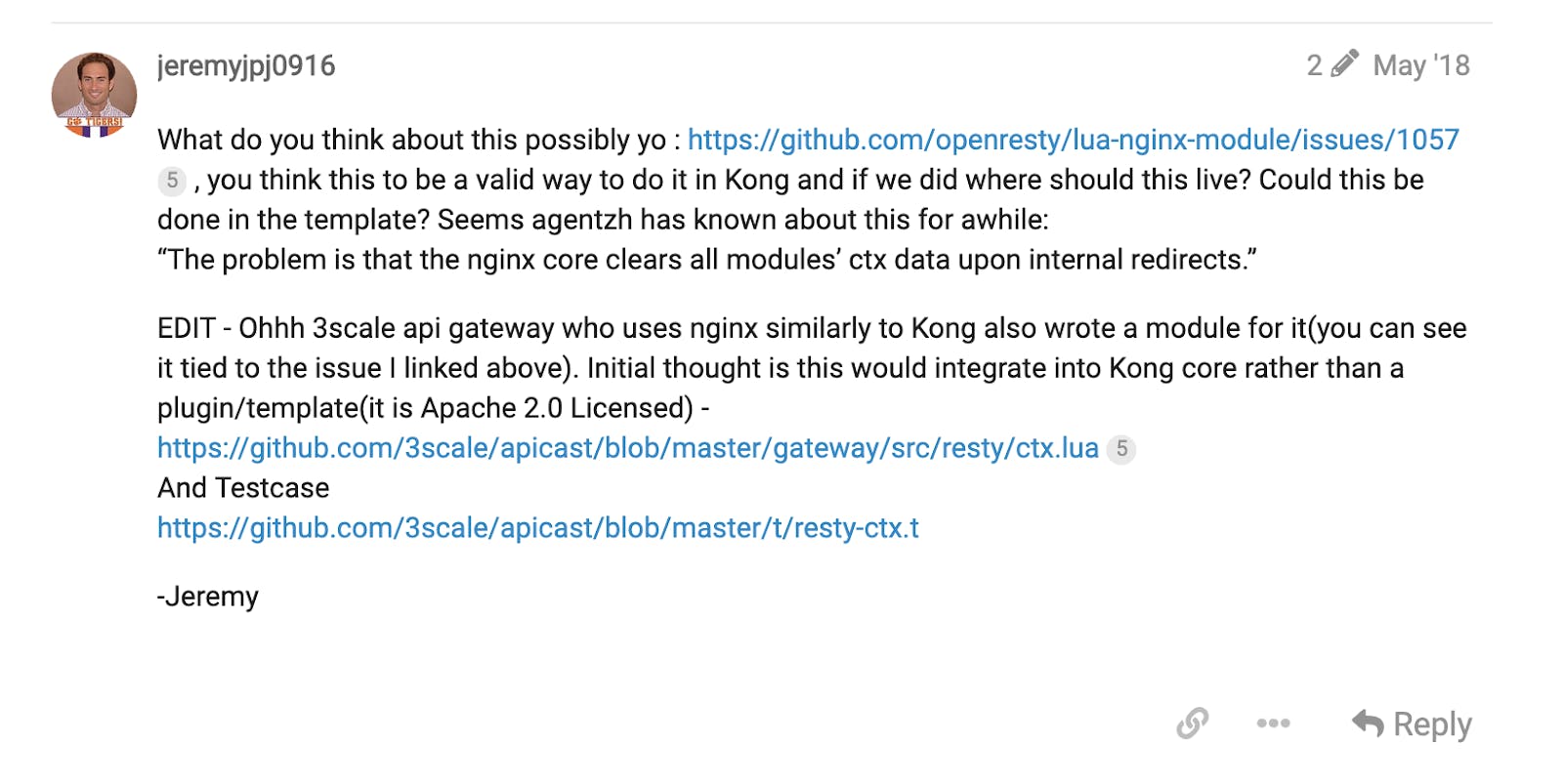

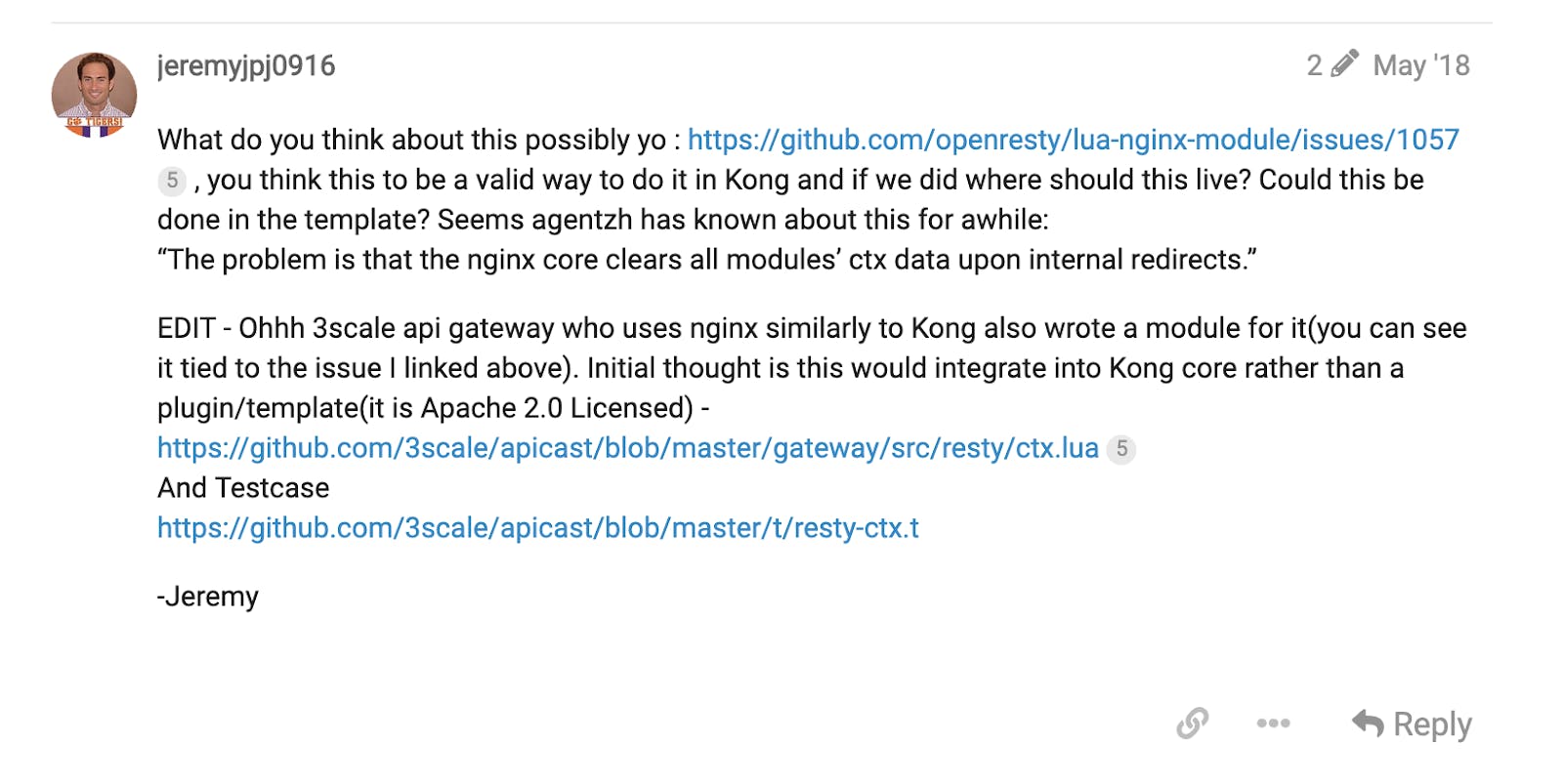

We tried out his suggestion, but it didn’t work. Undaunted, we made another post on Kong Nation, describing what we had tried in as much detail as possible and including all our sources. The community responded again, and their response gave us a better idea of what to investigate. We found something, so we made another post.

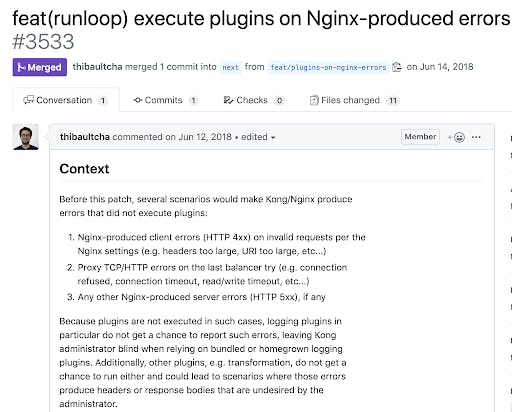

Another Kong engineer replied that they would work on a solution that incorporated our suggestion.

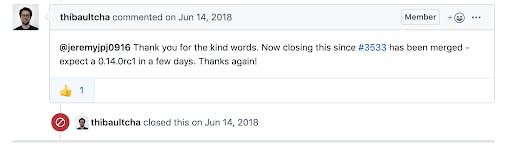

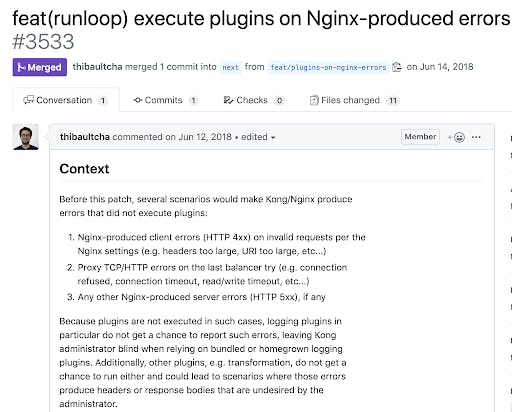

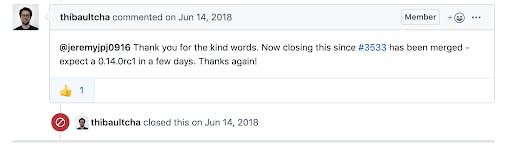

Not long after that, Kong opened and merged a PR containing a fix that adopted our suggestion. Kong told us to expect it in an upcoming release candidate within a couple of days. We were thrilled.

Why did our first investigation of the problem yield such a different result from the second? What changed? It turns out that what changed, at least for us, was how we framed the problem. It went from a closed source mindset ("there’s something wrong with this Kong thing") to a specific diagnosis ("when requests go through these internal redirects, we’re losing NGINX context").

We likely wouldn’t have cultivated much expertise if this had happened with a closed source vendor.

The next thing we realized was that, to get the most out of open source products, we needed to contribute back to the community. Let’s look at a scenario that led us to contribute to Kong's core.

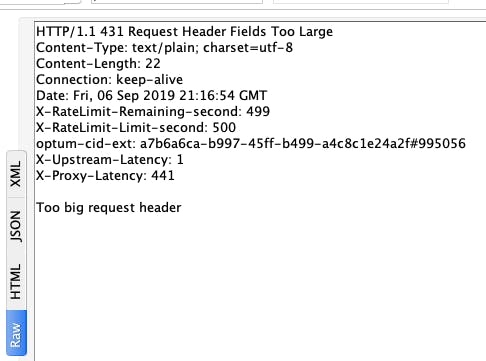

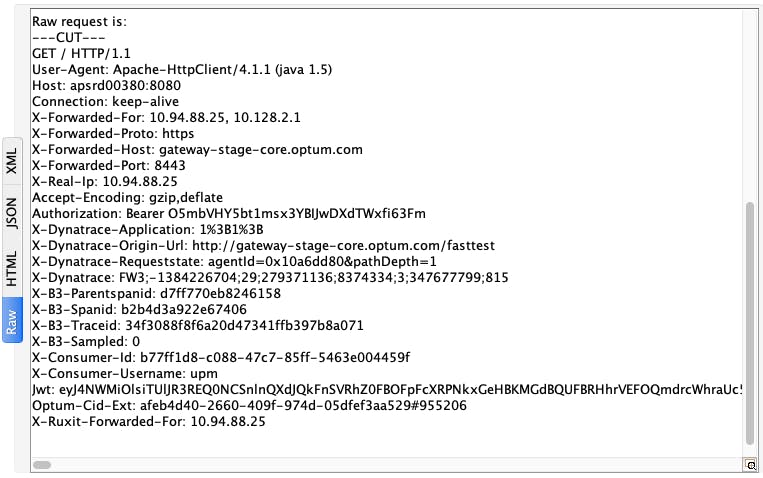

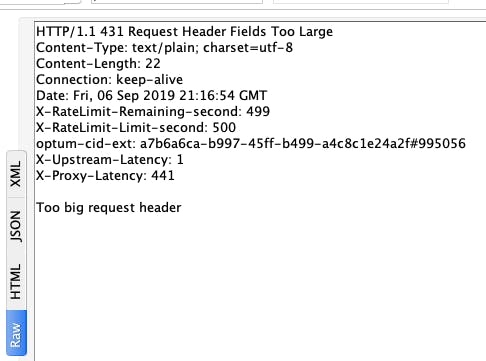

A customer reached out with an issue: “Something’s down today. I'm making API calls, and I’m seeing HTTP 431s. I tested my API directly, and it’s working fine. What’s going on?”

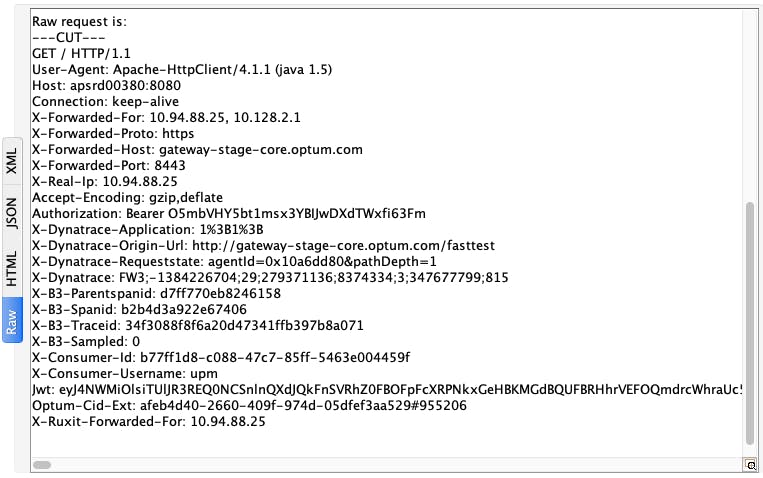

We looked into it and realized that an HTTP 431 (Request Header Fields Too Large) meant the request headers reaching the customer's backend web server were too big: the server was hitting its header buffer size limit and rejecting the transaction. With more digging, we found that Kong's ACL plugin, by default, adds a header called X-Consumer-Groups to the upstream request, listing the authenticated consumer's groups. In our architecture, there's a route resource for every Kong API proxy we have, and each route resource has a UUID. We use those UUIDs as ACL groups to control which proxies each customer can access. As a large enterprise, we have a lot of proxies (hundreds or thousands), which explains how that header could fill up with hundreds or thousands of UUIDs.
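
To see why hundreds of route UUIDs break things, here is a back-of-the-envelope calculation in Python. It assumes the groups are joined with ", " and that the upstream enforces an 8 KB limit per header line (8 KB is the buffer size in NGINX's default `large_client_header_buffers 4 8k`, but your backend's actual limit may differ). The helper names are illustrative only.

```python
"""Back-of-the-envelope check: how many UUID-named ACL groups fit in one header?"""
import uuid
from typing import List

HEADER_NAME = "X-Consumer-Groups"
SEPARATOR = ", "                   # assumption: groups joined with a comma and a space
ASSUMED_LIMIT = 8 * 1024           # assumption: 8 KB per header line on the upstream
UUID_LEN = len(str(uuid.uuid4()))  # canonical UUID strings are 36 characters


def header_line(groups: List[str]) -> str:
    """Build the header line forwarded upstream for the given groups."""
    return f"{HEADER_NAME}: {SEPARATOR.join(groups)}"


def header_length(n_groups: int) -> int:
    """Length of the header line for n UUID groups, computed without building it."""
    if n_groups == 0:
        return len(HEADER_NAME) + 2  # just the name plus ": "
    return len(HEADER_NAME) + 2 + n_groups * UUID_LEN + (n_groups - 1) * len(SEPARATOR)


def max_groups(limit: int = ASSUMED_LIMIT) -> int:
    """Largest number of UUID groups whose header line stays within `limit`."""
    n = 0
    while header_length(n + 1) <= limit:
        n += 1
    return n


# Sanity check: the formula matches a header built from real UUIDs.
sample = [str(uuid.uuid4()) for _ in range(300)]
assert len(header_line(sample)) == header_length(300)

print(max_groups())  # 215
for n in (100, 1000):
    print(n, header_length(n), header_length(n) <= ASSUMED_LIMIT)
```

Under these assumptions, a consumer with access to more than about 215 proxies already overflows the header, which is well within reach for an enterprise with hundreds or thousands of routes.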

With this knowledge, we raised a GitHub issue with Kong proposing a way to stop sending this header to the backend. A Kong engineer gave a thumbs up to implementing that change in the ACL plugin.

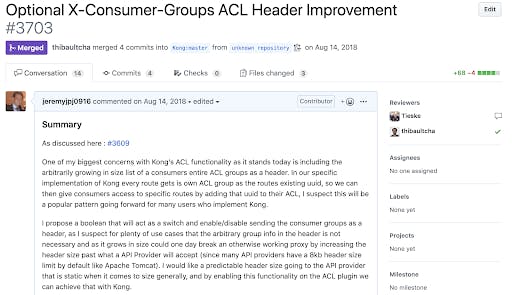

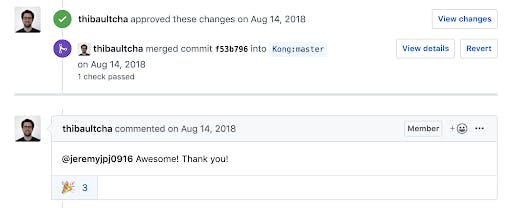

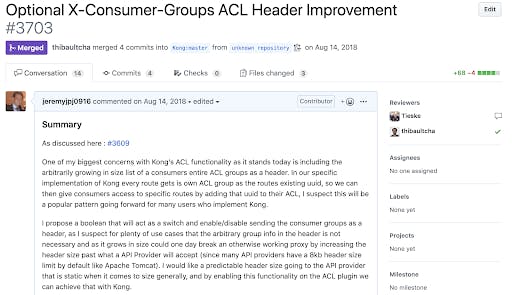

By this point, we understood Kong's codebase well enough that I set out to find where to make that change in the plugin myself.

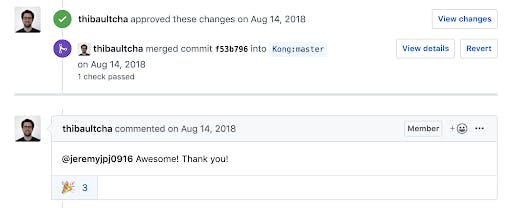

It was pretty cool to commit something back to a codebase that could benefit other people. Even better, because Kong is an open source API gateway, every change that goes in has lots of eyes on it.

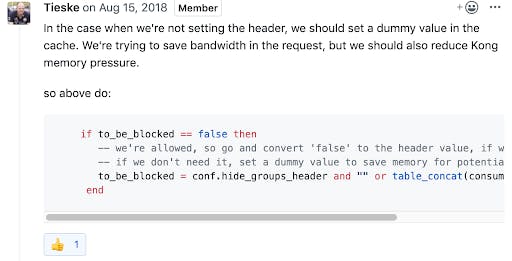

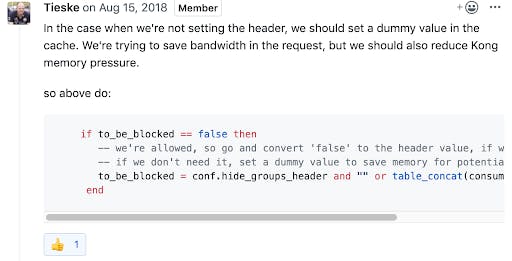

Another member of the Kong team reviewed my work and took it even further. He said, "You stopped the header from passing to the backend. But down in the Lua level, there’s a variable getting set to all those UUIDs. But if we’re enabling your configuration not to set the header to send to the backend, we can send it to an empty stream and save on Lua memory pressure as API traffic is going through Kong." He gave me a small code snippet to test in a follow-up PR, since Kong had already merged my first one.
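
The reviewer's point is about work that still happens even when the header is hidden. Below is a toy model in Python rather than Kong's Lua. The function names, the `ctx` dictionary, and leaving the per-request value empty when the header is hidden are illustrative assumptions, not Kong's actual plugin code.

```python
"""Toy model (Python, not Kong's Lua) of the reviewer's memory suggestion."""
from typing import Dict, List

GROUPS_HEADER = "X-Consumer-Groups"


def apply_groups_naive(hide_header: bool, groups: List[str],
                       headers: Dict[str, str], ctx: Dict[str, str]) -> None:
    """First version: the header is hidden, but the joined value is still built."""
    value = ", ".join(groups)    # allocated on every request
    ctx["groups_value"] = value  # and kept in per-request state
    if not hide_header:
        headers[GROUPS_HEADER] = value


def apply_groups_lean(hide_header: bool, groups: List[str],
                      headers: Dict[str, str], ctx: Dict[str, str]) -> None:
    """Follow-up version: when hidden, skip building the large value entirely."""
    if hide_header:
        ctx["groups_value"] = ""  # assumption: an empty value is enough downstream
        return
    value = ", ".join(groups)
    ctx["groups_value"] = value
    headers[GROUPS_HEADER] = value


groups = [f"route-uuid-{i}" for i in range(1000)]  # stand-ins for route UUIDs
for apply in (apply_groups_naive, apply_groups_lean):
    headers: Dict[str, str] = {}
    ctx: Dict[str, str] = {}
    apply(True, groups, headers, ctx)
    # Neither version sends the header; only the naive one retains a large string.
    print(apply.__name__, headers, len(ctx["groups_value"]))
```

Both versions keep the header away from the backend; the lean one also avoids building and holding a multi-kilobyte string on every proxied request.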

Kong merged both of these PRs on the same day. The turnaround time was phenomenal, and it’s one of the driving reasons open source is gaining such popularity in this space. You rarely see that kind of turnaround with closed source vendors.

The end result? A better Kong Gateway and a happy backend API.

Embrace Open Source for a Healthy Enterprise

Let’s wrap up by coming back to the question with which we started. As a large enterprise, how can we have confidence in an open source API gateway for mission-critical applications?

- Cultivate internal experts to innovate and improve both the solution itself and all of its integrations within your environment.

- Take part in the community to exchange knowledge and expertise in both directions, from you to the community and vice versa. Doing so gives you an avenue for solving problems that you might not yet be comfortable solving on your own.

- Contribute back to the community. There’s no better way to hone your expertise than by getting direct feedback on your work, and the community benefits as well.