This article was written by Jeremy Justus and Ross Sbriscia, senior software engineers from UnitedHealth Group/Optum.

As part of the UnitedHealth Group (UHG), Optum optimizes healthcare technology, and one of our important missions is to provide the tech infrastructure for this Fortune 7 healthcare giant. UHG has over 300,000 employees, thousands of APIs, and countless integrations and external systems. It's safe to say that a lot happens in our environments.

This blog post is part one of a three-part series on how we've scaled our API management with Kong Gateway, the world's most popular open source API gateway. (Here’s part two and part three.)

Our Closed Source API Gateway Was Not Scalable

Before switching to Kong, our primary API gateway was a closed source vendor product that had been our principal solution for almost four years. We noticed several stability, scalability and performance issues with the platform. Innovating around it was complicated in some cases and impossible in others, to the point where we had trouble enabling DevOps. Even some of our internal customers, the API providers within Optum, complained about our API performance. The solution was also very costly, which to us meant the product:

- Was pricey

- Required quite a lot of infrastructure

- Needed a large operations team

We Decided to Seek Out an Open Source API Gateway

As our group was considering replacing the solution, a considerable organizational change was going on at Optum. The company was bringing on more open source solutions. Previously, UHG saw open source as a risk.

Because of this change in mindset, we ended up with selection criteria for our new API gateway solution that sought an open source product instead of a proprietary solution. We were interested in:

- The extensibility that an open source product could provide us

- Eliminating the licensing costs associated with our closed source solution

- A cloud native system that could support a modern CI/CD pipeline

- Decreasing recovery time in incidents

- Achieving better scalability

- A more performant solution

- A more resource-efficient model

Kong Gateway is a high-quality product that keeps DevOps in mind.

We took some key steps to implement Kong into our older, established enterprise.

1. Gain the Approval Within Our Network

In our internal portal, we developed formal documentation of the application and its capabilities. That way, other teams and people within our company could learn how to use Kong Gateway open source.

2. Address Security Requirements

We were explicitly concerned with malicious actors. We already had a web application firewall solution to integrate as part of our ingress controller into the API gateway architecture.

3. Iterate With Policymakers on a New Flow

We could cut gateway hops in half with just one gateway hop between clients and our services, thus improving latency out of the box.

4. Establish Central API Gateway Patterns

We wanted a single OAuth token generation endpoint that all clients could leverage via Kong for authentication. Our previous gateway solutions all leveraged a single client token generation endpoint, which was better for documentation and customer authentication.
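To make the single-endpoint pattern concrete, here is a minimal Python sketch of what a client's token request could look like, assuming the standard OAuth 2.0 client-credentials grant with HTTP Basic client authentication. The URL, the function name and the choice of grant type are illustrative assumptions; the article does not say which grant or endpoint path Optum uses.

```python
import base64
import urllib.parse
import urllib.request

# Hypothetical endpoint: the real token URL is not given in the article.
TOKEN_URL = "https://gateway.example.com/oauth2/token"


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    # The grant type travels in a form-encoded body.
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    # The client authenticates with HTTP Basic: base64("id:secret").
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
```

Because every client builds the same request against the same URL, one page of documentation can cover authentication for all consumers.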

We worked with the Kong config template to tweak the buffer sizes and improve TCP and socket connection management. After that, we wanted to make sure that we would offer this service to UHG's APIs.

6. Ensure Reliability

We needed high availability and disaster recovery baked into our architecture. Early on, we had about three rudimentary alerting, telemetry and monitoring solutions for the API gateway. Now, we’re integrated with about 10 logging and alerting solutions. As a result, we have unparalleled visibility into the gateway at runtime.

We Built Our Internal Customer Ecosystem on Top of Kong

Once we incorporated Kong’s API gateway in production, we began to modernize our operations on top of it. At that point, we had no ecosystem around the gateway we were offering internal customers.

Start With Documentation

We needed to offer this gateway service internally as a product to our customers. That meant taking a docs-first approach. Our docs have full details on how to leverage the gateway, which frees up developer time to focus on the code. They enable customers to integrate with our application and build their own developer culture around our product. Customers can review the docs and then innovate around what we’re offering.

Improve Customer Support Ops

At first, we had an email distribution list that we would use to work with customers. When we had four to five customers, it was all right to communicate through email to create proxies, authorize consumers and manage ad-hoc requests. As we got more and more consumers, that became quite the nightmare. People were responding to the wrong email chains and responding to the same email at the same time. It just didn’t work out very well.

We then iterated to a first-in, first-out work ticket queue, but customers had hardly any visibility into the progress of their tickets. The underlying problem was that one human was making the request, and another human was trying to process it.

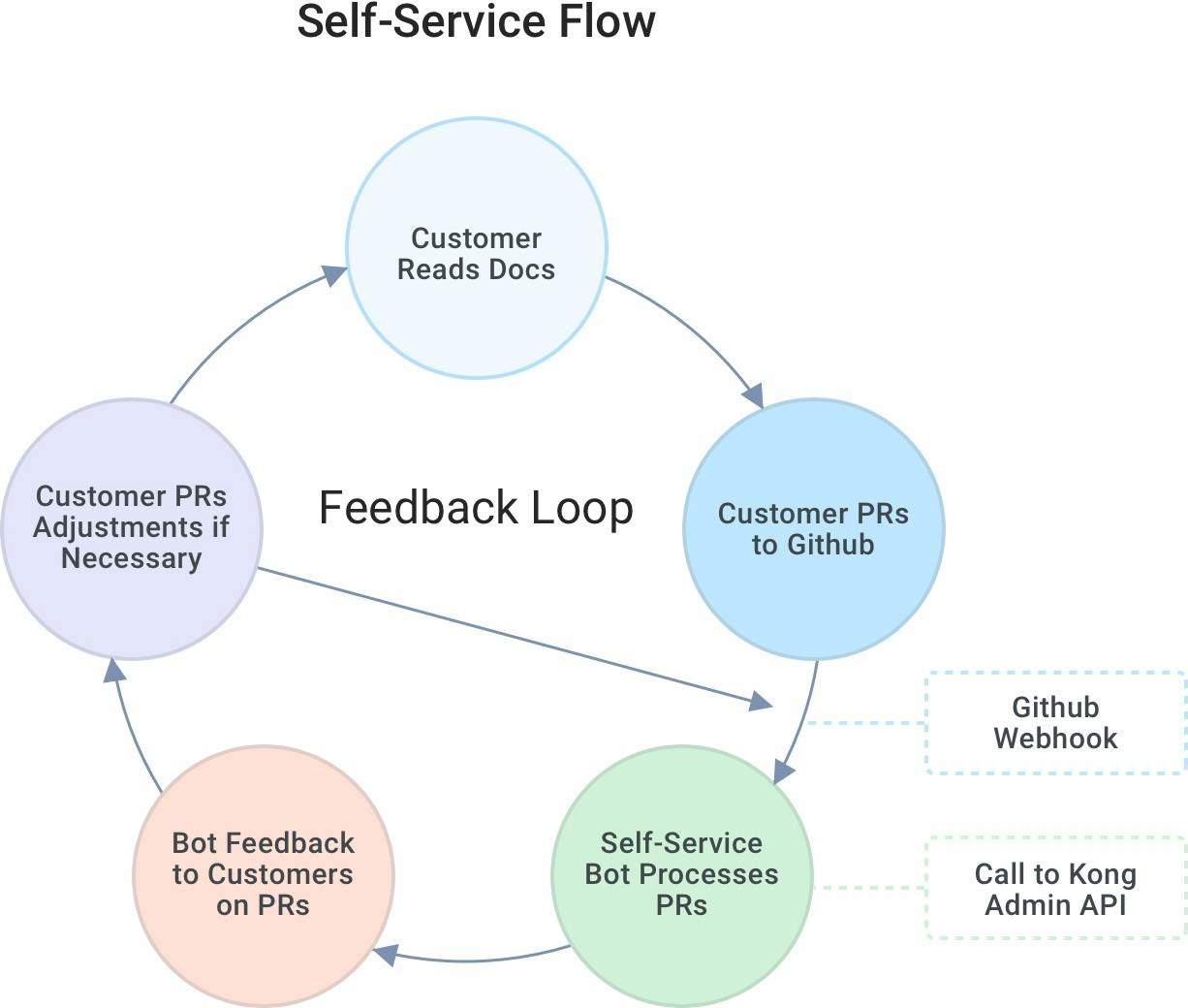

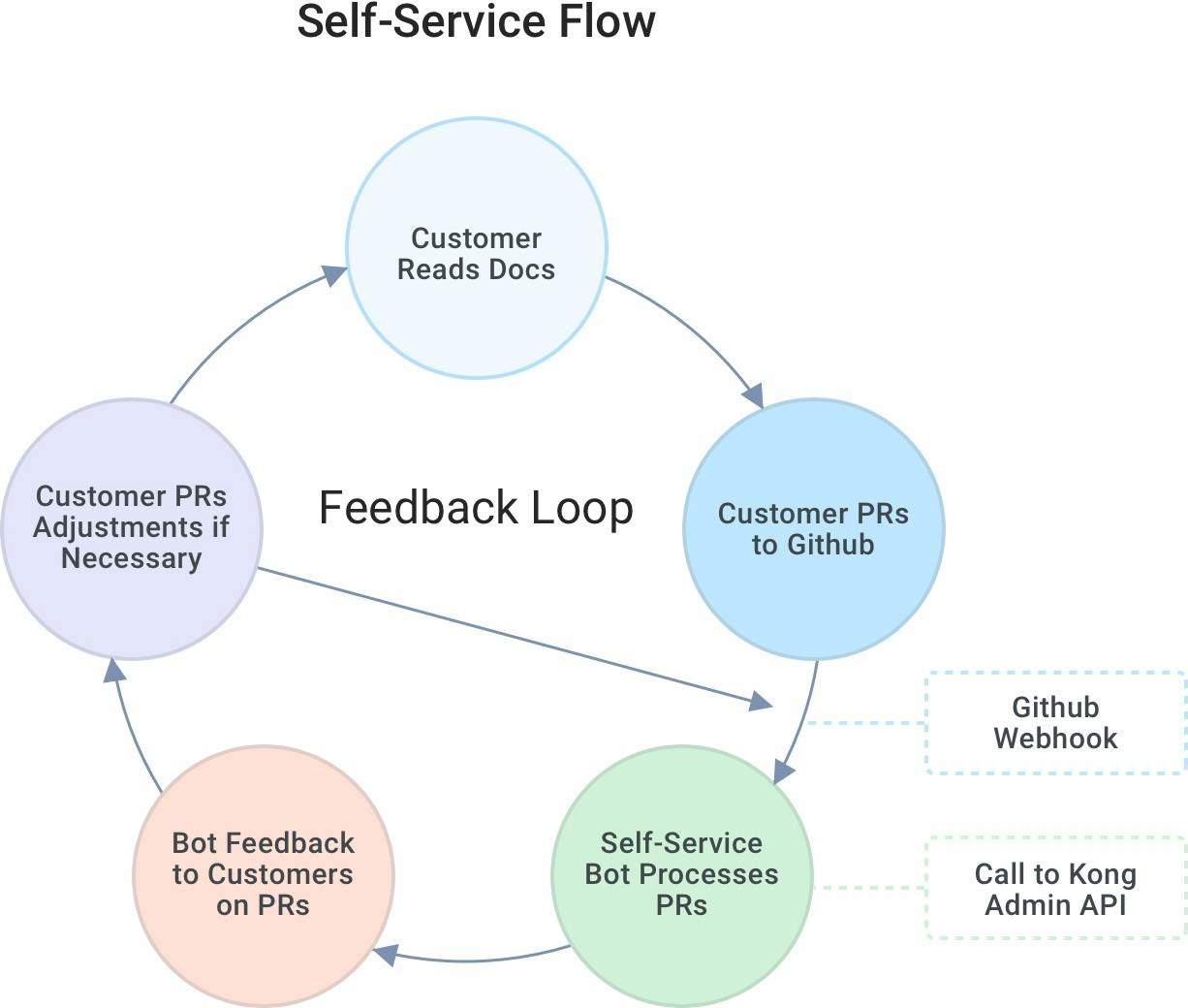

After one more iteration, we ended up with today's solution: a GitHub self-service model. With this solution, customers can commit PRs into a GitHub repo. The solution kicks off an intelligent agent via a webhook. We then process all those resources against the Kong Admin API to create what the customer needs.

Customers still need to read the docs to understand how to leverage the gateway on their own. After that, they make a PR to GitHub, which causes a GitHub webhook to kick off our agent to review the submitted resources. If the resources are valid under our taxonomy and governance program, the agent calls the Kong Admin API to produce the proxies and consumers the customer wants to create. Then it comments on the PR. If the PR merged successfully, it says so and posts the resources to prove it. If there are problems, it comments on the PR with those as well. This provides a charming little feedback loop for customers: they can continuously improve their request until it’s perfect and gets merged and processed without our team lifting a finger.
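The webhook-to-comment loop can be sketched in a few lines of Python. This is a simplified illustration, not Optum's agent: it checks GitHub's `X-Hub-Signature-256` header (an HMAC-SHA256 of the raw request body), ignores pull request actions it does not review, and turns validation errors into the text of a PR comment. The `review` callback stands in for the taxonomy and governance checks and is hypothetical, as are the function names and the comment wording.

```python
import hashlib
import hmac
import json

# Pull request actions that should trigger a review of the submitted resources.
REVIEWED_ACTIONS = {"opened", "synchronize", "reopened"}


def signature_is_valid(secret: bytes, body: bytes, header: str) -> bool:
    """Check the X-Hub-Signature-256 header GitHub sends with each webhook."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the digest matched.
    return hmac.compare_digest(expected.encode(), header.encode())


def handle_webhook(secret: bytes, body: bytes, header: str, review):
    """Return the comment to post on the PR, or None if the event is ignored.

    `review` is a hypothetical callback: it takes the PR number and returns
    a list of validation error strings (empty when everything is valid).
    """
    if not signature_is_valid(secret, body, header):
        return None  # not signed with our webhook secret
    event = json.loads(body)
    if event.get("action") not in REVIEWED_ACTIONS:
        return None  # e.g. "closed" or "labeled": nothing to review
    errors = review(event["number"])
    if errors:
        return "Validation failed:\n" + "\n".join(f"- {e}" for e in errors)
    return "All resources are valid and have been applied."
```

Returning the comment text rather than posting it keeps the sketch free of network calls; a real agent would post it back through the GitHub API and call the Kong Admin API before reporting success.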

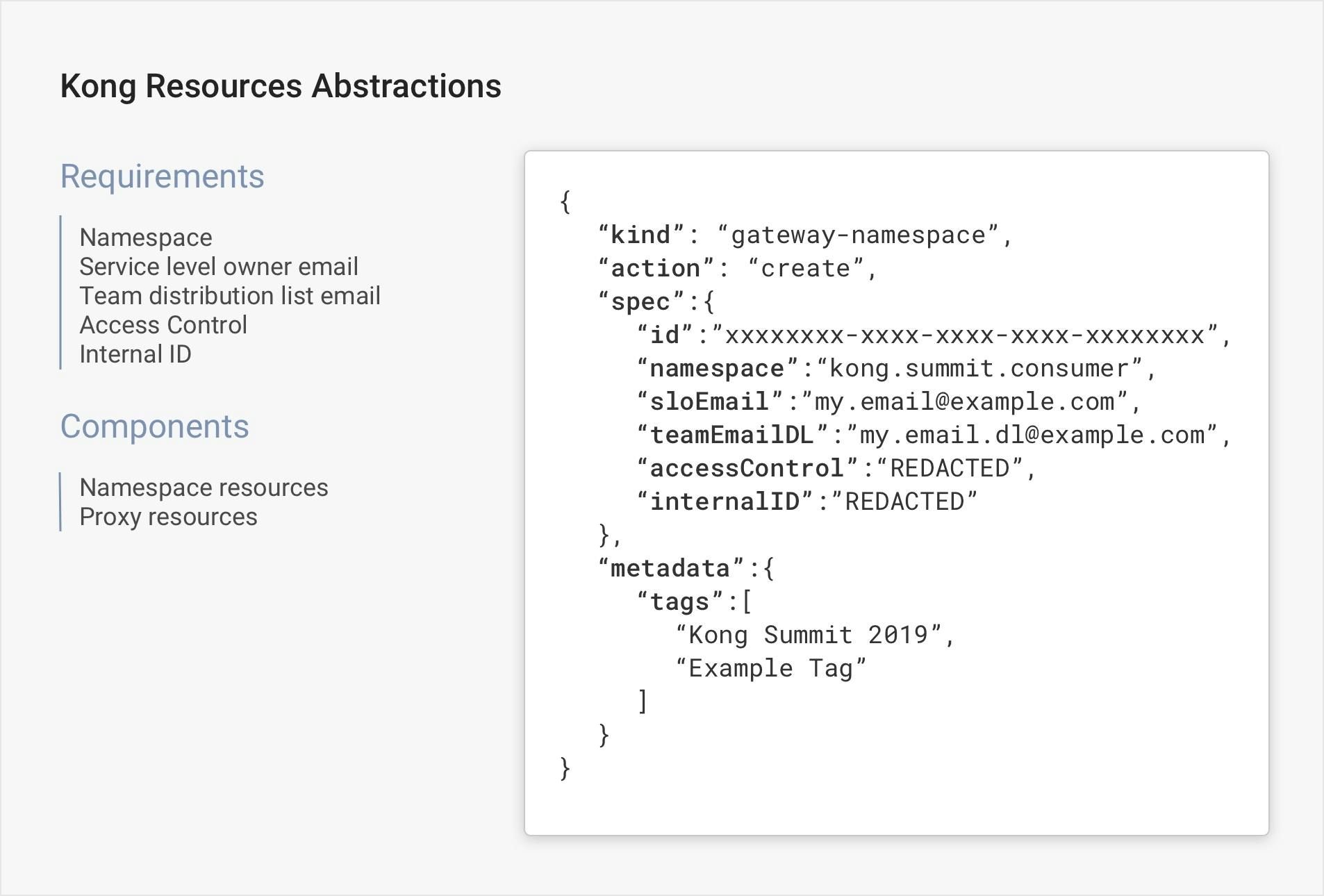

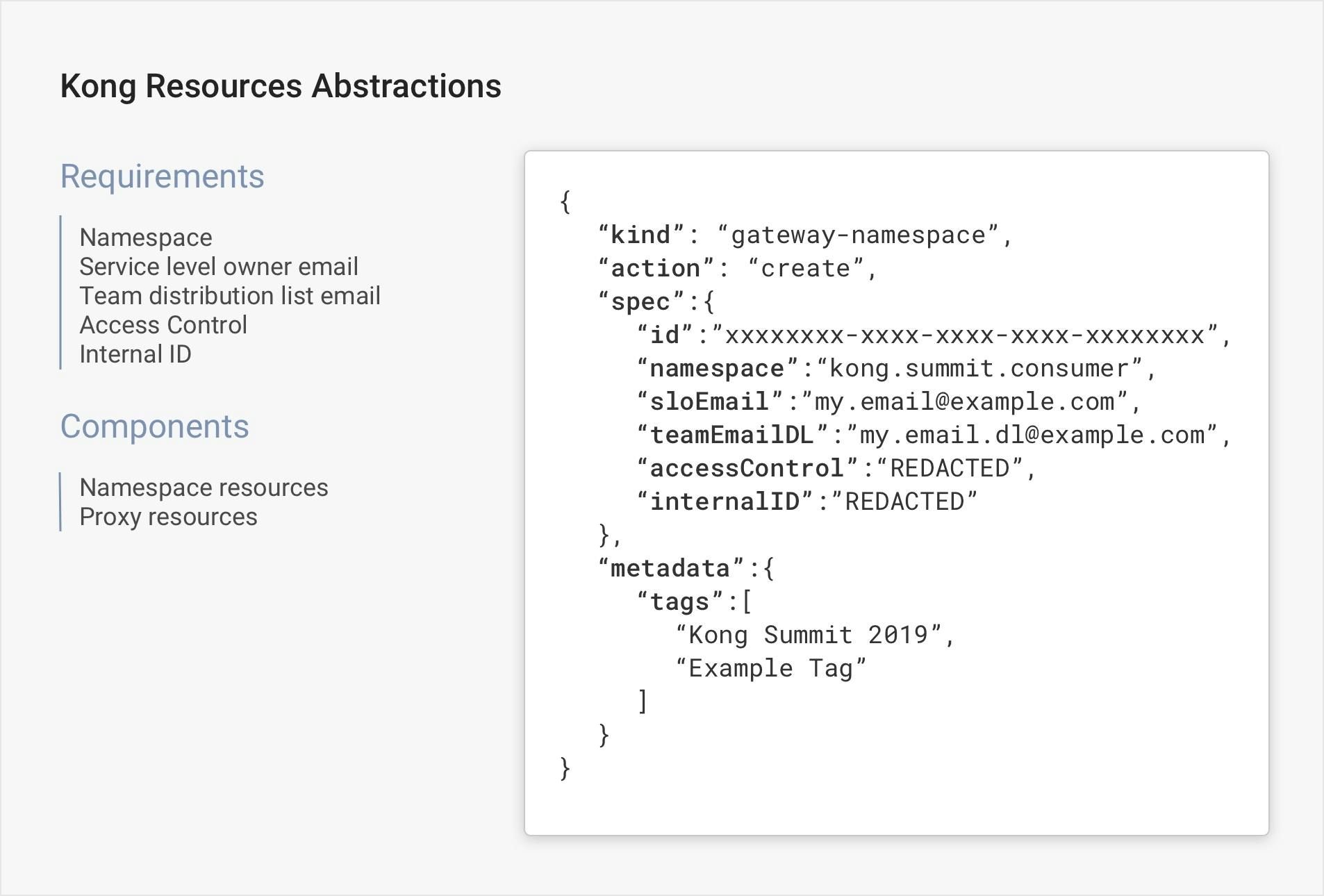

The technology behind this model includes some high-level self-service resources. We have the Namespace resource, which boils down to a Kong consumer plus the credential pairs for calling the proxies. It comes down to a Kong username, a couple of contact details and access control that hooks into internal company access systems. Then, we have the proxy resource, which boils down to creating a Kong service, a Kong route and any plugins that the customer would need. The customer doesn’t have to know the underlying technologies with which we’re working. Anyone who understands an API gateway can use these resources intuitively.
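As a rough sketch of that mapping, the Python below translates the two resources into the Kong Admin API calls they would imply: a consumer for a namespace, and a service, a route and plugins for a proxy. The class and field names are hypothetical (the article does not publish the resource schema), and the code only builds the requests as `(method, path, payload)` tuples instead of sending them. Credential creation is left out because it depends on which authentication plugin is in use.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    """Hypothetical shape of the self-service Namespace resource."""
    username: str
    contacts: list[str] = field(default_factory=list)  # kept outside Kong


@dataclass
class Proxy:
    """Hypothetical shape of the self-service proxy resource."""
    name: str
    upstream_url: str
    paths: list[str]
    plugins: list[str] = field(default_factory=list)


def namespace_requests(ns: Namespace) -> list[tuple[str, str, dict]]:
    """A namespace becomes a Kong consumer (credentials are plugin-specific)."""
    return [("POST", "/consumers", {"username": ns.username})]


def proxy_requests(proxy: Proxy) -> list[tuple[str, str, dict]]:
    """A proxy becomes a Kong service, a route on it, and its plugins."""
    calls = [
        ("POST", "/services", {"name": proxy.name, "url": proxy.upstream_url}),
        ("POST", f"/services/{proxy.name}/routes",
         {"name": proxy.name, "paths": proxy.paths}),
    ]
    for plugin in proxy.plugins:
        # Plugin configuration is omitted; most plugins need a "config" object.
        calls.append(("POST", f"/services/{proxy.name}/plugins", {"name": plugin}))
    return calls
```

Keeping the translation in one place is what lets customers describe what they want in gateway terms without learning the Admin API itself.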

Kong Gateway Enables Innovation at UnitedHealth Group

With the self-service adoption we developed on top of Kong’s API gateway, we have over 300 unique users leveraging this internally, making 2,000 interactions every month. So that equals 2,000 times we didn’t have to manually talk to a customer and work with them to process their proxies and resources. That frees up a lot of developer time.

We like the native GitHub integration where you can see who changed what resources, when and why. Our self-service tooling integrates with Prometheus and Grafana to create dashboards with alerting. That way, API providers can know when their services are failing as soon as they start failing.

Even though we have many APIs, consumers and transactions, we’re confident that Kong will be able to support our cloud native journey.

With Kong, we’ve seen an 85 percent reduction in gateway overhead compared to our previous proprietary solution. Kong is also 90 percent more resource-efficient. In the above graph, you can see the results of three separate comparison tests. In blue, we have a test directly against an API. It happens to be a Golang API, and it’s pretty fast, with an average response time of six milliseconds. In orange, we see the same API with a Kong proxy in front of it; the average response time is 17 milliseconds. The skyscraper in the chart (112 milliseconds) is our previous solution, so you can see how much better Kong performs.
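For readers who want to see how "gateway overhead" can be derived from those three averages, here is a small Python calculation. It assumes overhead means the response time through a gateway minus the response time of the API called directly; the article does not define the term. The result is for this one chart only and comes out a little higher than the 85 percent headline, which presumably summarizes more than a single test (also an assumption).

```python
def overhead_ms(through_gateway_ms: float, direct_ms: float) -> float:
    """Latency added by a gateway: proxied response time minus direct time."""
    return through_gateway_ms - direct_ms


# Average response times quoted in the text, in milliseconds.
DIRECT_MS = 6.0      # Golang API called directly
KONG_MS = 17.0       # same API behind Kong
PREVIOUS_MS = 112.0  # same API behind the previous gateway

kong_overhead = overhead_ms(KONG_MS, DIRECT_MS)          # 11 ms
previous_overhead = overhead_ms(PREVIOUS_MS, DIRECT_MS)  # 106 ms

# Fraction of the old gateway's added latency that Kong eliminates.
reduction = 1 - kong_overhead / previous_overhead

print(f"Kong overhead: {kong_overhead:.0f} ms")
print(f"Previous gateway overhead: {previous_overhead:.0f} ms")
print(f"Reduction in overhead: {reduction:.1%}")  # 89.6%
```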

Lower Costs

Going from a proprietary licensed solution to Kong Gateway eliminated our licensing fees. Since Kong is more extensible, we could engineer DevOps-enabling tools around it, reducing our operations staffing by 85 percent.

Fewer Resources

Kong is much more resource-efficient, meaning we can run it with less hardware at the same capacity. With Kong, we reduced our API management service cost by 95 percent. For almost two years after we started with Kong, we were:

- Supporting the operations of a 300,000-person company

- Pursuing all of our engineering goals

- Getting monitoring and alerts set up

- Iterating and testing every time a new version of Kong came out

2020 and Beyond With Kong

Kong and Optum have seen some remarkable growth in adoption within the cloud native space. In 2019, our open source, Kong-based gateway platform hosted about 1,900 proxies and handled 375 million transactions per month. In 2020, both metrics grew sharply, to more than 11,000 proxies (almost six times as many) and 4.5 billion transactions per month (a twelve-fold increase). That works out to about 150 million transactions per day.