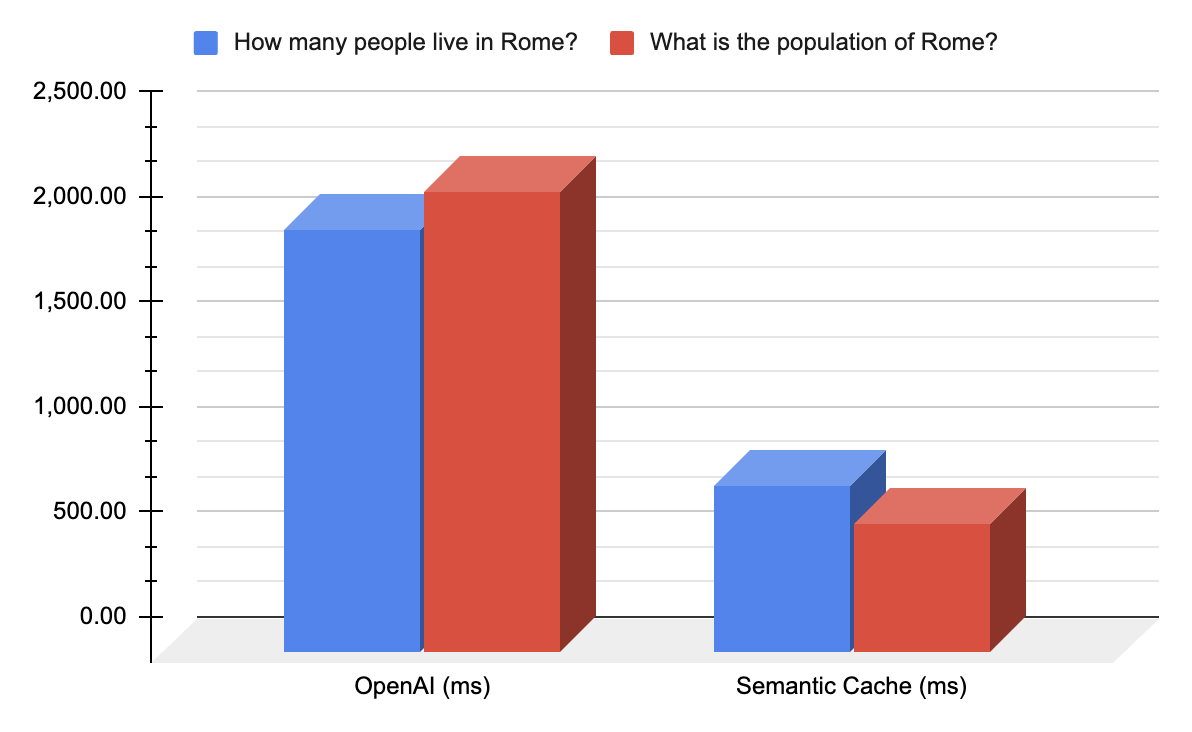

With semantic caching, Kong AI Gateway can significantly accelerate GenAI traffic. Lower values indicate lower latency.

The results of this benchmark show that GenAI-powered end-user experiences could be delivered 3-4x faster with Kong AI Gateway, which is pretty neat. In some cases, the improvement exceeds 10x.
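Conceptually, a semantic cache saves time by skipping the LLM call when a new prompt is close enough in meaning to one that has already been answered. The Python sketch below illustrates that idea with a toy in-memory cache; it is not Kong's implementation. The `SemanticCache` class, the 0.95 similarity threshold, and the hand-made three-dimensional vectors standing in for a real embedding model are all hypothetical.

```python
import math
from typing import Callable, Optional

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical in-memory semantic cache; not Kong's implementation."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.95):
        self.embed = embed          # stand-in for a real embedding model
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries: list[tuple[Vector, str]] = []

    def lookup(self, prompt: str) -> Optional[str]:
        """Return the response for the most similar cached prompt, if similar enough."""
        query = self.embed(prompt)
        best_score, best_response = -1.0, None
        for vector, response in self.entries:  # linear scan; a vector DB would index this
            score = cosine_similarity(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def get_or_call(self, prompt: str, call_llm: Callable[[str], str]) -> str:
        cached = self.lookup(prompt)
        if cached is not None:
            return cached  # cache hit: no LLM round trip
        response = call_llm(prompt)  # cache miss: pay the full LLM latency once
        self.entries.append((self.embed(prompt), response))
        return response


# Hypothetical 3-d "embeddings"; a real system would call an embedding model.
TOY_VECTORS = {
    "What is the capital of France?": [0.90, 0.10, 0.00],
    "Tell me France's capital city": [0.88, 0.15, 0.02],
    "How do I bake bread?": [0.00, 0.20, 0.95],
}

llm_calls: list[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in for a slow LLM call; records every prompt it receives."""
    llm_calls.append(prompt)
    return f"answer to: {prompt}"


cache = SemanticCache(embed=TOY_VECTORS.__getitem__)
first = cache.get_or_call("What is the capital of France?", fake_llm)
second = cache.get_or_call("Tell me France's capital city", fake_llm)  # served from cache
third = cache.get_or_call("How do I bake bread?", fake_llm)            # cache miss
```

The second prompt is worded differently but maps to a nearby vector, so it is answered from the cache and `fake_llm` runs only twice. A real deployment would typically keep the vectors in a vector database rather than scanning a Python list.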

Installing the semantic cache plugin is as easy as installing a regular Kong Gateway plugin. This is an example configuration:

Of course, there are many more configuration options, but this shows how easy the plugin is to use.
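As a rough illustration of enabling such a plugin through Kong's Admin API, the Python sketch below builds a JSON payload for `POST /services/{service}/plugins` without sending it. The plugin name `ai-semantic-cache` and every `config` field (embedding model, Redis vector database settings, distance metric, dimensions, threshold) are assumptions to verify against the AI Semantic Cache plugin reference for your Kong version. The Admin API address, service name, model, and Redis host are placeholders, embedding-provider credentials are omitted, and the sketch assumes the service already routes LLM traffic through the AI Proxy plugin.

```python
import json
import urllib.request

# Placeholders: adjust to your own Kong Admin API and service.
ADMIN_API = "http://localhost:8001"
SERVICE = "openai-chat"  # hypothetical service already using the AI Proxy plugin


def semantic_cache_payload(embedding_model: str, redis_host: str,
                           redis_port: int = 6379, dimensions: int = 1536,
                           threshold: float = 0.1) -> dict:
    """Build a plugin payload. Field names are assumptions; verify them
    against the AI Semantic Cache plugin reference for your Kong version."""
    return {
        "name": "ai-semantic-cache",
        "config": {
            "embeddings": {
                # Provider credentials (e.g. an API key header) are omitted here.
                "model": {"provider": "openai", "name": embedding_model},
            },
            "vectordb": {
                "strategy": "redis",
                "distance_metric": "cosine",
                "dimensions": dimensions,  # must match the embedding model's output size
                "threshold": threshold,    # hypothetical value; controls how close prompts must be
                "redis": {"host": redis_host, "port": redis_port},
            },
        },
    }


def build_request(payload: dict) -> urllib.request.Request:
    """Prepare, but do not send, POST /services/{service}/plugins."""
    return urllib.request.Request(
        f"{ADMIN_API}/services/{SERVICE}/plugins",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


payload = semantic_cache_payload("text-embedding-3-small", "redis.example.internal")
request = build_request(payload)
# urllib.request.urlopen(request) would send it to a running Kong node.
```

Keeping the payload in a plain function makes it easy to diff against the documented schema before applying it to a live gateway.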

Introducing AI Semantic Prompt Guard

Kong AI Gateway already supports allow-list and deny-list guardrails that block specific prompts via the “AI Prompt Guard” plugin. We're expanding this capability with a new “AI Semantic Prompt Guard” plugin.

Unlike the original AI Prompt Guard plugin, which uses regular expressions to match patterns, the new plugin evaluates the intent and meaning of a prompt, so it can block a prompt whether or not it contains a specific keyword.

For example, we can block “all political content” from being discussed through the AI Gateway, and the rule applies regardless of the actual words used in the prompt.
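To make the contrast concrete, the Python sketch below compares a regex deny-list, in the spirit of the original AI Prompt Guard, with a similarity check against a deny-listed topic. The regex pattern, the 0.8 threshold, and the hand-written vectors standing in for a real embedding model are hypothetical; the sketch illustrates the idea and does not describe how Kong implements the plugin.

```python
import math
import re

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Regex deny-list in the spirit of the original AI Prompt Guard (illustrative pattern).
DENY_PATTERNS = [re.compile(r"\b(elections?|politic\w*)\b", re.IGNORECASE)]


def regex_guard_blocks(prompt: str) -> bool:
    """Block only if a deny pattern literally appears in the prompt."""
    return any(pattern.search(prompt) for pattern in DENY_PATTERNS)


# Hypothetical 3-d vectors standing in for a real embedding model's output.
TOY_EMBEDDINGS = {
    "political content": [0.95, 0.05, 0.00],
    "Who should I vote for as the next president?": [0.90, 0.10, 0.05],
    "Summarize this quarterly sales report": [0.05, 0.10, 0.95],
}


def semantic_guard_blocks(prompt: str, deny_topics: list[str],
                          threshold: float = 0.8) -> bool:
    """Block if the prompt's vector is close to any deny-listed topic's vector."""
    prompt_vec = TOY_EMBEDDINGS[prompt]
    return any(
        cosine_similarity(prompt_vec, TOY_EMBEDDINGS[topic]) >= threshold
        for topic in deny_topics
    )


deny = ["political content"]
for text in ["Who should I vote for as the next president?",
             "Summarize this quarterly sales report"]:
    print(f"{text!r}: regex={regex_guard_blocks(text)}, "
          f"semantic={semantic_guard_blocks(text, deny)}")
```

The voting question contains none of the regex keywords, so the regex check lets it through, while its toy vector sits close to “political content” and the semantic check blocks it. The unrelated sales request is allowed by both.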