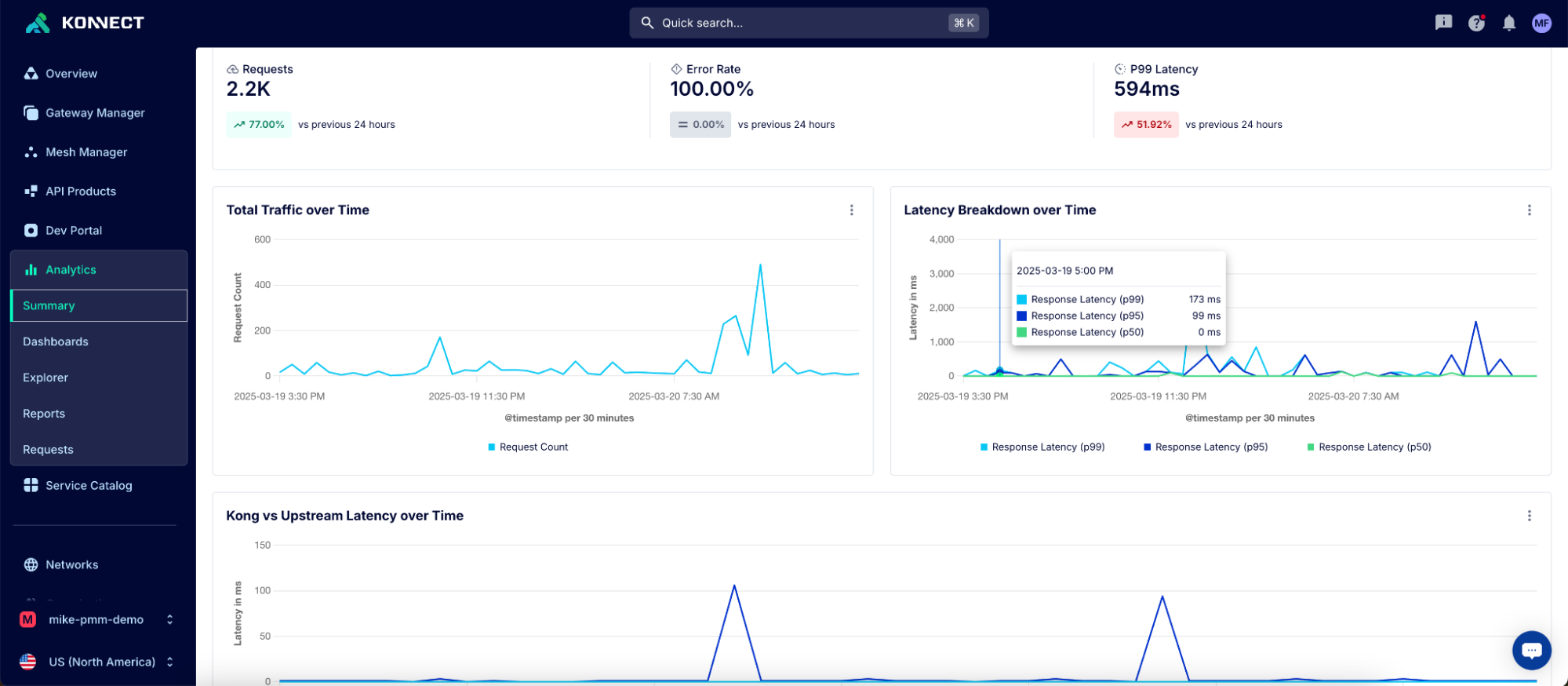

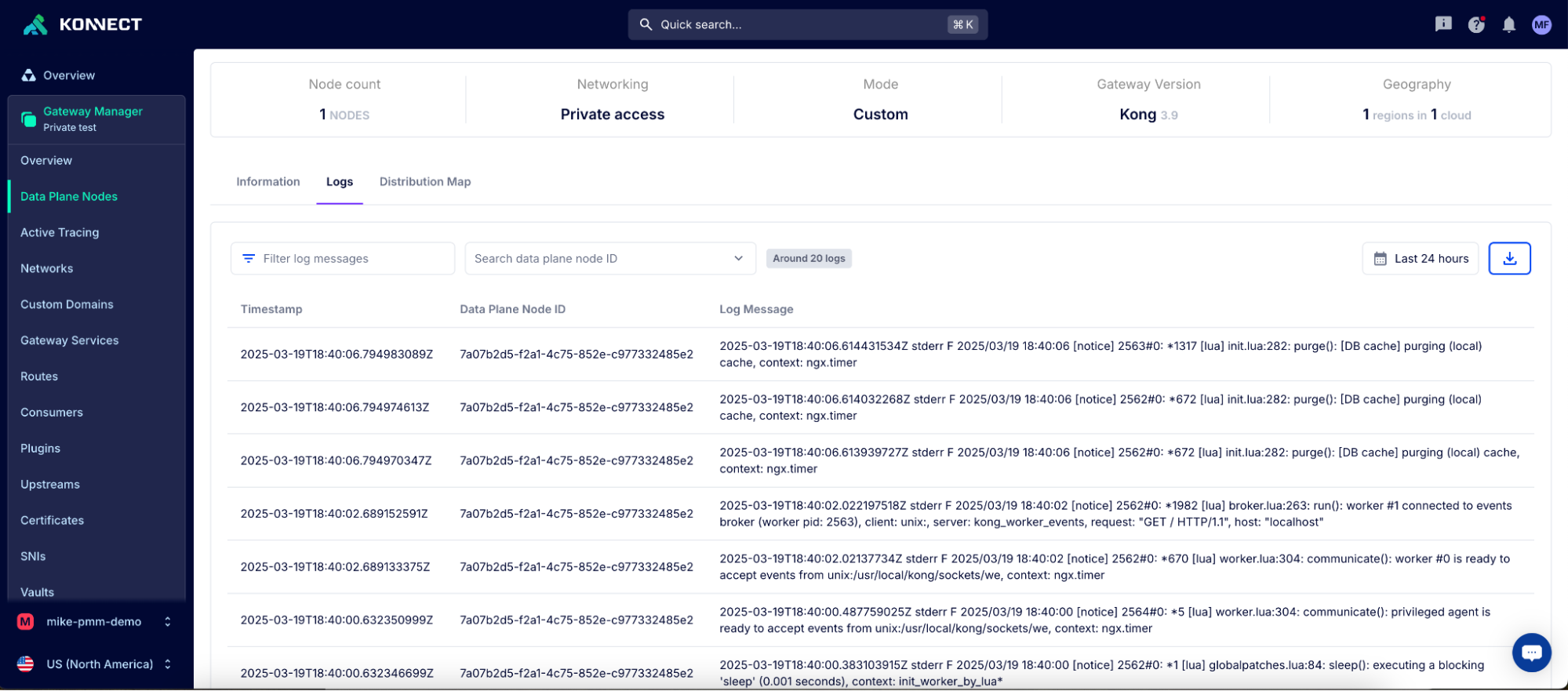

This is an essential tool for reducing mean time to resolution, because teams in Konnect can quickly pinpoint whether an issue is due to anything from a custom plugin to an upstream service to DNS resolution.

APIOps and migrations

The last, but certainly not least, piece of core DCGW functionality we’ll be looking at today is APIOps and automation. Now, when many people hear automation, they immediately think of increased efficiency and faster development cycles. And while these are certainly benefits, the core driver behind APIOps is stronger governance.

APIOps reduces the human error inherent in any click-ops approach through consistent, repeatable, and auditable automation pipelines, and Kong DCGWs' support for APIOps is best in class across dedicated APIM vendors and CSPs.

APIOps with Kong also has the added benefit of dramatically simplifying migrations. We’ll touch on that a bit at the end.

There are four main ways to build out your APIOps in Kong:

- Admin API

- decK

- Terraform provider

- Kong Operator

The details

Let’s first break down the two main approaches to APIOps: imperative and declarative.

The imperative approach involves defining step-by-step instructions to modify the API configuration directly. This method, often seen in CLI commands, scripts, or manual updates, provides more granular control but can be harder to maintain in complex environments. This approach can be enabled through Kong’s Admin API and it’s the approach to APIOps most gateway vendors provide.
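To make the imperative style concrete, here is a minimal sketch of what "step-by-step instructions" look like against Kong's Admin API. It only builds the HTTP requests and prints them; nothing is sent. The base URL (`http://localhost:8001`, the usual default for a self-managed gateway), the `orders` service, and its upstream address are assumptions for illustration, and a Konnect control plane would use a different base URL plus authentication.

```python
import json
from urllib.request import Request

# Assumption: default Admin API address of a self-managed Kong Gateway.
ADMIN_API = "http://localhost:8001"


def build_request(method, path, payload):
    """Build (but do not send) a JSON request against the Admin API."""
    return Request(
        url=f"{ADMIN_API}{path}",
        data=json.dumps(payload).encode("utf-8"),
        method=method,
        headers={"Content-Type": "application/json"},
    )


def imperative_steps():
    """Each change is its own explicit call, and the order matters."""
    # Step 1: create the service (hypothetical name and upstream URL).
    create_service = build_request(
        "POST", "/services",
        {"name": "orders", "url": "http://orders.internal:8080"},
    )
    # Step 2: attach a route to the service created in step 1.
    create_route = build_request(
        "POST", "/services/orders/routes",
        {"name": "orders-route", "paths": ["/orders"]},
    )
    return [create_service, create_route]


for req in imperative_steps():
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Notice that the script has to know the gateway's current state: running it twice would try to create the same service again, which is exactly the maintenance burden described above.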

In contrast, the declarative approach focuses on defining the desired state of the API configuration: you specify what the end result should look like (your routes, services, and policies, for example), and the system automatically handles the steps to achieve that state. While both approaches have their use cases, the declarative model is generally favored for its efficiency and alignment with modern DevOps practices, and it offers better predictability, version control, and scalability.
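The idea of "the system handles the steps" can be illustrated with a toy reconciler. This is a simplified sketch of the general diff-and-apply pattern, not how decK, the Terraform provider, or the Kong Operator are actually implemented; the `plan` function and the service names are hypothetical.

```python
def plan(current, desired):
    """Return the operations needed to turn `current` into `desired`.

    Both arguments map an entity name to its configuration.
    """
    ops = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            ops.append(("create", name))
        elif current[name] != spec:
            ops.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            ops.append(("delete", name))
    return ops


# What the gateway has today (hypothetical services).
current = {
    "orders": {"url": "http://orders.internal:8080"},
    "legacy": {"url": "http://legacy.internal:8080"},
}
# What the version-controlled file says it should have.
desired = {
    "orders": {"url": "http://orders-v2.internal:8080"},
    "payments": {"url": "http://payments.internal:8080"},
}

print(plan(current, desired))
# → [('update', 'orders'), ('create', 'payments'), ('delete', 'legacy')]

# Once the states match, there is nothing left to do.
print(plan(desired, desired))
# → []
```

Because the plan is derived from the difference between two states, applying the same desired state twice is harmless, which is where the predictability of the declarative model comes from.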

While many gateway vendors offer little to nothing to support declarative APIOps, Kong supports it in three distinct flavors: the decK CLI tool (short for declarative configuration), an officially supported Kong Terraform provider, and the Kong Operator with support for managing all Konnect entities as CRDs. This means that regardless of your preferred deployment environment, CI/CD infrastructure, and broad approach to APIOps, Kong’s DCGWs can seamlessly integrate and be managed as code.

Finally, let's quickly touch on how Kong’s APIOps tooling makes migrations dead simple. If you’re already using Kong, decK makes it a breeze to dump the configuration from an existing control plane and immediately sync it to your new control plane running DCGWs. If you’re coming from another solution, decK can convert your OpenAPI specifications to Kong’s declarative configuration format, Terraform manifests, or Kubernetes CRDs. This gives you the power to build out a workflow to quickly start using DCGWs with your existing APIs.
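As a rough sketch, the two migration paths might be scripted like this. The snippet only assembles and prints decK command lines; it does not run them. The control plane names and file names are placeholders, authentication (for example decK's `--konnect-token` flag) is omitted, and the subcommands and flags shown should be checked against `deck --help` for the decK version you have installed.

```python
import shlex


def dump_command(source_cp, out_file="kong.yaml"):
    """Export the configuration of an existing control plane to a file."""
    return ["deck", "gateway", "dump",
            "--konnect-control-plane-name", source_cp,
            "--output-file", out_file]


def sync_command(target_cp, state_file="kong.yaml"):
    """Apply a declarative file to the new control plane."""
    return ["deck", "gateway", "sync", state_file,
            "--konnect-control-plane-name", target_cp]


def openapi_command(spec_file, out_file="kong.yaml"):
    """Convert an OpenAPI specification into Kong declarative config."""
    return ["deck", "file", "openapi2kong",
            "--spec", spec_file,
            "--output-file", out_file]


# Path 1: already on Kong (placeholder control plane names).
existing_kong = [dump_command("old-cp"), sync_command("dcgw-cp")]
# Path 2: coming from another solution with an OpenAPI spec.
from_openapi = [openapi_command("openapi.yaml"), sync_command("dcgw-cp")]

for cmd in existing_kong + from_openapi:
    print(shlex.join(cmd))
```

Each command list can be handed to `subprocess.run(cmd, check=True)` in a CI job once the names and credentials match your environment.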

Conclusion

Now, it’s important to remember that DCGWs are just one offering within Kong Konnect’s federated API gateway model. It’s dead simple to manage a mixture of gateway deployment options so each team, business unit, or development environment can use what’s best for them.

But for any team looking to benefit from faster time to market, easier scalability, reduced operational overhead, and all the associated cost savings, the time to switch to DCGWs is clearly now.