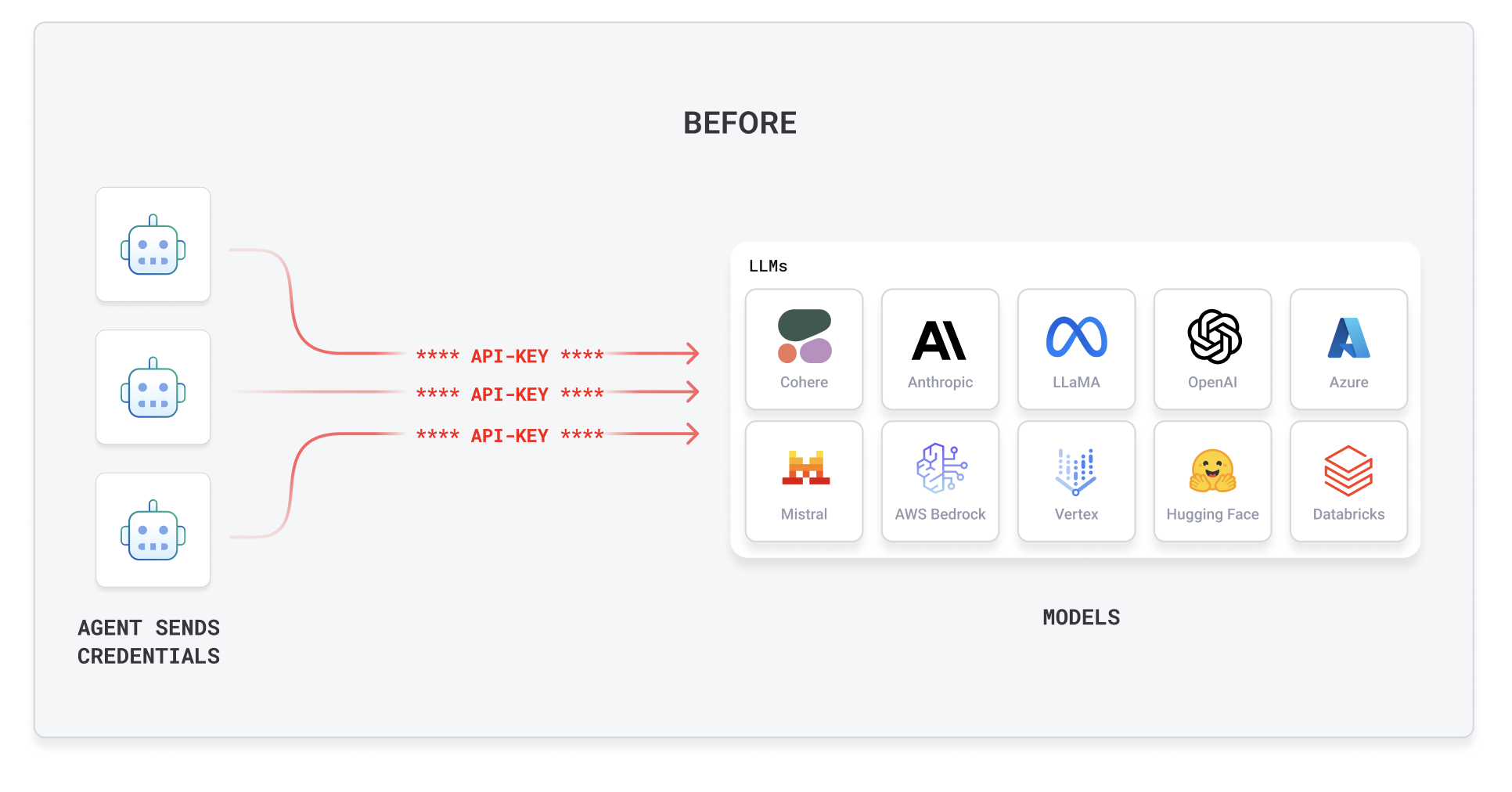

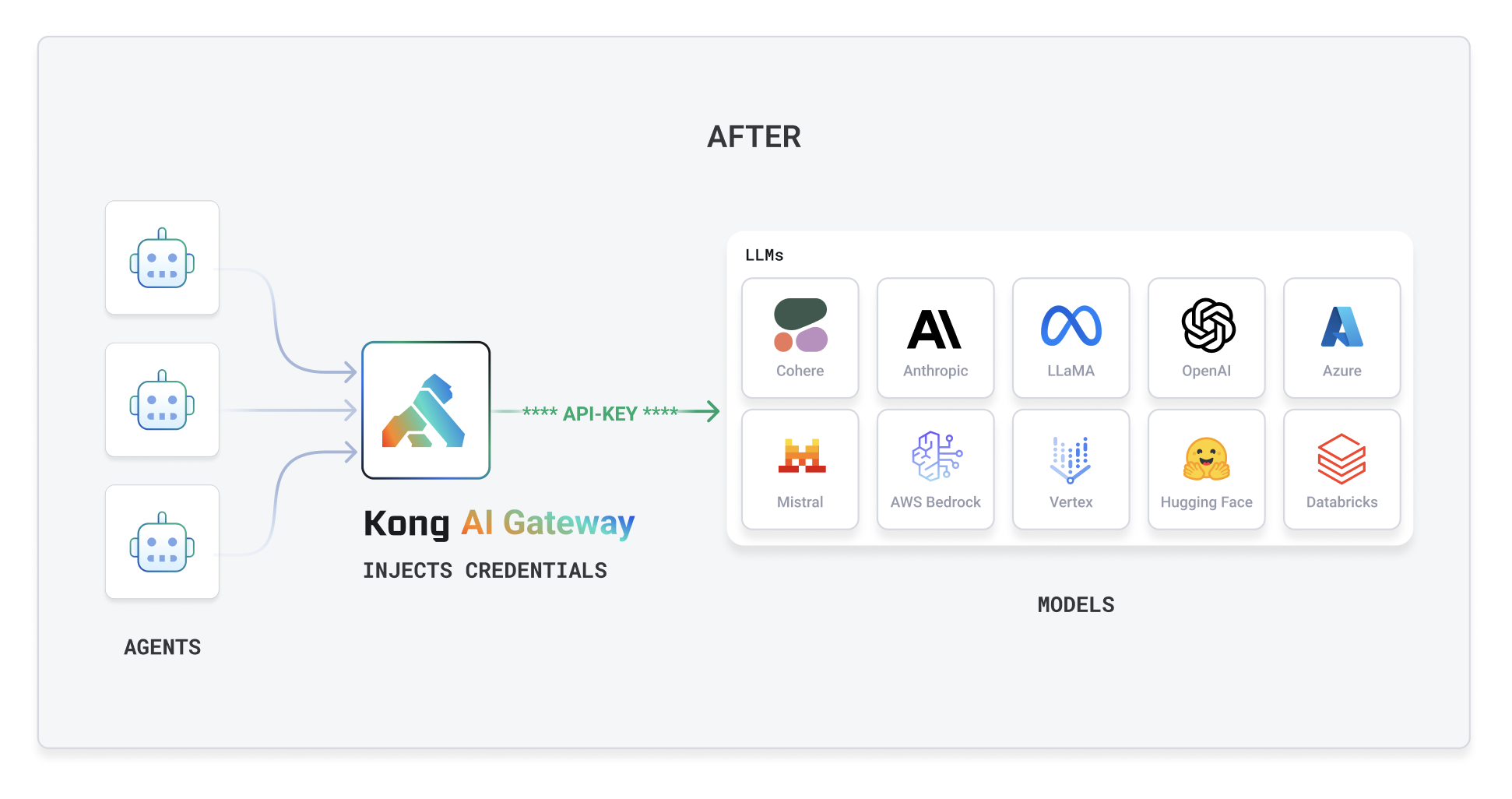

If you're a builder, you probably send your LLM credentials with every request from your agents and applications. In an enterprise environment, however, you'll want to keep those credentials in a secure third-party store, such as HashiCorp Vault or an identity provider (IdP), and have the infrastructure inject them dynamically at request time. By doing so, you get:

✅ No LLM credentials exposed in your agents

✅ Strong governance of LLM credentials in secure storage

✅ Ability to switch credentials on the fly without having to redeploy your agents

Here, the infrastructure that secures communication between agents and LLMs is an AI gateway, and the capability that lets you manage and configure this behavior is Kong Konnect AI Manager.
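To make the pattern concrete, here is a minimal Python sketch of gateway-side credential injection. It is not Kong's implementation: `SecretStore`, `Gateway`, the secret path `llm/openai`, and the key values are hypothetical, and the in-memory dictionary only stands in for a real store such as HashiCorp Vault. The point it illustrates is that the agent never holds the key, and because the gateway resolves the secret on each request, rotating it in the store takes effect without redeploying anything.

```python
from dataclasses import dataclass, field


@dataclass
class SecretStore:
    """Hypothetical stand-in for an external secret store such as HashiCorp Vault."""
    secrets: dict[str, str] = field(default_factory=dict)

    def read(self, path: str) -> str:
        return self.secrets[path]


@dataclass
class Gateway:
    """Hypothetical AI gateway that injects the LLM credential on every request."""
    store: SecretStore
    secret_path: str

    def forward(self, headers: dict[str, str]) -> dict[str, str]:
        # Drop any credential the agent may have sent; the gateway is the only source of the key.
        outbound = {k: v for k, v in headers.items() if k.lower() != "authorization"}
        # Resolve the secret at request time, so a rotation in the store applies immediately.
        outbound["Authorization"] = f"Bearer {self.store.read(self.secret_path)}"
        return outbound


# Usage: the agent sends no API key at all.
store = SecretStore({"llm/openai": "sk-old"})
gateway = Gateway(store, "llm/openai")
agent_headers = {"Content-Type": "application/json"}
print(gateway.forward(agent_headers)["Authorization"])  # Bearer sk-old

# Rotate the credential in the store; no agent redeploy is needed.
store.secrets["llm/openai"] = "sk-new"
print(gateway.forward(agent_headers)["Authorization"])  # Bearer sk-new
```

In a real deployment the lookup would typically be cached with a short TTL rather than hitting the store on every request; the sketch skips caching to keep the rotation behavior obvious.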

Today, we're introducing the ability to configure dynamic authentication with a third-party secret store, such as HashiCorp Vault, in AI Manager.