For the DevOps-averse developer, lambdas are heaven. They can focus on writing self-contained and modularized pieces of code, deploying these functions for on-demand execution without being concerned about resource management or infrastructure. Lambda execution, however, can be tricky. Serverless security with the AWS API Gateway can feel daunting, especially when all you want to do is call a simple function as an API endpoint. For this, there’s the ease of Kong Gateway.

This article and video will walk through how to set up Kong Gateway with the AWS Lambda plugin. Our mini-project will go through the following steps:

- Build a simple serverless function in Node.js, which processes a request’s header, query parameters and body, and then responds with JSON.

- Deploy our serverless function to AWS Lambda.

- Obtain the credentials we need to invoke our Lambda.

- Configure Kong Gateway to listen for requests on a specific, local path.

- Add the AWS Lambda plugin to Kong so that requests to that path invoke our Lambda and return the invocation result.

To follow along, you’ll need the following:

- An AWS account (our resource usage will likely be free tier).

- Basic familiarity with JavaScript and Node.js.

- AWS CLI installed on your local machine.

- Kong Gateway installed on your local machine. Follow the getting started instructions in the Kong Documentation.

Before we dive in, let’s cover some core concepts.

AWS Lambda

Years ago, the AWS Lambda service was synonymous with the term serverless. It was one of the first mainstream Function-as-a-Service (FaaS) offerings. With AWS Lambda, developers simply need to upload the code for a function.

Whenever you need to execute the function, AWS Lambda provisions the resources needed to execute the function and then de-provisions those resources when the serverless function execution is complete. Developers no longer need to be concerned with resource management because AWS Lambda handles it behind the scenes. AWS Lambda currently supports Node.js, Ruby, Python and several other languages.

Serverless Security

When it comes to deploying serverless code, security is a critical concern, and AWS offers some security best practices to keep in mind. Though there’s no longer a server that needs to be kept secure, the key concern is properly securing the invocation and updating of serverless function code.

Only those with proper authorization, whether that be a service or a human user, should be allowed to invoke or update serverless code. In the AWS space, this means establishing proper IAM policies and attaching them either to service-linked roles or to individual users. For AWS Lambda, the pertinent operations to lock down securely include InvokeFunction and UpdateFunctionCode.

Kong Gateway

Kong Gateway is a lightweight open source API gateway that sits in front of your upstream services. Easy to configure and quick to deploy, Kong Gateway serves as a central gatekeeper for routing all incoming requests to their intended destinations, whether that be an upstream API server, a third-party service, a database or even a function deployed to AWS Lambda.

As a thin layer between your user clients and your upstream services, the API gateway is further extended with countless Kong plugins to handle authentication, authorization, traffic control, security and more.

Step 1: Write Our Function

For our mini-project, we’ll work with a basic Lambda function written for Node.js. Taking our cues from the AWS documentation on sample function code, we craft the following code:
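A minimal sketch of such a function is shown here. It assumes the default `index.handler` entry point, and the message text is illustrative rather than taken from the AWS sample.

```javascript
// index.js: minimal sketch of a Node.js Lambda handler.
// Assumption: the message text is illustrative.
const handler = async (event) => {
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: { message: "Hello from my-lambda!" },
  };
};

// With the default handler setting (index.handler), Lambda calls this export.
exports.handler = handler;
```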

In short, our function responds with a status code, a simple header and a body with a message. We’ll expand on this function in a later step, making use of the incoming request’s headers, query string parameters and body. For now, though, this is good enough to get started.

Step 2: Deploy Function to AWS Lambda

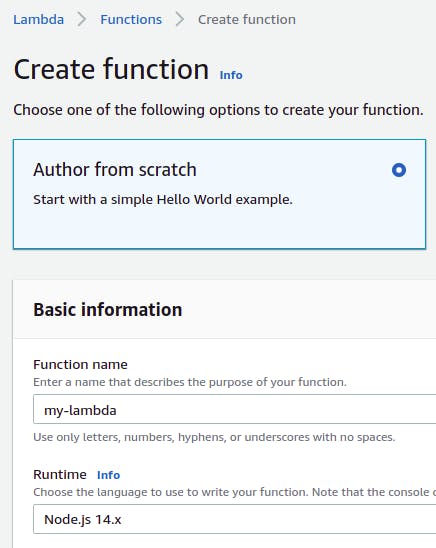

Next, we deploy our function code to AWS Lambda. We navigate to Lambda services in the AWS Console, ensuring we’ve selected a region for deployment. For the remainder of this example, we’ll use the us-west-2 region. Click on Create function.

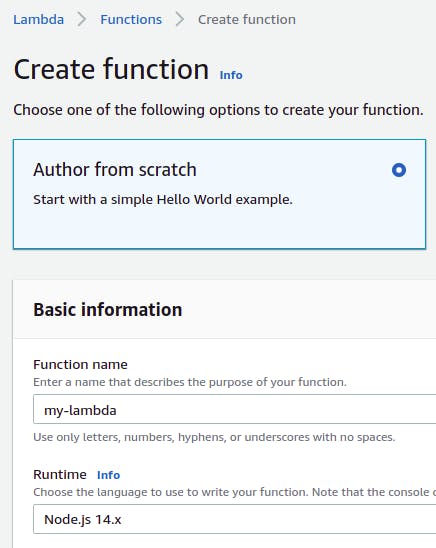

We’ll author our function from scratch, choosing a name for our function (my-lambda) and selecting a Node.js runtime (Node.js 14.x).

With our Lambda created, we navigate to Code source and double-click on index.js. Then, we paste in our function code from above.

Click on Deploy.

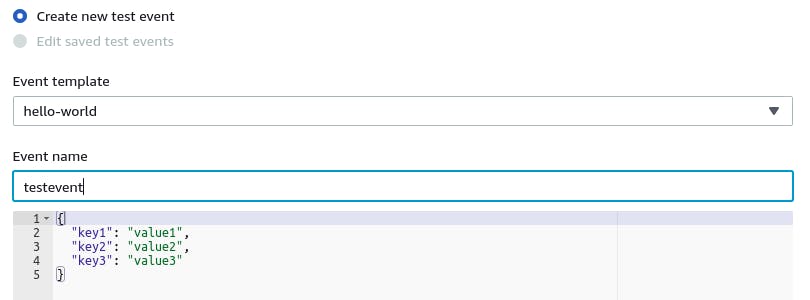

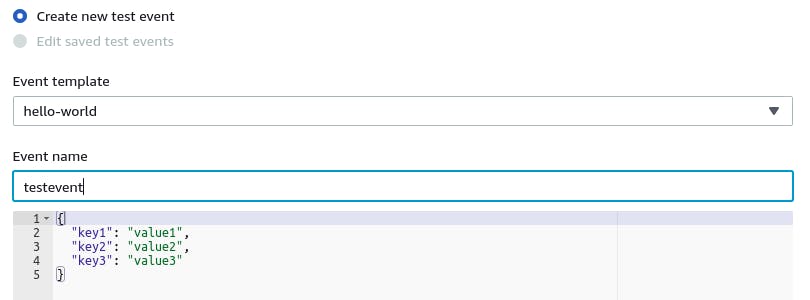

Just to make sure our function works, we can test an invocation call directly in our console. Click on Test and then on Configure Test Event. Use the default “hello world” template. Name your test event, keeping the provided default body. Click on Create.

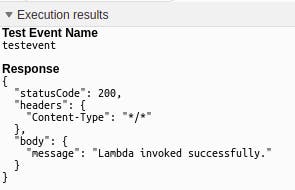

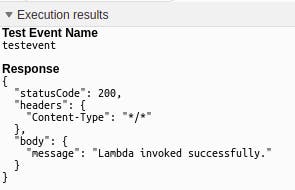

Now, with your test event selected, click on Test. The execution results show the result of our function call: a status code, headers and a body with a message.

Now that we’ve deployed our simple Lambda, we want to invoke our function remotely. Let’s head to the command line on our local machine.

Step 3: Obtain Credentials for Invoking Lambda

Of course, not just anybody should be allowed to invoke your Lambda. As we noted in the serverless security overview, we need to lock down both the ability to invoke our function and the ability to update its code. For our present example, we’ll focus on function invocation.

We need to provide credentials for an AWS IAM user that has permission to invoke this Lambda. This is clear when we use the AWS CLI to try to invoke our Lambda function without any credentials:

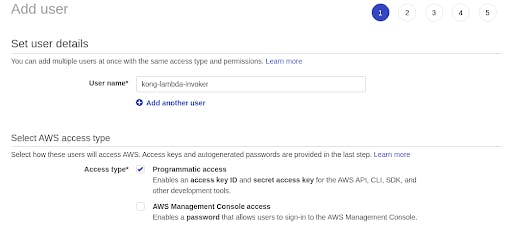

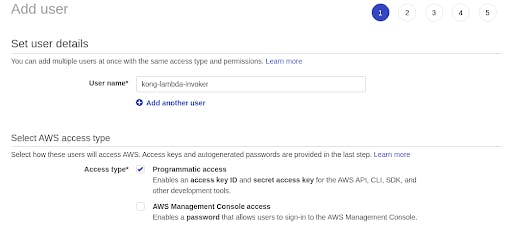

Our first step is to create an IAM user. In the AWS Console, navigate to the IAM service and then to Users. Create a new IAM user with a name of your choice, and give that user programmatic access. We’ll call our user kong-lambda-invoker.

Step through the permissions, tags and review, going with the defaults. We’ll create the user with no permissions to start with, then iterate from there. Click on Create User. Now, you have an “Access key ID” and a “Secret access key” for your new user.

Back at the command line, let’s set up these credentials with aws configure. At the prompts, we paste in the Access key ID and Secret access key from our IAM user creation result, and we also specify our region (us-west-2) and our default output format (json):

Now that we’ve configured our AWS credentials, we try to invoke our function from the command line once again:

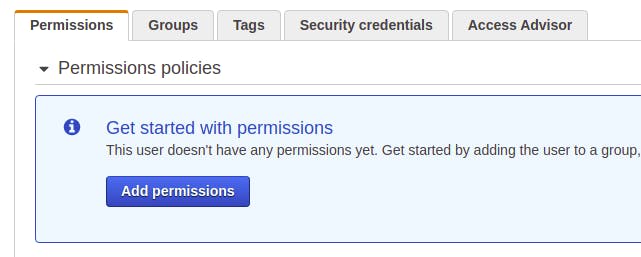

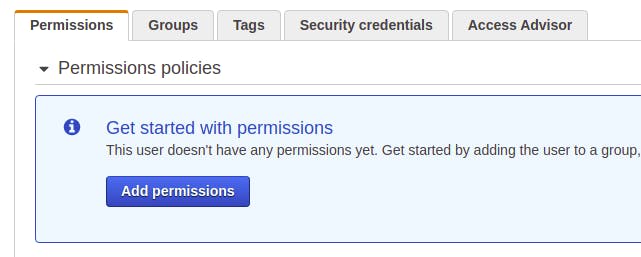

Progress! We are still unable to invoke our function, but this time, the reason is different. Our user, kong-lambda-invoker, just doesn’t have the permissions to invoke our function. Let’s provide those permissions. Back at AWS IAM, we select our user and click on Add permissions.

Next, click on Attach existing policies directly, and then Create policy. In the policy editor, click on JSON and paste in the following code. Make sure to replace REGION with the region where your Lambda is deployed (us-west-2). Also, replace AWS-ID with your AWS account ID.
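As a sketch of what that policy document contains, the short Node.js script below builds it and prints the JSON to paste into the editor. The helper name `buildInvokePolicy` is hypothetical. The policy grants only `lambda:InvokeFunction` on the single function, and REGION and AWS-ID remain placeholders that you fill in.

```javascript
// Builds a least-privilege IAM policy document that allows invoking one Lambda.
// buildInvokePolicy is a hypothetical helper name used for illustration.
const buildInvokePolicy = (region, accountId, functionName) => ({
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Action: "lambda:InvokeFunction",
      Resource: `arn:aws:lambda:${region}:${accountId}:function:${functionName}`,
    },
  ],
});

// REGION and AWS-ID are placeholders, as in the text above.
const policy = buildInvokePolicy("REGION", "AWS-ID", "my-lambda");
console.log(JSON.stringify(policy, null, 2));
```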

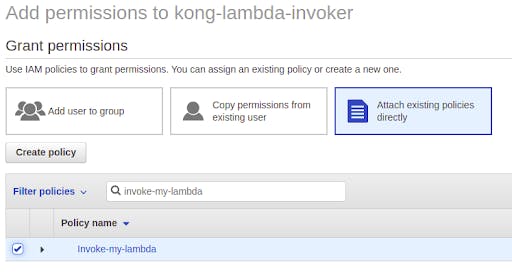

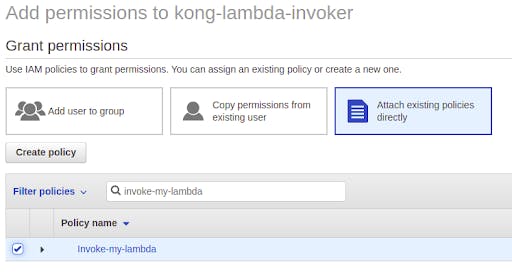

Click through the remaining policy creation sections, accepting all the defaults. Give the policy a name (for example, Invoke-my-lambda). Now that we’ve created the Lambda invocation policy, we’ll return to our IAM users and Add permissions for the kong-lambda-invoker user.

We Attach existing policies directly and then find the policy we just created. With the policy selected, we click through the remaining steps and attach the permissions to our user.

With a user and a policy in place, we can return to our command line and invoke the function again. It might take a minute or two for AWS to make the permissions changes to your user. Soon after, however, here is our result:

It works! We look at the output file, response.json, and see our 200 status and initial body with a message. With our Lambda function deployed and our credentials obtained, it’s time to configure Kong.

Step 4: Configure Kong Gateway

Depending on your operating system, the steps for installing Kong on your local machine will vary. Once the installation is complete, we have several configuration steps to follow.

Set Up Declarative Configuration

To use the AWS Lambda plugin, we can run Kong in db-less mode, meaning that we simply need to write a declarative configuration file that the system reads when we start up Kong. First, we’ll create a project folder and generate the boilerplate configuration file (kong.yml) with kong config init:

Next, we’ll configure Kong’s startup behavior to use that kong.yml for its configuration. To do this, we need to copy Kong’s kong.conf.default template file, name the copy as kong.conf, and then edit it:

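Now, let’s edit kong.conf to make a few changes, including setting database to off so that Kong runs in db-less mode and pointing declarative_config at the absolute path of the kong.yml file we just generated.

Next, we edit kong.yml. There, we add an upstream service (called my-service). This upstream service simply points to a site used for HTTP status code testing, which will return a 200. Then, we create a route (called my-lambda-route) that listens for requests on the /lambda path, forwarding those requests to our upstream service.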

Start Kong

With our declarative configuration file in place, we start up Kong:

Test With a Curl Request

We can use curl to send a request, seeing if Kong listens on the path correctly and forwards the request to the upstream service successfully:

Excellent. Kong is up and running. Next, we’ll make some modifications to our kong.yml file, adding in the AWS Lambda plugin.

Step 5: Add AWS Lambda Plugin to Kong

In its simplest form, the AWS Lambda plugin can be enabled on a route, completely removing the need for a route to be associated with an upstream service. Kong listens for requests on that route’s path, and then it invokes the remote AWS Lambda function with the parameters from that request. This is the approach that we’re going to take here.

Replace the contents of your kong.yml with the following. Make sure to use your IAM user’s access key ID and secret access key.
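One way to sketch that configuration is a small Node.js script that builds it as an object and prints it as JSON. This rests on several assumptions: Kong's declarative configuration can be supplied as JSON as well as YAML, `_format_version` "2.1" suits your Kong release, and reading the credentials from environment variables is a convenience of this sketch, not something the walk-through requires. The helper name `buildKongConfig` is hypothetical. The same keys translate directly to YAML for kong.yml.

```javascript
// Builds a declarative Kong configuration with one route and the aws-lambda plugin.
// Assumptions: _format_version "2.1" matches your Kong release; buildKongConfig
// is a hypothetical helper name.
const buildKongConfig = ({ awsKey, awsSecret }) => ({
  _format_version: "2.1",
  // No services section: the route is handled entirely by the plugin.
  routes: [{ name: "my-lambda-route", paths: ["/lambda"] }],
  plugins: [
    {
      name: "aws-lambda", // must be exactly this name to select the bundled plugin
      route: "my-lambda-route",
      config: {
        aws_key: awsKey,
        aws_secret: awsSecret,
        aws_region: "us-west-2",
        function_name: "my-lambda",
        forward_request_body: true,
        forward_request_headers: true,
        forward_request_uri: true,
      },
    },
  ],
});

// Credentials come from the environment here; the fallbacks are placeholders.
const config = buildKongConfig({
  awsKey: process.env.AWS_ACCESS_KEY_ID || "YOUR_ACCESS_KEY_ID",
  awsSecret: process.env.AWS_SECRET_ACCESS_KEY || "YOUR_SECRET_ACCESS_KEY",
});
console.log(JSON.stringify(config, null, 2));
```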

You’ll notice that we have removed our upstream service. Our route is no longer associated with a service since requests will go to the Lambda invocation instead. We have added a plugins section with our aws-lambda plugin. Note that aws-lambda is not an arbitrary name but refers specifically to the AWS Lambda plugin bundled into Kong.

We can associate our plugin with our route (my-lambda-route) and the Lambda function we’ve deployed at AWS. We include our IAM user’s credentials, deployment region and function name. Next, we configure the plugin to forward the request’s body, headers and URI to the Lambda invocation, which we’ll see in the function call’s event object shortly after modifying our function code. First, though, we’ll restart Kong and check that our Lambda is properly invoked.

Kong is up and running, invoking our Lambda as expected. Now, let’s update our Lambda function code to access the request header, query string parameters and body. Back in the AWS console for my-lambda, replace the code in index.js with the following:
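A sketch of the updated handler follows. It assumes that, with the forwarding options enabled, the plugin passes the request data in the event under `request_headers`, `request_uri_args` and `request_body`. Check the AWS Lambda plugin reference for your Kong version before relying on those field names.

```javascript
// index.js: sketch of a handler that echoes back what Kong forwarded.
// Assumption: the event fields request_headers, request_uri_args and
// request_body are populated by the aws-lambda plugin's forward_* options.
const handler = async (event) => {
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: {
      incoming_headers: event.request_headers || {},
      incoming_query_parameters: event.request_uri_args || {},
      incoming_body: event.request_body || null,
    },
  };
};

exports.handler = handler;
```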

Click on Deploy to deploy the updated code. We’ll use Insomnia to send requests and examine the results.

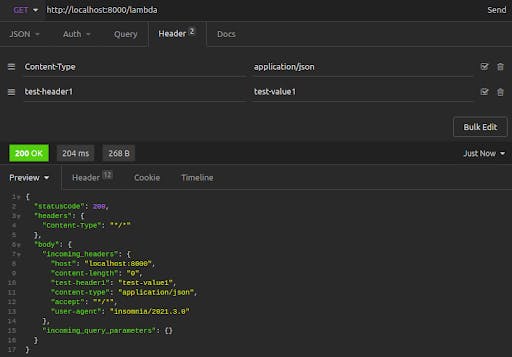

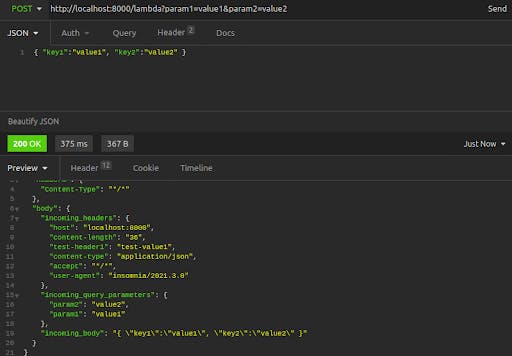

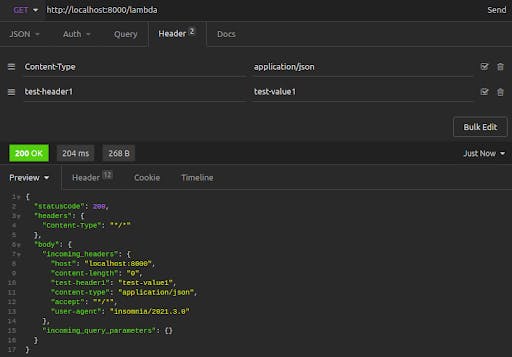

First, we make a basic request to our Kong proxy and path, with no parameters or body. We set a few additional headers of our own (including test-header1, with the value test-value1). We send the request to http://localhost:8000/lambda and receive the following response:

The resulting response body shows incoming_headers, which are the original headers that the Insomnia client sent to our Kong proxy, now forwarded to the Lambda invocation. These include the values that we explicitly set in Insomnia.

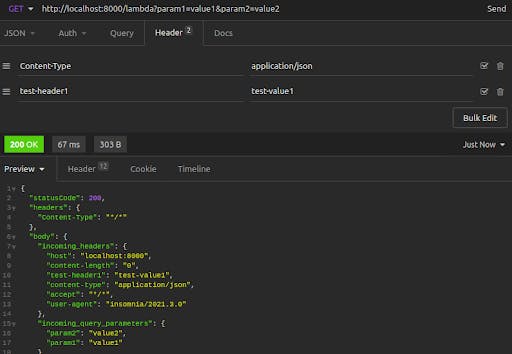

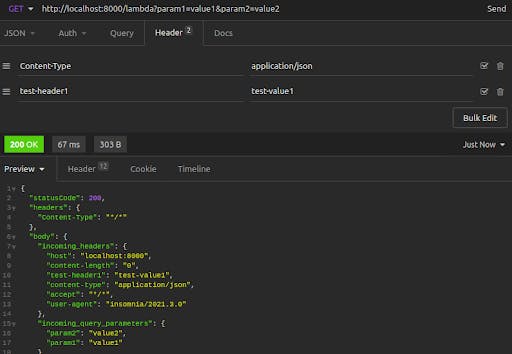

Next, we send another request, this time adding query string parameters onto our request URL.

The result shows our query string parameters echoed back to us in the incoming_query_parameters key of the response body.

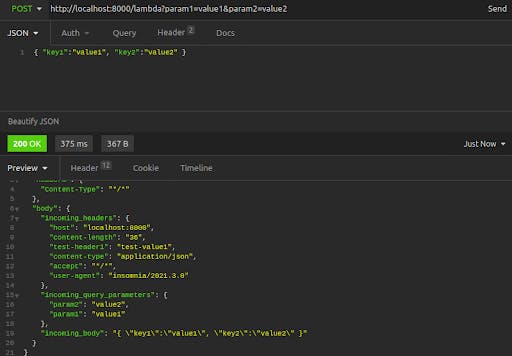

Lastly, we send a POST request and attach a JSON body, verifying that our Lambda function receives the body data.

In this final request, we see the incoming_headers, the incoming_query_parameters and the incoming_body, all populated correctly.
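For readers who prefer a script to a GUI client, the same final request can be sketched in Node.js. The helper name `buildLambdaRequest`, the query parameters and the body values are all hypothetical. Actually sending the request assumes Node.js 18 or later (for the built-in `fetch`) and Kong listening on localhost:8000.

```javascript
// Builds the URL and fetch options for a POST to the Kong route.
// buildLambdaRequest and the sample values are hypothetical.
const buildLambdaRequest = (baseUrl, query, body) => {
  const url = new URL("/lambda", baseUrl);
  for (const [key, value] of Object.entries(query)) {
    url.searchParams.set(key, value);
  }
  return {
    url: url.toString(),
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "test-header1": "test-value1",
      },
      body: JSON.stringify(body),
    },
  };
};

const request = buildLambdaRequest(
  "http://localhost:8000",
  { name: "kong", count: 2 },
  { greeting: "hello" }
);
console.log(request.url);

// To send it (requires Node.js 18+ and a running Kong):
// fetch(request.url, request.options)
//   .then((res) => res.json())
//   .then((json) => console.log(json));
```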

Our Lambda function works, and our Kong plugin to invoke our Lambda function works, too. This opens the doors to countless possibilities and usage patterns.

Other Use Cases

Asynchronous Calls With invocation_type=Event

The plugin default sets the invocation_type for our Lambda call to RequestResponse. This is a synchronous call that waits for the function execution to complete and return a result. Another common invocation pattern for AWS Lambda functions is the asynchronous invocation, which initiates or triggers a process without waiting for a response. For this, you can configure the plugin with invocation_type=Event.
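As a rough illustration, the plugin's `config` section with an explicit invocation type could be generated like this. The helper name `lambdaPluginConfig` is hypothetical, and the list of accepted values reflects the invocation types of the AWS Lambda Invoke API.

```javascript
// Returns the aws-lambda plugin `config` section with a chosen invocation type.
// lambdaPluginConfig is a hypothetical helper name.
const INVOCATION_TYPES = ["RequestResponse", "Event", "DryRun"];

const lambdaPluginConfig = (invocationType = "RequestResponse") => {
  if (!INVOCATION_TYPES.includes(invocationType)) {
    throw new Error(`Unsupported invocation_type: ${invocationType}`);
  }
  return {
    aws_region: "us-west-2",
    function_name: "my-lambda",
    invocation_type: invocationType,
  };
};

// Asynchronous invocation: the caller does not wait for the function result.
console.log(JSON.stringify(lambdaPluginConfig("Event"), null, 2));
```

With Event, AWS only acknowledges that the invocation was accepted, so the client should not expect the function's return value in the response.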

Within Kong, there’s tremendous potential for custom flows through the chaining of plugins. One such use case combines the Request Transformer plugin with the AWS Lambda plugin. Both plugins can be associated with a route. Then, all requests to that route can first have their requests transformed—perhaps additional headers or body data are added in a format easily consumable by the Lambda function—before being forwarded to the Lambda invocation.

Serverless Security Made Easy With Kong's AWS Lambda Plugin

With the completion of this walk-through under your belt, you can invoke your own Lambda functions through Kong’s AWS Lambda plugin. Your use case might dictate using the plugin with other plugins for powerful request handling, or you might use this route and plugin alongside other routes and upstream services managed by Kong.

Kong’s built-in plugin removes the complexity of Lambda serverless function execution. Developers simply start with deploying a function. Then, to ensure they follow serverless security best practices, they set up an IAM user (or service-linked role) with the appropriate permissions to invoke that function and update its code.

With serverless security in place, they then write a few configuration lines to tell Kong to use the plugin for invoking that function. The simplicity of using AWS Lambda—which made writing and deploying modular code pleasantly carefree for developers—just leveled up.
