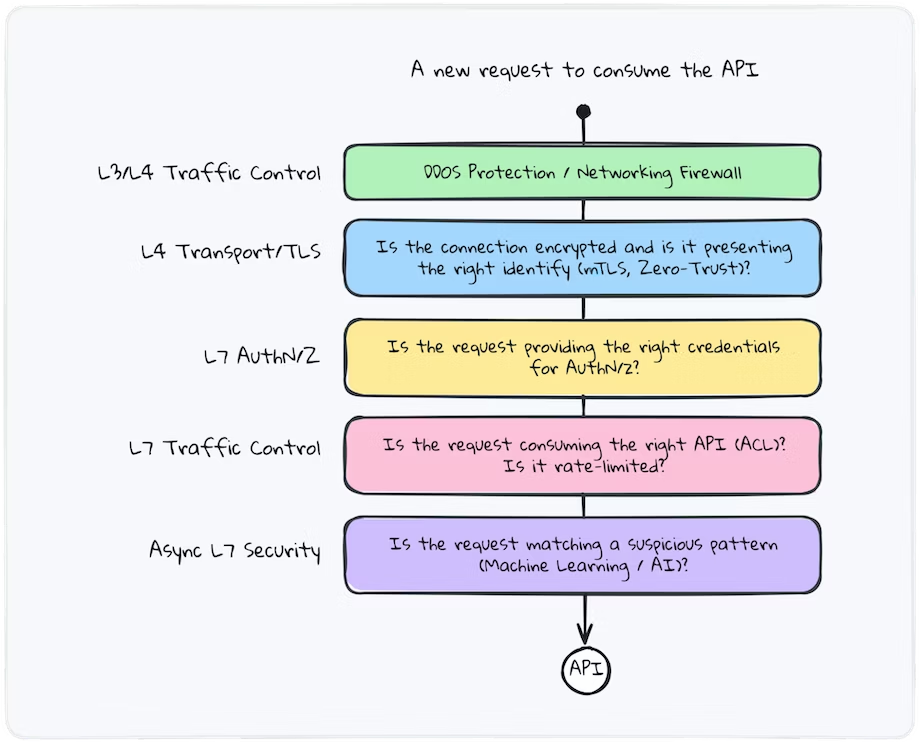

The multi-layered approach to API security.

As the name suggests, layered security refers to the practice of implementing multiple independent levels of cybersecurity to handle a wide range of potential network attacks, so that an attacker who gets past one control still faces the others.

Think of a company as an onion. At the center are the company’s valuable information and its stability. To reach the center, an attacker would need to peel back the outer layers one by one, and the more layers surround the center, the harder it is to reach. These onion layers represent the multiple levels of cybersecurity. With strong enough layered security in place, a company's information and inner workings become very difficult to access.

Layered security for threat protection

What sort of threats does a layered security approach help protect against?

- DDoS attacks — A DDoS (distributed denial of service) attack aims to overwhelm APIs and make them unavailable. A layered defense can use tools like web application firewalls, rate limiting, and load balancing to mitigate these attacks.

- Data breaches — APIs can expose sensitive data that needs to be protected. A layered approach applies controls like authentication, authorization, and encryption to safeguard data.

- Malware infections — Malicious actors may try to exploit APIs to plant malware. A layered security strategy scans for vulnerabilities, monitors for anomalies, and isolates compromised components.

- Injections — Attackers may inject malicious code or commands into API calls. Input validation, sanitization, and sandboxing can help prevent successful injections.

- Broken authentication — Flaws in authentication logic can enable account takeover or credential stuffing. A layered model enforces strong, multi-factor authentication across all API access points.

- Excessive usage — Unmetered API usage can facilitate denial of service and brute force attacks. Applying rate limiting protects against excessive calls.
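Rate limiting appears above as a defense against both DDoS attacks and excessive usage. It is often implemented with a token bucket. The sketch below is a minimal, single-process illustration that assumes each client is identified by a string ID. `TokenBucket` and `RateLimiter` are hypothetical names. Production systems usually enforce limits at an API gateway or in a shared store rather than in application memory.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Holds up to `capacity` tokens, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so short bursts are allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # each request spends one token
            return True
        return False  # bucket empty: reject (e.g. respond with HTTP 429)


class RateLimiter:
    """Keeps one bucket per client, so one noisy client cannot starve the others."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = self.buckets[client_id] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()
```

The injectable `clock` is a design choice that makes the limiter testable. Passing a fake clock lets you check the refill behavior without sleeping.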

A layered API security strategy combines multiple controls at different levels to provide defense in depth against common API threat vectors. The next section takes a closer look at each of these threats.
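Defense in depth can be pictured as a chain of independent checks that every request must pass in order. The first layer that objects stops the request. This is a minimal sketch, not a real framework. The `Request` type, `run_layers`, the token table, and the three example layers are all hypothetical stand-ins for what would normally be an identity provider, a policy engine, and a schema validator.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Request:
    client_id: str
    token: Optional[str]
    path: str
    body: str = ""


# A layer returns None to pass the request on, or a reason string to reject it.
Layer = Tuple[str, Callable[[Request], Optional[str]]]


def run_layers(request: Request, layers: List[Layer]) -> Tuple[bool, str]:
    """Apply each layer in order; the first rejection wins."""
    for name, check in layers:
        reason = check(request)
        if reason is not None:
            return False, f"rejected by {name}: {reason}"
    return True, "accepted"


# Hypothetical example layers.
VALID_TOKENS = {"t-123": "alice"}


def authenticate(req: Request) -> Optional[str]:
    return None if req.token in VALID_TOKENS else "missing or invalid token"


def authorize(req: Request) -> Optional[str]:
    return None if req.path.startswith("/orders") else "path not permitted"


def validate_input(req: Request) -> Optional[str]:
    return None if len(req.body) <= 1024 else "body too large"


LAYERS: List[Layer] = [
    ("authentication", authenticate),
    ("authorization", authorize),
    ("input validation", validate_input),
]
```

Because each layer is independent, a gap in one (say, an overly broad authorization rule) is still backed by the others, which is the point of the layered model.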

Threats that layered security protects against

Now that we have established the solution, let's look more closely at what specifically we are protecting against. Here are the major threats and how layered security can counter each of them:

- DDoS attacks flood an API with so much internet traffic that its services become unavailable. Under the sheer volume of requests, the API can no longer serve its legitimate clients. To prevent this form of attack, a layered security system can combine multiple tools such as web application firewalls, rate limiting, and load balancing to filter or absorb the flood and limit its impact.

- APIs are responsible for mediating data exchange between many applications, and sometimes valuable information slips through the cracks. This is known as a data breach: a person’s or company’s valuable data is leaked or taken without consent. From a layered security perspective, the remedy is to add controls such as authentication, authorization, and encryption to safeguard data.

- Malware (malicious software, a category that includes viruses, worms, and ransomware) can infect a wide range of technologies. Malicious actors might try to exploit weaknesses in APIs to plant malware. If it is not detected early, malware can be used to extract information or to give unauthorized people access to the system. To prevent this, a security layer can scan the API system for vulnerabilities, monitor for anomalies relative to normal traffic, and isolate compromised components.

- Injection attacks are a separate threat from malware infections, despite the similar-sounding name. Attackers embed malicious code or commands in API calls (SQL injection and command injection are common examples) so that the backend executes them. To prevent this form of attack, measures such as input validation, sanitization, and sandboxing can be layered alongside other protections.

- Security measures can themselves introduce openings. Authentication is one such layer, and flaws in how it is implemented let attackers break or bypass it. With a broken authentication process, attackers can take over accounts and carry out credential stuffing (replaying username and password pairs leaked from other breaches). Additional layers can monitor the authentication layer for abuse and enforce strong multi-factor authentication, so that a stolen password alone is not enough.

- Excessive usage can also open the door to attacks. Unmetered API usage can facilitate denial-of-service and brute-force attacks. To address this, API providers can apply rate limiting to cap how many calls each client can make.
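For injection attacks, input validation can be paired with parameterized queries. Parameterized queries are a widely used defense that the list above does not name explicitly; they keep user data separate from the query text. This is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, the `find_user` helper, and the username rule are hypothetical.

```python
import re
import sqlite3
from typing import Optional, Tuple

# Hypothetical rule: usernames are 3-32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


def find_user(conn: sqlite3.Connection, username: str) -> Optional[Tuple[int, str]]:
    # Layer 1: input validation rejects anything outside the expected shape.
    if not USERNAME_RE.fullmatch(username):
        raise ValueError("invalid username")
    # Layer 2: a parameterized query. The driver binds `username` as a value,
    # so it is never spliced into the SQL text and cannot change the query.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchone()
```

Even if the validation rule were too permissive, a payload such as `x' OR '1'='1` would still be treated by the parameterized query as a literal (and nonexistent) username rather than as SQL.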

These are a handful of the most common API security issues, but attackers are always finding ways to get the information they want. Security best practice is to implement a layered security system preemptively.

Low-level network security (L3/L4)

The first level of security is low-level network security at L3/L4, the network and transport layers of the OSI model.

This base level is especially important for edge APIs, which are meant to be consumed by third parties outside the organization. Here we want to inspect active traffic flows using features such as inbound encrypted traffic inspection, stateful inspection, and protocol detection. In the other direction, outbound traffic filtering helps prevent data loss, meet compliance requirements, and block communication with known malware. In short, we want to ensure that incoming traffic is legitimate before it ever reaches the next layer of security.
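To make stateful inspection and outbound filtering more concrete, here is a toy model in Python. It is a sketch under heavy simplifying assumptions and not how a real firewall is built. Packets are plain records, and a "flow" is just the protocol plus the source and destination address and port. The model never expires flows or tracks TCP handshake state, and it does not model encrypted traffic inspection or protocol detection. `Packet`, `StatefulFilter`, and the example blocklist are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class Packet:
    proto: str  # "tcp" or "udp"
    src: str    # source IP address
    sport: int  # source port
    dst: str    # destination IP address
    dport: int  # destination port


class StatefulFilter:
    """Toy L3/L4 filter: remembers outbound flows and admits their replies,
    plus new inbound connections to explicitly exposed service ports."""

    def __init__(self, exposed_ports: Iterable[Tuple[str, int]], blocked_networks: Iterable[str]):
        self.exposed_ports: Set[Tuple[str, int]] = set(exposed_ports)
        self.blocked = [ipaddress.ip_network(n) for n in blocked_networks]
        self.flows: Set[Tuple[str, str, int, str, int]] = set()

    def _is_blocked(self, addr: str) -> bool:
        ip = ipaddress.ip_address(addr)
        return any(ip in net for net in self.blocked)

    def allow_outbound(self, pkt: Packet) -> bool:
        # Egress filtering: never talk to known-bad destinations.
        if self._is_blocked(pkt.dst):
            return False
        # Record the flow so its reply can be recognized later.
        self.flows.add((pkt.proto, pkt.src, pkt.sport, pkt.dst, pkt.dport))
        return True

    def allow_inbound(self, pkt: Packet) -> bool:
        if self._is_blocked(pkt.src):
            return False
        # A reply to a flow we initiated has source and destination swapped.
        if (pkt.proto, pkt.dst, pkt.dport, pkt.src, pkt.sport) in self.flows:
            return True
        # Otherwise, only new connections to an exposed service are admitted.
        return (pkt.proto, pkt.dport) in self.exposed_ports
```

The "stateful" part is the `flows` set. A stateless filter would have to open high ports permanently to let replies back in, whereas this one admits a reply only because it matches a connection that was already initiated from inside.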

While these controls are required for external API traffic, forward-thinking organizations also apply tight security at this layer to internal traffic, guarding against malicious insiders and bots that could affect their systems. This is an important distinction: we’re protecting ourselves from both external and internal threats. Skipping internal protection leaves a gap, because an attacker may already be running malicious software inside the network through a backdoor outside the API perimeter and can use that foothold to reach the API infrastructure itself. Internal software cannot be trusted by default, which brings us to the next layer of security.

Zero trust security framework

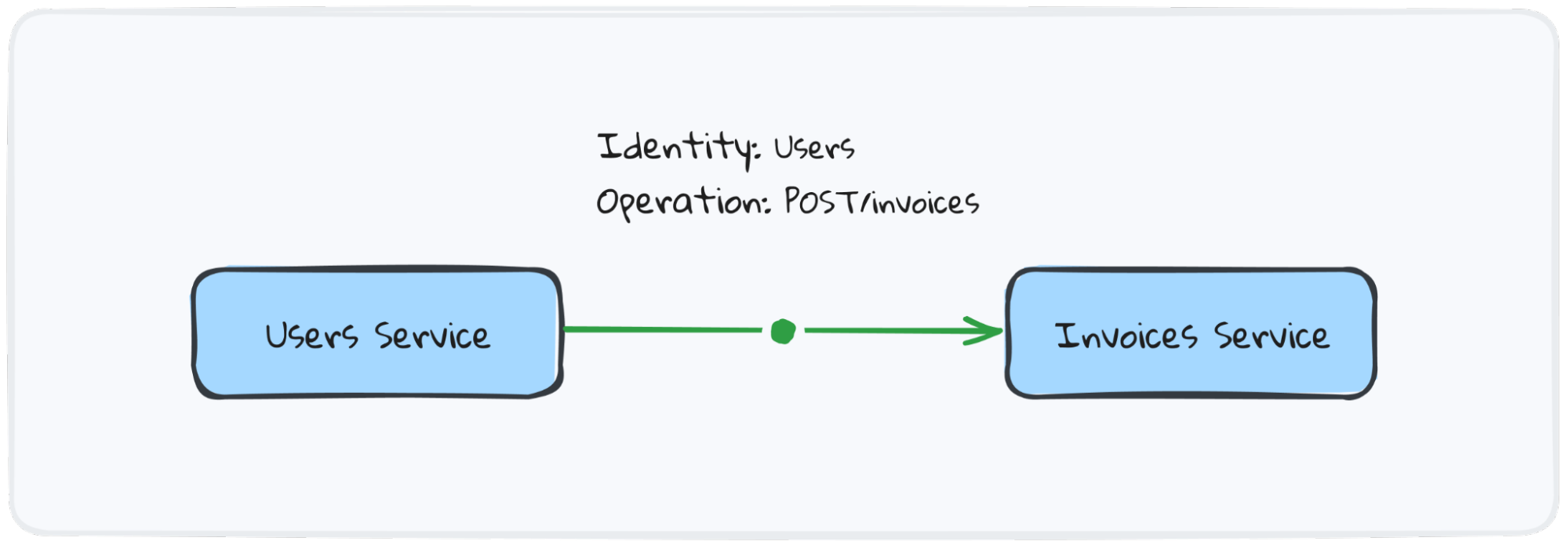

The second layer of security is zero trust: removing implicit trust from our applications and services. We can’t assume that a client is who it claims to be, whether that client is internal or external.

Consider an analogy: when traveling to a foreign country, we typically need to carry our passport to prove our identity at the border. The passport is a document that validates that we are who we claim to be. Without passports, immigration agents would have no way of knowing whether our identity is legitimate; they would have to take our word for it. How long would it take for malicious actors to exploit this system and start impersonating other people? How many criminals would simply walk into the country with no way to stop them?

The concept of “trust” is fundamentally exploitable — we can’t rely on it. We need to have a practical way to determine the identity of people, hence we need a passport that removes the concept of trust from our immigration process. We need zero trust.

Our APIs are like borders with no immigration control: anyone and anything can send requests to them and easily spoof the identity of other clients to perform malicious operations. To secure our “API borders,” we need a zero trust solution that validates the identity of every client. That passport can be an mTLS (mutual TLS) certificate issued to each instance of every service and validated whenever a connection is established, so that every request carries a verified identity.
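Here is a minimal sketch of what this can look like on the server side, using Python's standard `ssl` module. It assumes three things: an internal certificate authority issues each service instance a certificate; service identities are encoded as SPIFFE-style URI subject alternative names; and there is an allowlist of callers. The file paths, helper names, and identity strings are hypothetical. The CA check answers "is this certificate genuine?", while the allowlist answers "is this service allowed to call me?".

```python
import ssl
from typing import Collection, Optional


def make_mtls_server_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Server-side TLS context that refuses clients without a valid certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's own identity
    ctx.load_verify_locations(cafile=ca_file)  # internal CA that issues service certs
    ctx.verify_mode = ssl.CERT_REQUIRED  # handshake fails if the client has no valid cert
    return ctx


def peer_identity(peer_cert: Optional[dict]) -> Optional[str]:
    """Extract a SPIFFE-style URI SAN from the dict returned by getpeercert()."""
    if not peer_cert:
        return None
    for kind, value in peer_cert.get("subjectAltName", ()):
        if kind == "URI" and value.startswith("spiffe://"):
            return value
    return None


def is_authorized(peer_cert: Optional[dict], allowed_ids: Collection[str]) -> bool:
    """Zero trust: a valid certificate is necessary but not sufficient."""
    identity = peer_identity(peer_cert)
    return identity is not None and identity in allowed_ids
```

In use, the context would wrap a listening socket with `ctx.wrap_socket(sock, server_side=True)`. The result of `getpeercert()` on each accepted connection would then be passed to `is_authorized` before any request on that connection is handled.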