Kong AI Gateway and Amazon Bedrock

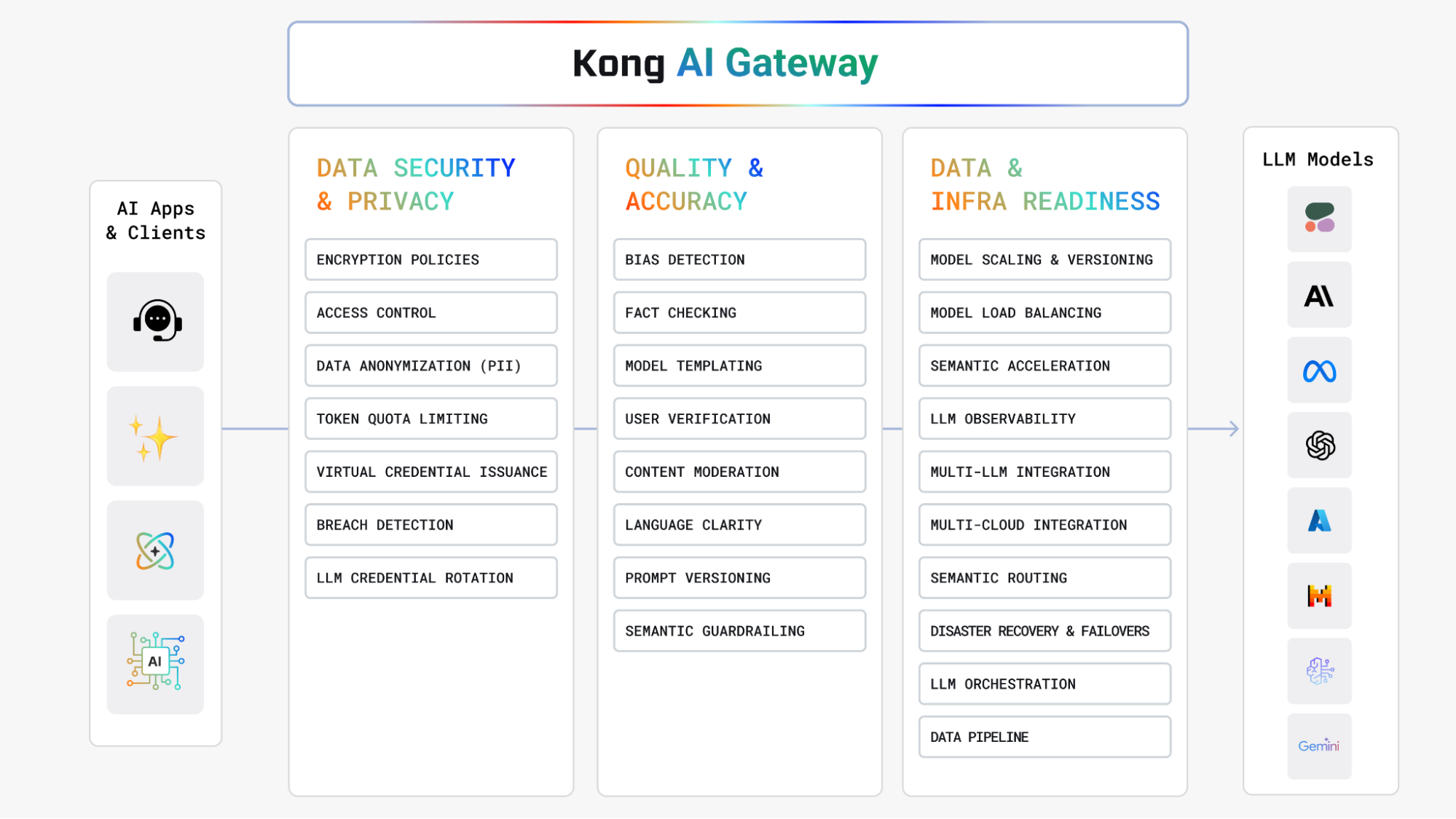

Let's start by discussing the benefits Kong AI Gateway brings to Amazon Bedrock for an LLM-based application. As we stated before, Kong AI Gateway leverages the existing Kong API Gateway extensibility model to provide specific AI-based plugins. Here are some of the capabilities provided by Kong AI Gateway and its plugins:

- AI Proxy and AI Proxy Advanced plugins: the Multi-LLM capability allows the AI Gateway to abstract Amazon Bedrock (and other LLM providers as well) and to load balance across models based on several policies, including latency, model usage, and semantics. These plugins extract LLM observability metrics (such as the number of requests, latencies, and errors for each LLM provider) and the number of incoming prompt tokens and outgoing response tokens. All this is in addition to the hundreds of metrics already provided by Kong Gateway on the underlying API requests and responses. Finally, Kong AI Gateway leverages the observability capabilities provided by Konnect to track Amazon Bedrock usage out of the box, as well as to generate reports based on monitoring data.

Prompt Engineering:

- AI Prompt Template plugin, which provides pre-configured AI prompts to users.

- AI Prompt Decorator plugin, which injects messages at the start or end of a caller's chat history.

- AI Prompt Guard plugin, which lets you configure a series of PCRE-compatible regular expressions to allow or block specific prompts, words, or phrases, giving you more control over an LLM service provided by Amazon Bedrock.

- AI Semantic Prompt Guard plugin, which provides self-configurable semantic (or pattern-matching) prompt protection.

- AI Semantic Cache plugin, which caches responses based on a similarity threshold to improve performance (and therefore end-user experience) and reduce cost.

- AI Rate Limiting Advanced plugin, which lets you tailor per-user or per-model policies based on the tokens returned by the LLM provider managed by Amazon Bedrock, or craft a custom function to count the tokens for requests.

- AI Request Transformer and AI Response Transformer plugins seamlessly integrate with the LLM on Amazon Bedrock, enabling introspection and transformation of the request's body before proxying it to the Upstream Service and prior to forwarding the response to the client.

In addition, Kong AI Gateway use cases can combine policies implemented by hundreds of Kong Gateway plugins, such as:

- Authentication and Authorization: OIDC, mTLS, API Key, LDAP, SAML, Open Policy Agent (OPA)

- Traffic Control: Request Validator and Size Limiting, WebSocket support, Route by Header, etc.

- Observability: OpenTelemetry (OTel), Prometheus, Zipkin, etc.

From an architecture perspective, the Konnect Control Plane and Data Plane node topology remains the same.

By leveraging the same underlying core of Kong Gateway, we're reducing complexity in deploying the AI Gateway capabilities as well. And of course, it works on Konnect, Kubernetes, self-hosted, or across multiple clouds.

Amazon Bedrock Foundation Models

This blog post uses several Foundation Models, including Prompt/Chat and Embeddings Models. Please make sure you have requested access to the following models:

- meta.llama3-70b-instruct-v1:0

- amazon.titan-embed-text-v1

Implementation Architecture

The RAG implementation architecture should include components representing and responsible for the functional scope described above. The architecture comprises:

- Redis as the Vector Database

- Amazon Bedrock supporting both Prompt/Chat and Embeddings Models

- Kong AI Gateway to abstract and protect Amazon Bedrock as well as implement LLM-based use cases.

Kong AI Gateway, implemented as a regular Konnect Data Plane Node, and Redis run on an Amazon EKS 1.31 cluster.

The architecture can be analyzed from two perspectives: Data Preparation time and actual Query time. Let's take a look at the Data Preparation time first.

Data Preparation time

The Data Preparation time is quite simple: the Document Loader component splits the source data into chunks and calls Amazon Bedrock, asking the Embedding Model to convert them into embeddings. The embeddings are then stored in the Redis Vector Database. Here's a diagram describing the flow:

For the purpose of this blog post, we're going to use the "amazon.titan-embed-text-v1" Embedding Model.
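To make the chunking step more concrete, here's a minimal, dependency-free sketch. The function name, chunk size, and overlap are assumptions chosen for illustration; the actual script described later relies on LangChain's text splitter rather than this helper.

```python
def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into fixed-size character chunks that overlap slightly.

    Hypothetical helper for illustration only.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("chunk_size must be positive and overlap smaller than chunk_size")
    step = chunk_size - overlap
    # Each chunk starts `step` characters after the previous one,
    # so consecutive chunks share `overlap` characters.
    return [text[start:start + chunk_size] for start in range(0, len(text), step)]


sample = "Kong AI Gateway sits in front of Amazon Bedrock. " * 10
chunks = split_into_chunks(sample, chunk_size=100, overlap=10)
print(len(chunks), "chunks; first chunk starts with:", chunks[0][:40])
```

Each chunk would then be sent to the Embedding Model, and the resulting vector stored in Redis next to the chunk text.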

Query time

Logically speaking, we can break the Query time into four main steps:

- The AI Application builds a Prompt and, using the same "amazon.titan-embed-text-v1" Embedding Model, generates embeddings for it.

- The AI Application takes the embeddings and semantically searches the Vector Database. The database returns relevant data related to the context.

- The AI Application sends the prompt, augmented with the retrieved data received from the Vector Database, to Kong AI Gateway.

- Kong AI Gateway, using the AI Proxy (or AI Proxy Advanced) plugin, proxies the request to Amazon Bedrock and returns the response to the AI Application. Kong AI Gateway enforces any policies previously enabled.
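The first three steps can be sketched with a toy, in-memory example. Everything here is an assumption made for illustration: the bag-of-words "embedding" stands in for the Bedrock Embedding Model, the Python list stands in for the Redis Vector Database, and the prompt layout is arbitrary.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for the embedding model: a simple bag-of-words vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query_vector = toy_embed(question)
    ranked = sorted(docs, key=lambda doc: cosine(query_vector, toy_embed(doc)), reverse=True)
    return ranked[:k]


def augment(question: str, context: list[str]) -> str:
    """Build the augmented prompt that would be sent to Kong AI Gateway."""
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question


docs = [
    "Kong AI Gateway proxies requests to Amazon Bedrock.",
    "Redis stores the embeddings as a vector database.",
]
question = "Which component stores the embeddings?"
prompt = augment(question, retrieve(question, docs))
print(prompt)
```

In the real application, the augmented prompt is what reaches Kong AI Gateway in the fourth step.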

Kong AI Gateway and Amazon Bedrock Integration and APIs

Let's take a look at the specific integration point between Kong AI Gateway and Amazon Bedrock. To get a better understanding, here's the part of the architecture that isolates both:

The consumer can be any REST-based component; in our case, it will be a LangChain application.

As you can see, there are two important topics here:

- OpenAI API specification

- Amazon Bedrock Converse API and EKS Pod Identity

Let's discuss each one of them.

OpenAI API support

Kong AI Gateway supports the OpenAI API specification. That means the consumer can send standard OpenAI requests to the Kong AI Gateway. As a basic example, consider this OpenAI request:
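A minimal sketch of such a request, built (but not sent) with Python's standard library, could look like the following. The model name, the prompt, and the API key placeholder are assumptions chosen for illustration.

```python
import json
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_openai_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build a standard OpenAI chat completions request without sending it."""
    body = {"model": model, "messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


# "gpt-4o" and the prompt are placeholders, not values taken from this post.
request = build_openai_request("<your-openai-api-key>", "gpt-4o", "What is an API gateway?")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To actually send it: urllib.request.urlopen(request)
```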

When we add Kong AI Gateway, sitting in front of Amazon Bedrock, we're not just exposing it but also allowing the consumers to use the same mechanism (in this case, OpenAI APIs) to consume it. That leads to a very flexible and powerful capability when it comes to development processes. In other words, Kong AI Gateway normalizes the consumption of any LLM infrastructure, including Amazon Bedrock, Mistral, OpenAI, Cohere, etc.

As an exercise, the new request should be something like this. The request has some minor differences:

- It sends a request to the Kong API Gateway Data Plane Node.

- It replaces the OpenAI endpoint with a Kong API Gateway route.

- The API Key is actually managed by the Kong API Gateway now.

- We're using an Amazon Bedrock Model, meta.llama3-70b-instruct-v1:0.
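The differences listed above can be sketched in Python as follows. The Data Plane hostname and the route path are hypothetical placeholders; the "apikey" header name and the "123456" value mirror the Key Auth setup used later in this post.

```python
import json
import urllib.request


def build_kong_request(data_plane_url: str, route_path: str, kong_api_key: str,
                       model: str, user_message: str) -> urllib.request.Request:
    """Build an OpenAI-style chat request aimed at a Kong route, without sending it."""
    body = {"model": model, "messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        data_plane_url.rstrip("/") + route_path,
        data=json.dumps(body).encode("utf-8"),
        # The key is validated by Kong; no OpenAI credential is involved.
        headers={"Content-Type": "application/json", "apikey": kong_api_key},
        method="POST",
    )


kong_request = build_kong_request(
    "http://kong-data-plane.example.com",  # hypothetical Data Plane address
    "/bedrock-route",                      # hypothetical Kong route path
    "123456",
    "meta.llama3-70b-instruct-v1:0",
    "What is an API gateway?",
)
print(kong_request.get_method(), kong_request.full_url)
print(kong_request.data.decode("utf-8"))
```

The request body keeps the OpenAI shape; only the endpoint, the credential, and the model name change.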

Amazon EKS Pod Identity

The Konnect Data Plane Node, where the Kong AI Gateway runs, has to send requests to Amazon Bedrock on behalf of the Gateway consumer. In order to do it, we need to grant permissions to the Data Plane deployment to access the Amazon Bedrock API, more precisely the Converse API, used by the AI Gateway to interact with it.

For example, here's a request to Amazon Bedrock using the AWS CLI. Use "aws configure" first to set your Access and Secret Keys as well as the AWS region you want to use, then run the command.
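The same Converse call can also be sketched in Python with Boto3 instead of the AWS CLI. This is an illustrative equivalent, not the command used in this post; the prompt, the inference settings, and the helper names are assumptions, and the actual call requires AWS credentials and model access.

```python
def build_converse_kwargs(model_id: str, user_message: str) -> dict:
    """Build the arguments for the Bedrock Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_message}]}],
        # Illustrative inference settings, not values from this post.
        "inferenceConfig": {"maxTokens": 256, "temperature": 0.5},
    }


def converse(region: str, model_id: str, user_message: str) -> str:
    """Send the request to Bedrock and return the text of the first content block."""
    import boto3  # imported lazily so the payload helper works without Boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_kwargs(model_id, user_message))
    return response["output"]["message"]["content"][0]["text"]


converse_kwargs = build_converse_kwargs("meta.llama3-70b-instruct-v1:0", "What is an API gateway?")
print(converse_kwargs)
# With credentials configured:
# print(converse("us-east-2", "meta.llama3-70b-instruct-v1:0", "What is an API gateway?"))
```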

Long-term credentials are reasonable when consuming the Bedrock service with local CLI commands. However, for Amazon EKS deployments, the recommended approach is EKS Pod Identity instead of long-term credentials like AWS Access and Secret Keys. In a nutshell, EKS Pod Identity allows the Data Plane Pod's container to use the AWS SDK and send API requests to AWS services using AWS Identity and Access Management (IAM) permissions.

Amazon EKS Pod Identity associations provide the ability to manage credentials for your applications, similar to the way that Amazon EC2 instance profiles provide credentials to Amazon EC2 instances.

As another best practice, we recommend storing the Private Key and Digital Certificate pair used for the Konnect Control Plane and Data Plane connectivity in AWS Secrets Manager. The Data Plane deployment then refers to those secrets when it is installed.

Amazon Elastic Kubernetes Service (EKS) installation and preparation

Now, we're ready to get started with our Kong AI Gateway deployment. As the installation architecture defines, it will be running on an EKS Cluster.

In order to create the EKS Cluster, you can use eksctl, the official CLI for Amazon EKS, like this:

Note that the command creates an EKS Cluster, version 1.31, with a single node based on the g6.xlarge instance type, powered by NVIDIA GPUs. That is particularly interesting if you're planning to deploy and run LLMs locally in the EKS Cluster.

Cluster preparation

After the installation, we should prepare the Cluster to receive the other components:

Please, refer to the official documentation to learn more about the components and their installation processes.

Redis installation

As defined in the implementation architecture, Redis plays the Vector Database role. It's going to be deployed and running in a specific EKS namespace and exposed with an NLB.

For its deployment, we're going to use its Helm Charts. Start by adding its repo:

Expose the Redis Service with an ELB:

Get the ELB hostname with:

You can check Redis Server with a simple command:

Redis' dashboard is also available:

http://a7c63f0c9a740483987398a6c0b6e444-967754848.us-east-2.elb.amazonaws.com:8001

Kong Data Plane installation

The second component to be installed is the Kong AI Gateway. The process can be divided into two steps:

- Pod Identity configuration

- Kong Data Plane deployment

Pod Identity configuration

In this first step, we configure EKS Pod Identity describing which AWS Services the Data Plane Pods should be allowed to access. In our case, we need to consume Amazon Bedrock and AWS Secrets Manager.

IAM Policy

Pod Identity relies on IAM policies to check which AWS services can be consumed. Our policy should allow access to Amazon Bedrock actions so the Data Plane is able to send requests to the Bedrock APIs, more precisely the Converse and ConverseStream APIs. The Converse API requires permission for the InvokeModel action, while ConverseStream needs access to InvokeModelWithResponseStream.

Also, we're going to use AWS Secrets Manager to store the Private Key and Digital Certificate pair that the Konnect Control Plane and Data Plane use to communicate.

Considering all this, let's create the IAM policy with the following request:
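As a rough sketch, the policy document could be assembled like this. The two Bedrock actions come from the description above; the Secrets Manager action and the wildcard resources are assumptions made for illustration, and a real policy should be narrowed to the specific models and secrets you use.

```python
import json


def build_data_plane_policy() -> dict:
    """Build an IAM policy document for the Data Plane (illustrative sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Needed by the Converse and ConverseStream APIs respectively.
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": "*",  # assumption: restrict to specific model ARNs in practice
            },
            {
                "Effect": "Allow",
                # Assumption: read access to the stored certificate and key.
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": "*",  # assumption: restrict to specific secret ARNs in practice
            },
        ],
    }


policy_document = json.dumps(build_data_plane_policy(), indent=2)
print(policy_document)
```

The resulting JSON is what you would pass as the policy document when creating the IAM policy.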

Pod Identity Association

Pod Identity takes a Kubernetes Service Account to manage the permissions. So create the Kubernetes namespace for the Kong Data Plane deployment and a simple Service Account inside of it.

Now we're ready to create the Pod Identity Association. We use the same eksctl command to do it:

The command above is responsible for:

- IAM Role creation based on the IAM Policy we previously defined

- Associating the IAM Role to the existing Kubernetes Service Account

You can check the Pod Identity Association with:

Check the IAM Role and Policies attached with:

Kong Data Plane deployment

The Data Plane deployment comprises the following steps:

- Konnect subscription and Control Plane creation

- Create a Konnect Vault for AWS Secrets Manager

- Private Key and Digital Certificate pair creation

- Store the pair in AWS Secrets Manager

- Data Plane deployment

Konnect subscription and Control Plane creation

This fundamental step is required to get access to Konnect. Click on the Registration link and present your credentials. Or, if you already have a Konnect subscription, log in to it.

Any Konnect subscription has a "default" Control Plane defined. You can proceed using it or optionally create a new one. The following instructions are based on a new Control Plane. You can create a new one by clicking on the "Gateway Manager" menu option. Then click on "New Control Plane" and choose the Kong Gateway option. Inside the "Kong Gateway" page type "AI Gateway" for the name and leave the "Self-Managed Hybrid Instances" option set.

Create a Konnect Vault for AWS Secrets Manager

Now, inside the new "AI Gateway" Control Plane, click on the "Vaults" menu option and create a Vault referring to AWS Secrets Manager, where the Private Key and Digital Certificate pair is going to be stored. Inside the "New Vault" page, define "Ohio (us-east-2)" for Region and "aws-secrets" for Prefix. Click on "Save."

Private Key and Digital Certificate pair creation

Still inside the "AI Gateway" Control Plane, click on the "Data Plane Nodes" menu option and then the "New Data Plane Node" button.

Choose Kubernetes as your platform. Click on "Generate certificate", copy and save the Digital Certificate and Private Key as tls.crt and tls.key files as described in the instructions. Also copy and save the configuration parameters in a values.yaml file.

Store the Private Key and Digital Certificate pair in AWS Secrets Manager

Store the pair in AWS Secrets Manager with the following commands:

Data Plane deployment

Finally, the last step deploys the Data Plane. Take the values.yaml file and update it by adding the AWS Secrets Manager references and the Kubernetes Service Account used by the Pod Identity Association. The updated version should be something like this. Replace the cluster_* endpoints and server names with yours:

Basically, the changes are:

- Since the Private Key and Digital Certificate pair has been stored in AWS Secrets Manager, remove the "secretVolumes" section.

- Replace the "cluster_cert" and "cluster_cert_key" fields with Konnect Vault references to the AWS Secrets Manager secrets.

- Add the proxy annotation to request a public NLB for the Data Plane.

- Add the deployment section referring to the Kubernetes Service Account that has been used to create the Pod Identity Association.

Use the Helm command to deploy the Data Plane:

Checking the Data Plane

Use the Load Balancer created during the deployment:

You should get a response like this:

Now we can define the Kong Objects necessary to expose and control Bedrock, including Kong Gateway Service, Routes, and Plugins.

decK

With decK (declarations for Kong) you can manage Kong Konnect configuration and create Kong Objects in a declarative way. decK state files describe the configuration of Kong API Gateway. State files encapsulate the complete configuration of Kong in a declarative format, including services, routes, plugins, consumers, and other entities that define how requests are processed and routed through Kong. Please check the decK documentation to learn how to install it.

Konnect PAT

Once you have decK installed, you should ping Konnect to check that the connection is up. To do so, you need a Konnect Personal Access Token (PAT). To generate your PAT, go to the Konnect UI, click on your initials in the upper right corner of the Konnect home page, then select "Personal Access Tokens." Click on "+ Generate Token," name your PAT, set its expiration time, and be sure to copy and save it as an environment variable also named PAT. Konnect won't display your PAT again.

You can ping Konnect with:

Kong declarations for the RAG Application

Now, create a file named "kong_bedrock.yaml" with the following content:

The declaration defines multiple Kong Objects:

The declaration has been tagged as "rag-bedrock" so you can manage its objects without impacting any other ones you might have created previously. Also, note the declaration is saying it should be applied to the "AI Gateway" Konnect Control Plane.

You can submit the declaration with the following decK command:

Consume the Kong AI Gateway Route

You can now send a request consuming the Kong Route. Note we have injected the API Key with the expected name, "apikey", and with a value that corresponds to the Kong Consumer, "123456". If you change the value or the API Key name, or omit the key altogether, you get a 401 (Unauthorized) error.

To enable the other Plugins, you can set "enabled: true" in the declaration or use the Konnect UI.

LangChain

With all the components we need for our RAG application in place, it's time for the most exciting part of this blog post: the RAG application.

The minimalist app we're going to present focuses on the main steps of the RAG process. It's intended only for learning purposes and perhaps lab environments.

Basically, the application comprises two Python scripts, using LangChain, one of the main frameworks available today to write AI applications including LLMs and RAG. The scripts are the following:

- rag_redis_bedrock_langchain_embeddings.py: it implements the Data Preparation process with Redis and Bedrock's "amazon.titan-embed-text-v1" Embedding Model.

- rag_redis_bedrock_langchain_kong_ai_gateway.py: responsible for the Query time implementation combining Kong AI Gateway, Redis, and Bedrock.

From the RAG perspective, the application is based on an interview Marco Palladino, Kong's co-founder and CTO, gave discussing Kong AI Gateway and our current technology environment.

Data Preparation

The first thing the code does is download the transcript of the interview to a local file. Then it uses the LangChain Community's DirectoryLoader and TextLoader, as well as the CharacterTextSplitter, to load and break the original text into chunks.

Next, the code uses the BedrockEmbeddings and Redis packages, also provided by LangChain, to generate the embeddings for each chunk and store all of them in Redis Vector Database's collection named "docs-bedrock". Note we hit Redis through the ELB we provisioned during its deployment. Note also the script forces the index recreation.

Before running the code make sure you have a Python3 environment set and the following packages installed:

- requests

- langchain

- langchain-community

- langchain-aws

- langchain-redis

It's important to note that this Python script assumes you have your AWS credentials set locally in order to connect to Bedrock. Please refer to the Boto3 documentation to learn how to manage them.

You can run the script with:

After the execution, you can check the Redis database using its dashboard or with the following command. You should see 52 keys:

Python script code

Here's the code of the first Python script:

LangChain Chain

Before exploring the second Python script, responsible for the actual RAG Query, it's important to get a better understanding of what a LangChain Chain is. Here's a simple example including Kong AI Gateway and Amazon Bedrock. Note that the code does not implement RAG; it is restricted to exploring the Chain concept. The next section will evolve the example to include RAG.

The code requires the installation of the "langchain_openai" package.

The most important line of the code is the creation of the LangChain Chain: chain = prompt | llm.

A Chain, the main LangChain component, can be created with LCEL (LangChain Expression Language), a declarative language. In fact, a Chain is responsible for executing a sequence of steps, "chained" together and separated by the Pipe ("|") Operator. All steps of a Chain implement the Runnable LangChain Class.

In our case, the Chain takes the output of the prompt step and passes it to the llm step, which, as expected, sends the prompt to the LLM and gets the response.

The prompt object has been created with a {topic} template, so a value for topic must be supplied through the chain.invoke method.
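To build some intuition for how the pipe operator composes steps, here's a toy sketch in plain Python. It is not LangChain's implementation: the Step class and the fake model are hypothetical stand-ins that only mimic the idea of chaining objects that expose an invoke method.

```python
class Step:
    """Toy stand-in for a Runnable: wraps a function and supports the | operator."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # Chaining returns a new Step that feeds this step's output into the next one.
        return Step(lambda value: other.invoke(self.invoke(value)))


# The prompt step fills the {topic} placeholder from the dictionary passed to invoke.
prompt = Step(lambda inputs: "Tell me a short fact about {topic}.".format(**inputs))
# The fake model simply echoes what it received instead of calling an LLM.
fake_llm = Step(lambda text: f"[model response to: {text}]")

chain = prompt | fake_llm
print(chain.invoke({"topic": "API gateways"}))
```

The dictionary passed to invoke plays the same role as the input of chain.invoke in the real Chain: it carries the value for the template's placeholder.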

On the other hand, the llm object actually refers to Kong AI Gateway. As we stated before, Kong AI Gateway supports the OpenAI API specification, meaning that the consumer, in our case the LangChain code, should interact with the Gateway through the standard LangChain ChatOpenAI chat model class.

Here's the line:

Note that the class refers to the Kong AI Gateway Route. We're asking the Gateway to consume the Bedrock model meta.llama3-70b-instruct-v1:0. In addition, since the Route has the Key Auth plugin enabled, we've added the API Key using the default_headers parameter. Finally, the standard and required api_key parameter must be passed, but it's ignored by the Gateway.
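A sketch of that construction, under a few assumptions, could look like this. The route URL is a hypothetical placeholder and the helper names are illustrative; the langchain_openai import is deferred so the argument helper can be inspected without the package installed.

```python
def kong_chat_kwargs(route_url: str, model: str, kong_api_key: str) -> dict:
    """Collect the ChatOpenAI arguments that point the client at a Kong route."""
    return {
        "base_url": route_url,
        "model": model,
        "api_key": "dummy",  # required by the client, ignored by the Gateway
        "default_headers": {"apikey": kong_api_key},  # consumed by the Key Auth plugin
    }


def build_llm(route_url: str, model: str, kong_api_key: str):
    """Create the chat model; requires the langchain_openai package."""
    from langchain_openai import ChatOpenAI  # imported lazily

    return ChatOpenAI(**kong_chat_kwargs(route_url, model, kong_api_key))


llm_kwargs = kong_chat_kwargs(
    "http://kong-data-plane.example.com/bedrock-route",  # hypothetical route URL
    "meta.llama3-70b-instruct-v1:0",
    "123456",
)
print(llm_kwargs)
# llm = build_llm(**{"route_url": llm_kwargs["base_url"], "model": llm_kwargs["model"], "kong_api_key": "123456"})
```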

RAG Query

Now, let's evolve the script to add RAG. This time, LangChain Chain will be updated to include the Vector Database.

First, remember that, in a RAG application, the Query should combine a Prompt and contextual and relevant data, related to the Prompt, coming from the Vector Database. Something like this:

Considering this flow, we should process both sources in parallel. That's what LangChain's RunnableParallel class does. So, the new Chain would look like this:

And the code snippet with the new Chain should be like this. Fundamentally, the Chain has included two new steps: one before and another after the original prompt and llm steps.

So let's review all of them. However, before jumping to the Chain, we have to describe a bit how it is going to hit the Redis Vector Database to get the Relevant Data coming from it.

retriever

The first line gets the reference to the Redis index we created in the first Python script.

The second line calls the "as_retriever" method of the Redis class so we can start sending queries to Redis with a RedisVectorStoreRetriever object. For example, here's the "retriever" object construction set with "similarity distance threshold" search type:

Check this how-to guide to learn more about Retrievers.

New LangChain Chain

Let's check the steps of the new Chain now.

retriever_prompt_setup

The line calls the RunnableParallel class which launches, in parallel, one task per parameter:

"context": refers to the retriever which is, in our case, the Redis Vector Database.

"question": uses the RunnablePassthrough class which takes the Chain's input and passes it with no updates. The RunnablePassthrough() call basically says the "question" parameter is not defined yet but it will be provided eventually. Check the following how-to guide to learn more about the RunnablePassthrough class

Both RunnableParallel and RunnablePassthrough classes are subclasses of the fundamental Runnable class.

The Chain's flow will proceed only when the two tasks have been completed. The next step, "prompt", will receive both data as follows:
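The fan-out behavior can be illustrated with a small, self-contained sketch. It only mimics the idea with a thread pool and is not LangChain's code; the fake retriever and its returned document are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(branches: dict, value):
    """Run every branch on the same input concurrently and collect results by key."""
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(func, value) for name, func in branches.items()}
        # result() blocks, so the dictionary is complete only when all branches finish.
        return {name: future.result() for name, future in futures.items()}


def fake_retriever(question: str) -> list[str]:
    """Stand-in for the Redis retriever: always returns one canned document."""
    return ["Kong AI Gateway normalizes the consumption of LLM infrastructure."]


def passthrough(value):
    """Stand-in for RunnablePassthrough: returns its input unchanged."""
    return value


result = run_parallel(
    {"context": fake_retriever, "question": passthrough},
    "What does Kong AI Gateway do?",
)
print(result)
```

The resulting dictionary, with a "context" key and a "question" key, is the shape the next step consumes.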

prompt

Considering that it will receive the output of the "retriever_prompt_setup" step, it should be modified. Here it is:

And the template needs to refer to both context and question:
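As an illustration, a template with both placeholders could be rendered as shown here. The wording of the template and the helper name are assumptions, not the text used by the original script.

```python
TEMPLATE = """Answer the question based only on the following context:
{context}

Question: {question}
"""


def render_prompt(context_docs: list[str], question: str) -> str:
    """Fill the template with the retrieved documents and the user's question."""
    return TEMPLATE.format(context="\n".join(context_docs), question=question)


rendered = render_prompt(["doc A", "doc B"], "What is Kong AI Gateway?")
print(rendered)
```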

llm

The "llm" step remains exactly the same. It calls the Kong AI Gateway route which is responsible for routing the request to Amazon Bedrock:

StrOutputParser()

The StrOutputParser class converts the output coming from the "llm" step into a plain, readable string.

Invoking the Chain

We're now ready to invoke the Chain. Here's one example:

Of course, the response will be totally contextualized considering the data we have in our Vector Database.

LangChain provides a nice tutorial on how to build a RAG application.

Kong AI Gateway Plugins

Once we have the RAG Application done, it'd be interesting to start enabling the AI Plugins we have created.

Kong AI Prompt Decorator plugin

For example, besides the Key Auth plugin, you can enable the AI Prompt Decorator, configured with decK, to see the response in Brazilian Portuguese.

You can use decK again or, if you prefer, go to the Konnect UI and look for the Kong Route's Plugins page. Use the toggle button to enable the Plugin.

Kong AI Rate Limiting Advanced plugin

You can also enable the AI Rate Limiting Advanced plugin we have created for the user. Notice that, unlike the AI Prompt Decorator plugin, this plugin has been applied to the existing Kong Consumer created by the decK declaration. If you create another Kong Consumer (and a different Credential), the AI Rate Limiting Advanced plugin will not be enforced for it.

Also note that the AI Rate Limiting Advanced defines policies based on the number of tokens returned by the LLM provider, not the number of requests as we usually have for regular rate limiting plugins.
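To illustrate the difference between counting tokens and counting requests, here's a toy fixed-window limiter. It is a conceptual sketch only and does not reflect how the plugin is implemented; the class name, limit, and window are assumptions.

```python
import time


class TokenWindowLimiter:
    """Toy fixed-window limiter that budgets LLM tokens instead of requests."""

    def __init__(self, token_limit: int, window_seconds: float, clock=time.monotonic):
        self.token_limit = token_limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.window_start = clock()
        self.used = 0

    def _roll_window(self) -> None:
        # Start a fresh budget once the current window has elapsed.
        now = self.clock()
        if now - self.window_start >= self.window_seconds:
            self.window_start = now
            self.used = 0

    def allow_request(self) -> bool:
        """A request is allowed while the token budget is not exhausted."""
        self._roll_window()
        return self.used < self.token_limit

    def record_response(self, tokens: int) -> None:
        """Charge the tokens reported by the LLM provider for a response."""
        self._roll_window()
        self.used += tokens


limiter = TokenWindowLimiter(token_limit=100, window_seconds=60)
print(limiter.allow_request())  # budget untouched
limiter.record_response(120)    # one large response consumes the whole budget
print(limiter.allow_request())
```

A single large response can exhaust the budget, which is why a token-based policy behaves differently from a plain request counter.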

Python script code

Here's the code of the second Python script:

Conclusion

This blog post has presented a basic RAG Application using Kong AI Gateway, LangChain, Amazon Bedrock, and Redis as the Vector Database. It's totally feasible to implement advanced RAG apps with query transformation, multiple data sources, multiple retrieval stages, RAG Agents, etc. Moreover, Kong AI Gateway provides other plugins to enrich the relationship with the LLM providers, including Semantic Cache, Semantic Routing, Request and Response Transformation, etc.

Also, it's important to keep in mind that, just like we did with the Key Auth plugin, you can continue combining other API Gateway plugins with your AI-based use cases, such as using the OIDC plugin to secure your Foundation Models with Amazon Cognito, using the Prometheus plugin to monitor your AI Gateway with Amazon Managed Prometheus and Grafana, and so on.

Finally, the architectural flexibility provided natively by Konnect and Kong API Gateway allows us to deploy the Data Planes on a variety of platforms, including Amazon EC2 VMs, Amazon ECS, and Kong Dedicated Cloud Gateway, Kong's SaaS service for Data Planes running in AWS.

You can discover all the features available on the Kong AI Gateway product page, or you can check the Kong and AWS landing page at https://konghq.com/partners/aws to learn more.