When we build software, it's critical that we test it and roll it out in a controlled manner. To that end, we rely on the available tools and best practices to verify that the software works as intended. We conduct code reviews, run unit, integration, and functional tests, and then do it all again in a staging or QA environment that mimics production as closely as possible. But these are just the basics. Ultimately, the proof of the pudding is in the eating… so let’s head to production.

When going to production with new releases, there are two methods of reducing deployment risk that, though proven, are often underutilized: Canary Releases and Blue/Green Deployments. Typically, we only use these methods after all other QA has passed, because both work directly with production traffic.

Now, let’s take a closer look at how Kong can help us.

Canary Releases

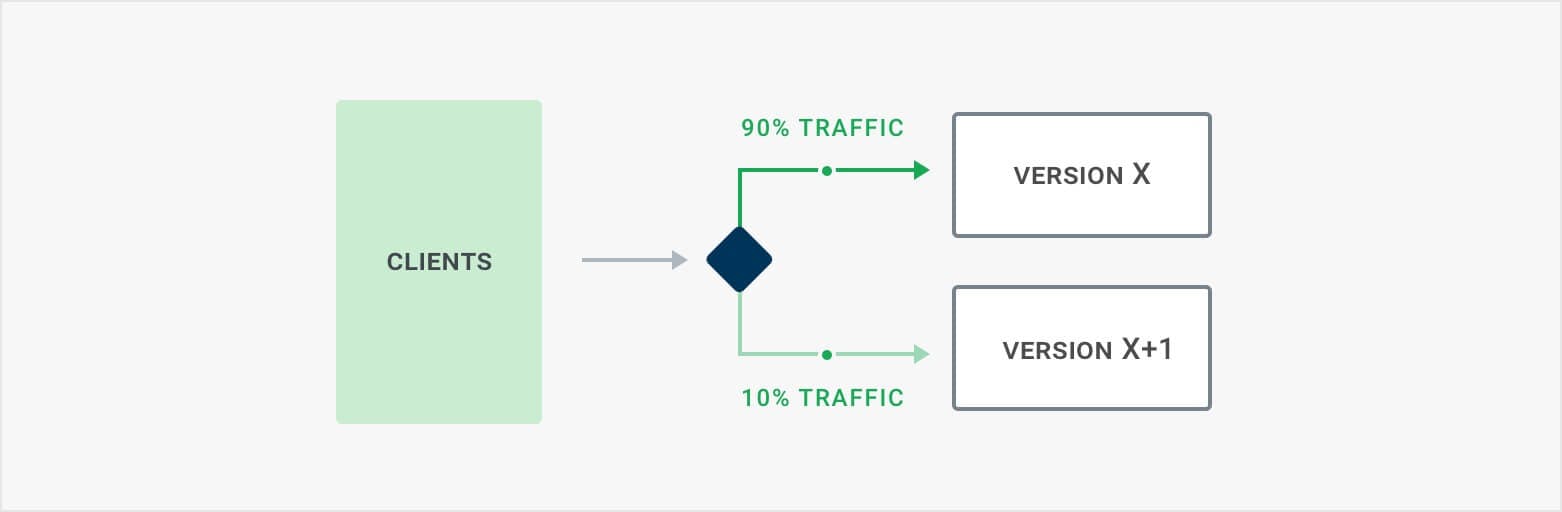

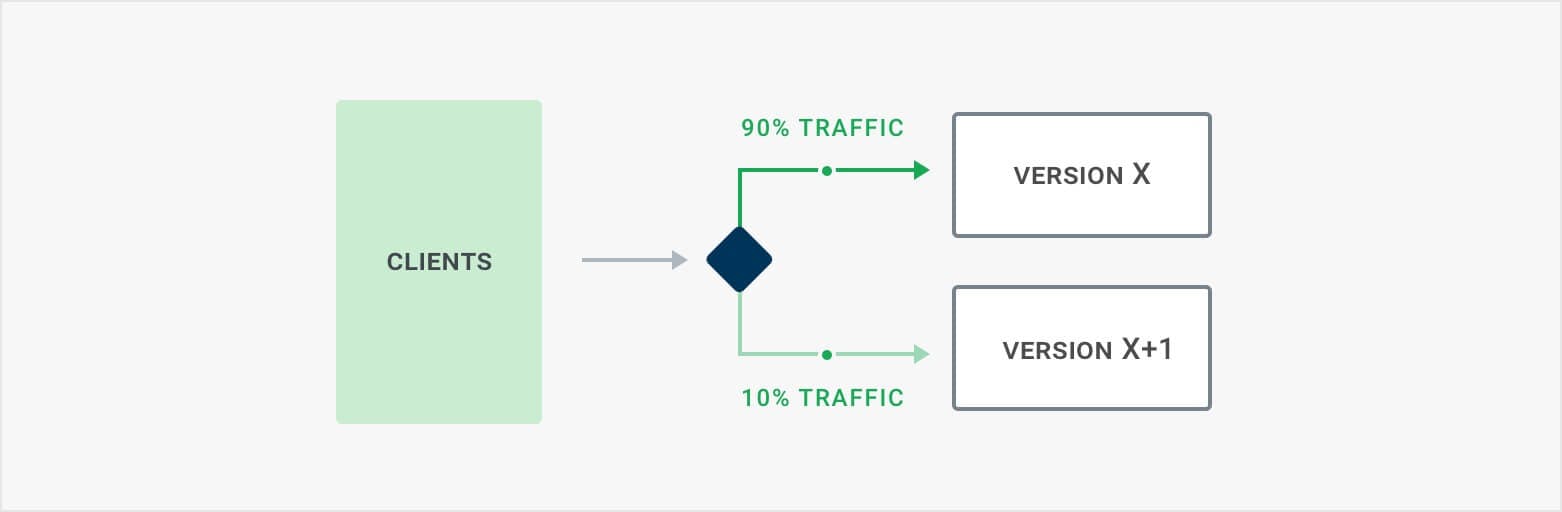

A canary release exposes a limited amount of production traffic to the new version we are deploying. For example, we would route 2% of all traffic to the new service to test it with live production data. By doing this, we can check our release for unexpected regressions, such as broken application integrations or increased resource usage (CPU, memory, etc.), that didn’t show up in our test environments.

With Kong, we can easily create a Canary release by using Kong’s load balancer features or the Canary Release plugin (Kong Enterprise only).

With Kong Community Edition (CE) we can use Kong’s load balancer (and its Upstream entity) to help us out. Let’s say we have the following configuration:

- An Upstream my.service, containing 1 Target 1.2.3.4 with weight 100, running the current production instance

- A Service that directs traffic to http://my.service/

By adding additional Targets with a low weight to the Upstream, we can now divert traffic to another instance. Let’s say we have our new version deployed on a system with IP address 5.6.7.8. If we then add that Target with a weight of 1, it should get approximately 1% of all traffic (1 out of a total weight of 101). If that service works as expected, we can increase its weight (or decrease the weight of the existing one) to send it more traffic. Once we’re satisfied, we can set the weight of the old version to 0, and we’ll be completely switched to the new version.

When things don’t go as expected, we can set the new Target's weight to 0 to roll back and resume sending all traffic to our existing production nodes.
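The weight arithmetic and the Admin API call for adding the canary Target can be sketched as follows. This is a minimal sketch under assumptions that are not in the text above: the Admin API is reachable at http://localhost:8001, and both instances listen on port 80. How an existing Target's weight is changed later (for example, to 0 for a rollback) differs between Kong versions, so check the Admin API reference for your version.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumption: default Kong Admin API address


def traffic_share(weights):
    """Return each Target's expected fraction of requests: weight / sum of weights."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one Target needs a non-zero weight")
    return {target: weight / total for target, weight in weights.items()}


def target_request(upstream, target, weight):
    """Build (without sending) a request that adds a Target to an Upstream."""
    body = json.dumps({"target": target, "weight": weight}).encode()
    return urllib.request.Request(
        f"{ADMIN_URL}/upstreams/{upstream}/targets",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Canary: the new version gets weight 1 next to the existing weight 100.
# Port 80 is an assumption; the article only gives the IP addresses.
canary = target_request("my.service", "5.6.7.8:80", 1)
shares = traffic_share({"1.2.3.4:80": 100, "5.6.7.8:80": 1})
print(f"{shares['5.6.7.8:80']:.2%}")  # 1/101, prints 0.99%

# To actually apply the change: urllib.request.urlopen(canary)
```

Keeping the request construction separate from sending it makes it easy to review, or log, exactly what will change before touching production.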

With the default balancer settings, requests are spread across the Targets without regard to who sent them, because the default balancer scheme is weighted round robin. As a result, consumers of your API may “flip-flop” between the new and old versions. If we configure the balancer to use consistent hashing instead, we can make sure that the same consumer always ends up on the same back-end and prevent this flip-flopping.
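To make the idea concrete, here is a small sketch. The `hash_on` and `hash_fallback` settings are Upstream fields in Kong versions that support hashing; verify them against your version's documentation. The `pick_backend` function is a toy model we made up for illustration, not Kong's actual balancer algorithm: it only shows why hashing on a consumer identity gives a stable assignment while still respecting the weights.

```python
import hashlib
import json

# Upstream fields that switch the balancer from round robin to hashing
# (assumption: your Kong version exposes these on the Upstream entity).
sticky_settings = {"hash_on": "consumer", "hash_fallback": "ip"}
print(json.dumps(sticky_settings))


def pick_backend(consumer_id, weights):
    """Toy model, not Kong's algorithm: hash the consumer onto a weighted slot."""
    total = sum(weights.values())
    digest = hashlib.sha256(consumer_id.encode()).digest()
    slot = int.from_bytes(digest[:8], "big") % total
    for backend, weight in weights.items():
        if slot < weight:
            return backend
        slot -= weight


weights = {"1.2.3.4": 100, "5.6.7.8": 1}
first = pick_backend("consumer-42", weights)  # "consumer-42" is a made-up ID

# The same consumer maps to the same back-end on every request.
assert all(pick_backend("consumer-42", weights) == first for _ in range(1000))
```

Note that in this toy model, changing the weights can move some consumers to a different back-end; that is expected, since shifting traffic is the whole point of increasing the canary's weight.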

It’s important to note that this example uses a single Target for each version of the service. We can add more Targets, but manual management becomes progressively more difficult. Fortunately, Kong's Canary Release plugin solves this challenge.

Canary with Kong Enterprise

With the Kong Enterprise-only Canary Release plugin, executing Canary Releases is even easier. In the CE approach above, we had to manually manage the Targets of the Upstream entity to route the traffic to the new version. With the Canary plugin, we can set an alternate destination for the traffic, identified by a hostname (or IP address), a port, and a URI. In this case, it is easier not to add Targets, but rather to create another load balancer (Upstream) dedicated to the new version of your Service.

Now, let’s say we have the same configuration as above:

- An Upstream my.v1.service, containing 1 Target 1.2.3.4 with weight 100, running the current production instance

- A Service that directs traffic to http://my.v1.service/

The difference here is that we now have a version in the Upstream name. For the Canary Release we'll add another upstream like this:

- An Upstream my.v2.service, containing 1 Target 5.6.7.8 with weight 100, running the new version

- A Canary Release plugin configured on the Service that directs 1% of traffic to host my.v2.service

Now we can have as many Targets in each Upstream as we want, and only need to control one setting in the Canary Release plugin to determine how much traffic is redirected. This makes it much easier to manage the release, especially in larger deployments.
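As a rough sketch, enabling the plugin for this setup could look like the request below. Several things here are assumptions rather than facts from this article: the Admin API address, the Service name my-service, and the plugin's field names (`upstream_host`, `percentage`), which should be checked against the Canary Release plugin documentation for your Kong Enterprise version.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumption: default Kong Admin API address


def canary_plugin_request(service, canary_host, percentage):
    """Build (without sending) a request that enables the canary plugin on a Service."""
    if not 0 <= percentage <= 100:
        raise ValueError("percentage must be between 0 and 100")
    body = {
        "name": "canary",
        # Field names are assumptions; verify them in the plugin docs.
        "config": {"upstream_host": canary_host, "percentage": percentage},
    }
    return urllib.request.Request(
        f"{ADMIN_URL}/services/{service}/plugins",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "my-service" is a hypothetical Service name; the article does not name it.
request = canary_plugin_request("my-service", "my.v2.service", 1)
print(request.get_method(), request.full_url)

# To actually enable the plugin: urllib.request.urlopen(request)
```

With this approach, widening the release means changing a single number on the plugin rather than adjusting the weights of individual Targets.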

Besides explicitly setting a percentage of traffic to route to the new destination, the Canary Release plugin also supports timed (progressing) releases and releases based on groups of consumers. The group feature allows a gradual rollout to groups of people. For example, we can use the group feature to first add testers, then employees, and then everyone. The group feature is/will be available with Kong Enterprise 0.33.

Benefits of the Kong EE Canary Release plugin:

- No manual tracking of Targets

- A canary can also target a URI, instead of only an IP/port combination

- Use versioned Upstreams to make it easier to manage the system

- Release based on groups

Blue/Green Deployments

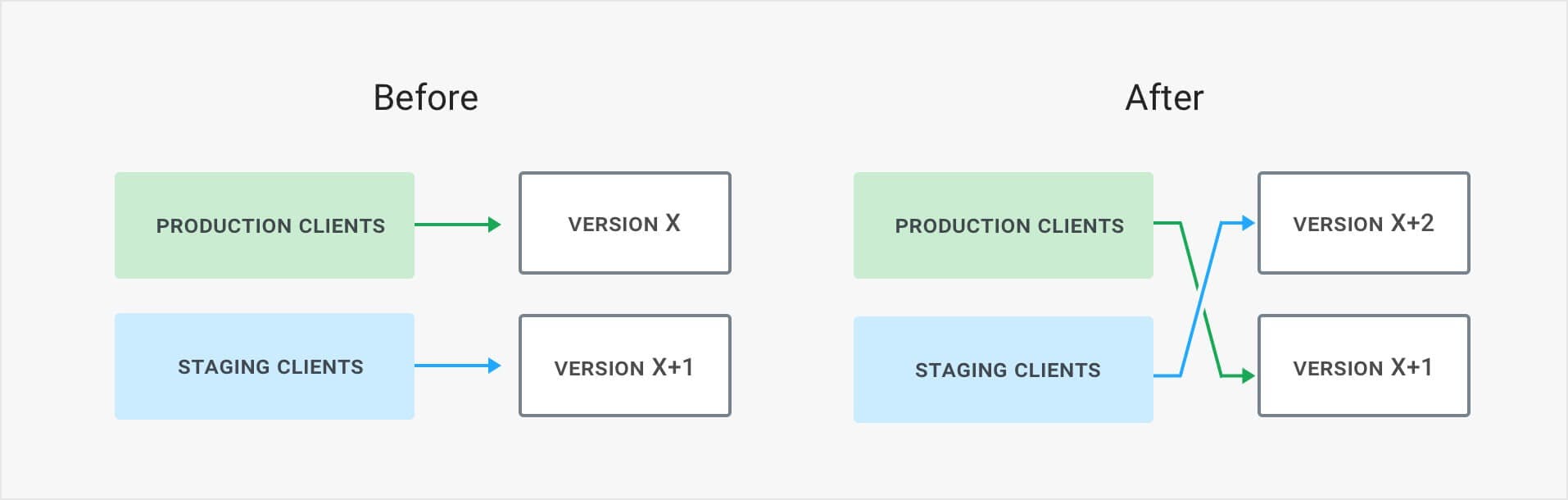

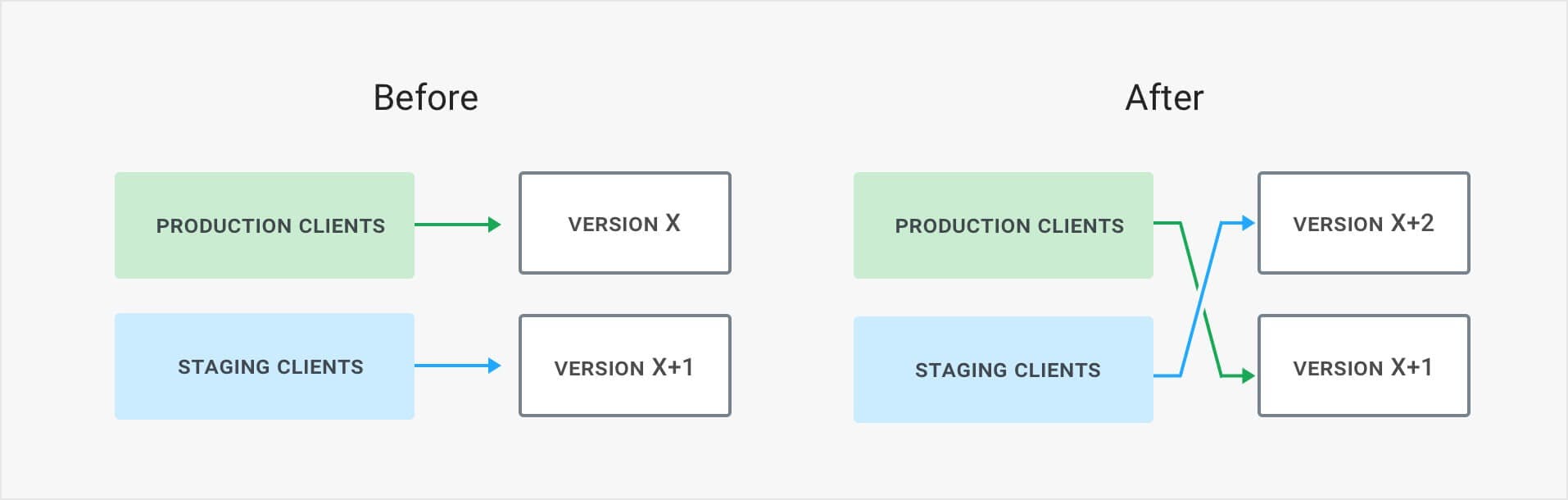

Where a Canary Release can still be considered testing, a Blue/Green release is really a release: an all-or-nothing switch. Blue/Green releases work by having two identical environments, one Blue and the other Green. At any given time, one of them is staging and the other is running production. When a release is ready in staging, the roles of the two environments switch. Now our staging becomes production, and our production becomes staging.

This simple setup is very powerful. It allows us to test everything in staging, because staging is identical to production. Even after the switch, the new staging environment (the former production) can hang around for a bit in case something does not work out as planned and we need to quickly roll back.

With Kong, doing a Blue/Green release is simple. Just create two Upstreams and, when you want to switch traffic, execute a PATCH request to update the Service to point to the other Upstream.
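The switch itself can be sketched as a single PATCH that changes the Service's host. The names used here (a Service called my-service and Upstreams called blue.service and green.service) are hypothetical, as is the Admin API address.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumption: default Kong Admin API address


def switch_request(service, upstream):
    """Build (without sending) a PATCH that points a Service at another Upstream."""
    body = json.dumps({"host": upstream}).encode()
    return urllib.request.Request(
        f"{ADMIN_URL}/services/{service}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


# Hypothetical names: production currently runs on blue.service.
go_green = switch_request("my-service", "green.service")
roll_back = switch_request("my-service", "blue.service")
print(go_green.get_method(), go_green.full_url)

# To perform the switch: urllib.request.urlopen(go_green)
# If something goes wrong: urllib.request.urlopen(roll_back)
```

Because the rollback is the same call with the other Upstream name, it is worth preparing it before the switch rather than after.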

From Here…

The high-level view always seems easy, but the reality is always more challenging. From an application perspective, there are several caveats we need to consider. How do we handle long-running connections and transactions? How do we deal with updated database schemas while (at least temporarily) running the two versions in parallel?

Using Kong will not make all issues magically disappear, but it will provide you with powerful tools to reduce risk and simplify the release process.

Happy releasing!