Containerization and orchestration are becoming increasingly popular. According to a recent survey conducted by Market Watch, the global container market will exceed $5 billion by 2026, up from under $1 billion in 2019. These figures show how quickly the industry is moving toward containers and orchestration. One example of this shift is moving applications from virtual machines (VMs) to Kubernetes.

For companies like Papa John's, Kong Gateway supports connectivity across any infrastructure. With Kong, you can keep your apps connected throughout a transition from VM to Kubernetes. After moving the app to Kubernetes, you can manage the Kubernetes ingress with the Kong Ingress Controller. The Kong Ingress Controller delivers API management, ingress security and service mesh configurability.

In this tutorial, you'll walk through a common scenario: moving an app from a VM to a container and running the container in Kubernetes. We'll cover how to containerize your app, no matter which platform hosts it, even if it's on-prem.

Building the Application on VM

For this scenario, you will build a small server using Python's Flask framework. When this server receives a request, it will return a response that says, “Hello World!” The focus of this post isn't on Python or the application. We'll be using it as an example of how to make an existing VM-based app work in a container.

First, within your project's directory, create a subfolder called app. Inside this subfolder, create a file called app.py which:

- Imports the Flask library

- Initializes the Flask constructor and stores it in a variable called app

- Configures a single route that maps root ("/") to a function called home()

- Defines home() to respond with a greeting

- Calls run() to start the server

The server code looks like this:
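A minimal sketch of app.py that follows the five steps listed above. The greeting text comes from the description. Binding to host 0.0.0.0 and naming port 5000 explicitly are assumptions, made so that the server is reachable from outside a container.

```python
from flask import Flask

# Initialize the Flask application and store it in a variable called app
app = Flask(__name__)


@app.route("/")
def home():
    """Respond to requests for the root path with a greeting."""
    return "Hello World!"


if __name__ == "__main__":
    # Assumption: listen on all interfaces so the server can be reached
    # from outside a container; 5000 is also Flask's default port.
    app.run(host="0.0.0.0", port=5000)
```

Running python app.py from inside the app subfolder should start the server on port 5000.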

Your container will also need to install the server's dependencies, which in this case is just Flask. To do that, we list Flask as a dependency in a file called requirements.txt, which lives in the same directory as app.py:
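Since Flask is the only dependency, the file can be a single line. The sketch below leaves the version unpinned, which is an assumption; pinning a specific version would make builds more reproducible.

```text
Flask
```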

Creating a Dockerfile and Docker Image for the Application

Docker is one of the most popular tools for building and running containers. At the heart of a Docker build is something called a Dockerfile. A Dockerfile is a list of instructions that define how your container should be initialized, configured and run. Docker builds a container image from the Dockerfile.

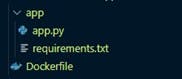

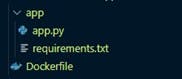

First, create an empty text file in your project directory called Dockerfile—just like that, no extension. The folder structure should look like the screenshot below:

Every Dockerfile starts with a line that declares a base image. Base images come pre-installed with various niceties, such as a language runtime and its package manager. You can probably find an image to begin with on Docker Hub, regardless of your programming language or preferred operating system.

The Dockerfile to containerize the Flask server will use the latest version of Python. The line to define that looks like this:

That's all we need for the initialization step; we can move on to the configuration. Next, we want to create a directory where our server files can go. We also need to install the dependencies from requirements.txt, just as we did on our local machine. Adding the following lines will perform these steps:

In this Dockerfile, we copy the files from the app subfolder and place them in a directory in the Docker image called "build." We then install the dependencies using pip, which is Python's package manager.

Last, we need to run the server. We can do so by ending the file with the following lines:
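Taken together, the three steps described above (base image, configuration, run) might produce a Dockerfile like the sketch below. It is an illustration rather than an exact listing: the python:latest tag, the /build path, the EXPOSE line and the python app.py start command are assumptions inferred from the description.

```dockerfile
# Initialization: start from the latest official Python base image
FROM python:latest

# Configuration: create the "build" directory and make it the working directory
WORKDIR /build

# Copy the contents of the app subfolder (app.py and requirements.txt) into it
COPY app/ /build/

# Install the server's dependencies with pip
RUN pip install --no-cache-dir -r requirements.txt

# Assumption: document the port the Flask server listens on
EXPOSE 5000

# Run: start the server when the container launches
CMD ["python", "app.py"]
```

WORKDIR creates the directory if it does not already exist, so no separate mkdir step is needed.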

Building the Docker Image

To build the Docker image, open a terminal to the directory that contains the “app” directory and the Dockerfile. Then run docker build -t flaskwebapi . to build the Docker image (the trailing dot tells Docker to use the current directory as the build context).

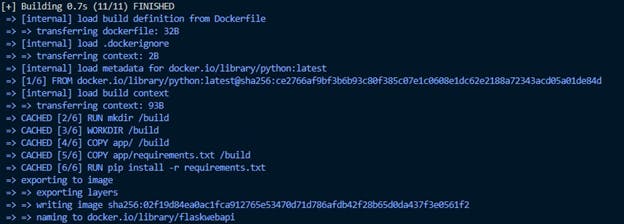

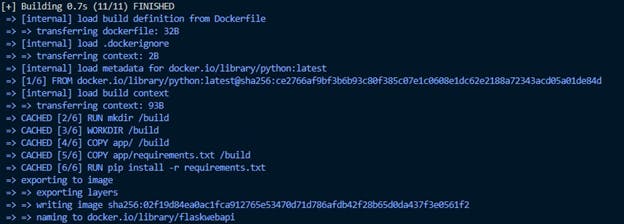

The -t option provides a name, which we can use to refer to the image in the future. After you run the build command, you should see output in the terminal similar to the screenshot below.

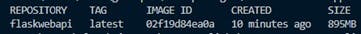

To confirm that the Docker image was created successfully, run docker images.

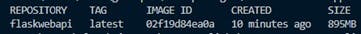

The output should be a table similar to the one below, showing information about the image like name and creation date:

Finally, let's run the image locally to verify that the server works. In the terminal, run docker run -p 5000:5000 flaskwebapi.

Doing so starts a container from the flaskwebapi Docker image we just created and publishes its port 5000 so that it is accessible from the host machine. When the container is running, open a browser window to http://localhost:5000/ (the root route that app.py defines), and you should see a classic “Hello World!”
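The browser check can also be scripted. The sketch below uses only Python's standard library. The function name fetch_greeting is hypothetical, and the URL is passed in as an argument so that you can use whichever address you opened in the browser.

```python
import urllib.request
from typing import Tuple


def fetch_greeting(url: str, timeout: float = 5.0) -> Tuple[int, str]:
    """Send a GET request and return (HTTP status code, decoded body)."""
    # Ignore any configured HTTP proxy, since the target is a local container
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as response:
        body = response.read().decode("utf-8")
        return response.status, body
```

With the container running, calling fetch_greeting with the same URL you opened in the browser should return a 200 status along with the greeting text.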

Getting the Application Onto a Kubernetes Cluster

Now that we've created the Docker image and confirmed that the server works, it's time to start thinking about getting the application running on Kubernetes.

The first thing you will need to do is create a Kubernetes cluster. Many platforms offer managed Kubernetes, such as Amazon's Elastic Kubernetes Service (EKS) or Google Kubernetes Engine (GKE). These services provide all sorts of configurability on region, resource scaling and more. However, these environments are a bit advanced for this initial attempt at containerizing an app. For this post, we'll use minikube, which runs a Kubernetes cluster locally.

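Installing minikube is a breeze. When it's finished, type minikube start in the terminal. It'll take some time to download and set up Kubernetes, so feel free to hydrate in the meantime. At the end of it all, minikube should report that kubectl is now configured to use the minikube cluster. Once the cluster is running, point your terminal's Docker client at minikube's Docker daemon by running eval $(minikube docker-env). This command needs a running cluster, which is why it comes after minikube start.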

Now, all we should need to do is deploy our image to minikube.

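However, before doing that, we need to rebuild our image. Why? Well, we built our image with the Docker daemon on our host machine, before minikube was installed and configured. minikube can only use local images that exist in its own Docker daemon, which is the one eval $(minikube docker-env) points your terminal at. So, in that same terminal, rebuild the image with the same docker build command as before.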

There's one more addition we need to make. Every resource in Kubernetes requires a manifest. A deployment counts as a resource because it describes how an image should run. The manifest has a thoroughly documented set of key-value pairs. We won't cover this here today. In this tutorial, we can just copy and paste the one provided below, and minikube will create a deployment:
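A minimal sketch of a deployment manifest for the image built earlier. The resource name, the app label and the replica count are illustrative assumptions. The imagePullPolicy: Never setting is also an assumption: it tells Kubernetes to use the locally built image instead of trying to pull flaskwebapi from a remote registry.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flaskwebapi          # hypothetical name, matching the image
  labels:
    app: flaskwebapi
spec:
  replicas: 1                # a single pod is enough for a local test
  selector:
    matchLabels:
      app: flaskwebapi
  template:
    metadata:
      labels:
        app: flaskwebapi
    spec:
      containers:
        - name: flaskwebapi
          image: flaskwebapi       # the image built inside minikube's Docker daemon
          imagePullPolicy: Never   # assumption: never pull, use the local image
          ports:
            - containerPort: 5000  # port the Flask server listens on
```

Saved under a filename such as deployment.yaml (a hypothetical name), the manifest can be applied with kubectl apply -f deployment.yaml.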

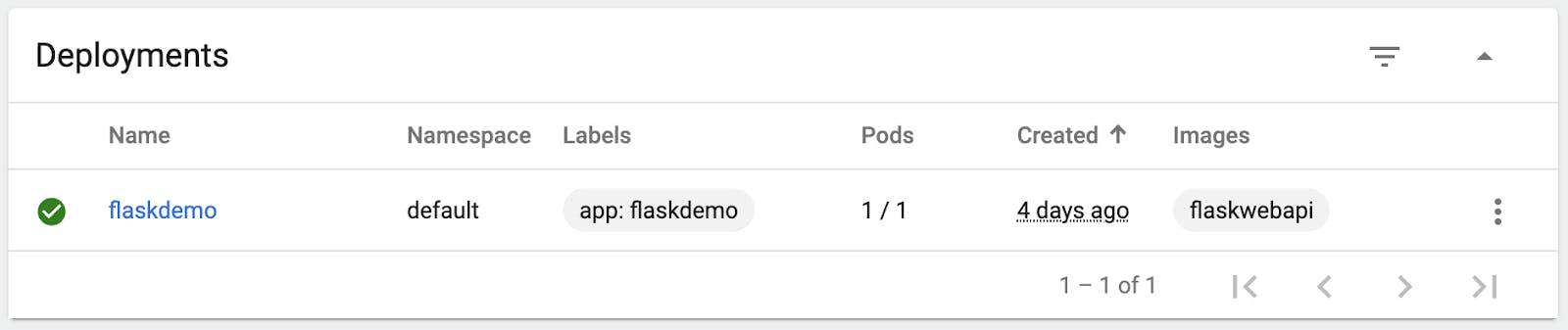

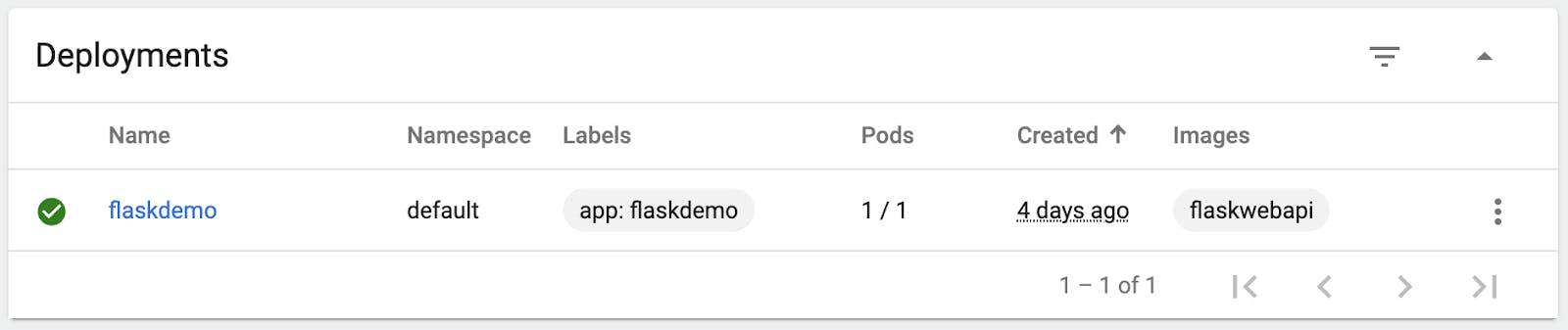

Did it work? minikube comes with a dashboard to show you how your container fleet is performing. Of course, it's a bit much for this little app, but it can be helpful to get acquainted with just how much Kubernetes can do. Run minikube dashboard, and check out the accompanying metrics.

Setting Up the Kong Ingress Controller

With the Flask web app now deployed to minikube, we can continue exploring more Kubernetes best practices locally. That way, we’ll prepare ourselves for when we need to move from VM to Kubernetes in production.

Our next exploration will involve security via ingress. Ingress is like a souped-up firewall for inbound traffic: it allows you to control precisely which external HTTP and HTTPS calls are allowed to reach the services in your cluster.

Configuring Kubernetes networking, including setting up an ingress controller, is relatively low-level work. Tools like Kong exist to make managing it much easier.

To get Kong's Ingress Controller running on minikube, we'll need to deploy it to our cluster, just as we did with our image. kubectl lets you create deployments from manifests hosted on the web, which simplifies the deployment process. Run the following command locally to get started:

Let's verify that this all went through:

You should see some metadata, including names, ports and IP addresses like this:

The connection information is specific to the cluster itself. If your external IP is pending, as it is here, that means we need to expose it to our local machine so that we can connect to the ingress service. We can do that with the tunnel (https://minikube.sigs.k8s.io/docs/handbook/accessing/#using-minikube-tunnel) command. In a separate terminal window, run minikube tunnel.

Finally, we can get the hostname and port to connect to this ingress controller using the service (https://minikube.sigs.k8s.io/docs/commands/service/) command:

You should get back a URL like http://127.0.0.1:64035/. The Kong Ingress Controller is now set up and ready to use with your environment.

The final thing to do is create an ingress configuration for our Flask API. Much like with our deployment, we need to define a manifest here. In our example, we'll just expose the Flask server and make it available for external requests:
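A minimal sketch of such a configuration. An Ingress routes to a Service, so the sketch assumes a Service named flaskwebapi that selects pods labeled app: flaskwebapi and includes one for completeness. All resource names, the kong ingress class and the /hello path are assumptions. The konghq.com/strip-path annotation asks Kong to remove the matched /hello prefix before forwarding, so the request arrives at the server's root route.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: flaskwebapi            # hypothetical Service in front of the Flask pods
spec:
  selector:
    app: flaskwebapi           # assumed pod label
  ports:
    - port: 5000
      targetPort: 5000
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: flaskwebapi-ingress    # hypothetical name
  annotations:
    konghq.com/strip-path: "true"   # drop /hello before proxying to the backend
spec:
  ingressClassName: kong       # assumption: the class registered by the Kong Ingress Controller
  rules:
    - http:
        paths:
          - path: /hello
            pathType: Prefix
            backend:
              service:
                name: flaskwebapi
                port:
                  number: 5000
```

As with the deployment, the file can be applied with kubectl apply -f followed by its filename.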

If you call curl http://127.0.0.1:<port>/hello, you should see it return "Hello World!"

The ingress manifest is the final arbiter of the HTTP traffic that enters your Kubernetes cluster. The rules key, in particular, defines the permitted hosts and paths. It also defines which backend service should handle each request. This is handy if you designed your app as a set of microservices, but you want to expose a single gateway to clients outside the network.

The Path to Becoming Kubernetes Native

Getting an application to run is rarely an easy task, and deploying it onto a platform like Kubernetes for the first time can seem like a lot of effort. After you've gone through the process several times, though, you'll find that the sequence generally remains the same, whether you're testing Kubernetes locally, on bare metal or in the cloud.

After moving from VM to Kubernetes, managing your Kubernetes environment comes with its own challenges. That’s where services like Kong come in to make management easier, more reliable and faster with a single operating environment for containers, microservices and APIs.

If you have any additional questions, post them on Kong Nation. To stay in touch, join the Kong Community.

Now that you've successfully moved an application from VM to Kubernetes, you may find these other tutorials helpful: