A year ago, Harry Bagdi wrote an amazingly helpful blog post on observability for microservices. And by comparing titles, it becomes obvious that my blog post draws inspiration from his work. To be honest, that statement on drawing inspiration from Harry extends well beyond this one blog post - but enough about that magnificent man and more on why I chose to revisit his blog.

When he published it, our company was doing an amazing job at one thing: API gateways. So naturally, the blog post only covered using the Prometheus monitoring stack in conjunction with Kong Gateway. But to quote Bob Dylan, "the times they are a-changin' [and sometimes an API gateway is just not enough]". So, we released Kuma, an open source service mesh that works in conjunction with Kong Gateway. How does this change observability for the microservices in our Kubernetes cluster? Well, let me show you.

Prerequisites

The first thing to do is to set up Kuma and Kong. But why reinvent the wheel when my previous blog post already covered exactly how to do this? Follow the steps in that post to set up Kong and Kuma in a Kubernetes cluster.

Install Prometheus Monitoring Stack

Once the prerequisite cluster is set up, getting the Prometheus monitoring stack set up is a breeze. Just run the following `kumactl install [..]` command and it will deploy the stack. This is the same `kumactl` binary we used in the prerequisite step. If you do not have it set up, you can download it from Kuma's installation page.

To check if everything has been deployed, check the `kuma-metrics` namespace:
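A minimal way to run that check, assuming `kubectl` is pointed at the same cluster you used in the prerequisite step:

```shell
# List the pods that the metrics install created in the kuma-metrics namespace
kubectl get pods --namespace kuma-metrics
```

Since the install ships both Prometheus and Grafana, you should see pods for each. Wait until they all report a `Running` status before moving on.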

Enable Metrics on Mesh

Once the pods are all up and running, we need to edit the Kuma mesh object to include the `metrics: prometheus` section you see below. It is not included by default, so you can edit the mesh object using `kubectl` like so:
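Here is a sketch of what that edit can look like. It assumes your mesh is named `default` and that your Kuma version accepts the short `prometheus: {}` form of the metrics section. Newer Kuma releases instead expect an `enabledBackend` field plus a `backends` list, so check the documentation for the version you installed.

```shell
# Open the Mesh resource in your editor.
# Assumption: the mesh is named "default".
kubectl edit mesh default

# In the editor, add a metrics block under spec and leave every other
# field (for example, any mTLS settings) exactly as it is:
#
# spec:
#   metrics:
#     prometheus: {}
```

Editing in place, rather than applying a fresh manifest, avoids accidentally wiping out settings that the prerequisite steps added to the mesh.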

Accessing Grafana Dashboards

We can visualize our metrics with Kuma's prebuilt Grafana dashboards. And the best part is that Grafana was installed alongside the Prometheus stack, so if you port-forward the Grafana server pod in the `kuma-metrics` namespace, you will see all your metrics:
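One way to do the port-forward is sketched below. It targets the Grafana Service rather than an individual pod, and it assumes the Service is named `grafana` and listens on port 80. If your install differs, substitute the pod name from `kubectl get pods` and the port it exposes.

```shell
# Forward local port 3000 to Grafana in the kuma-metrics namespace.
# Assumption: the Service is named "grafana" and listens on port 80.
kubectl port-forward svc/grafana --namespace kuma-metrics 3000:80
```

The command keeps running in the foreground, so leave that terminal open while you use Grafana.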

The next step is to visit the Grafana dashboard (http://localhost:3000) to query the metrics that Prometheus is scraping from the Envoy sidecar proxies within the mesh. If you are prompted to log in, just use `admin` for both the username and password.

There will be three Kuma dashboards:

- Kuma Mesh: High-level overview of the entire service mesh

- Kuma Dataplane: In-depth metrics on a particular Envoy dataplane

- Kuma Service to Service: Metrics on connection/traffic between two services

But we can do better…by stealing more ideas from Harry's blog. In the remainder of this tutorial, I will explain how you can extend the Prometheus monitoring stack we just deployed to work in conjunction with Kong.

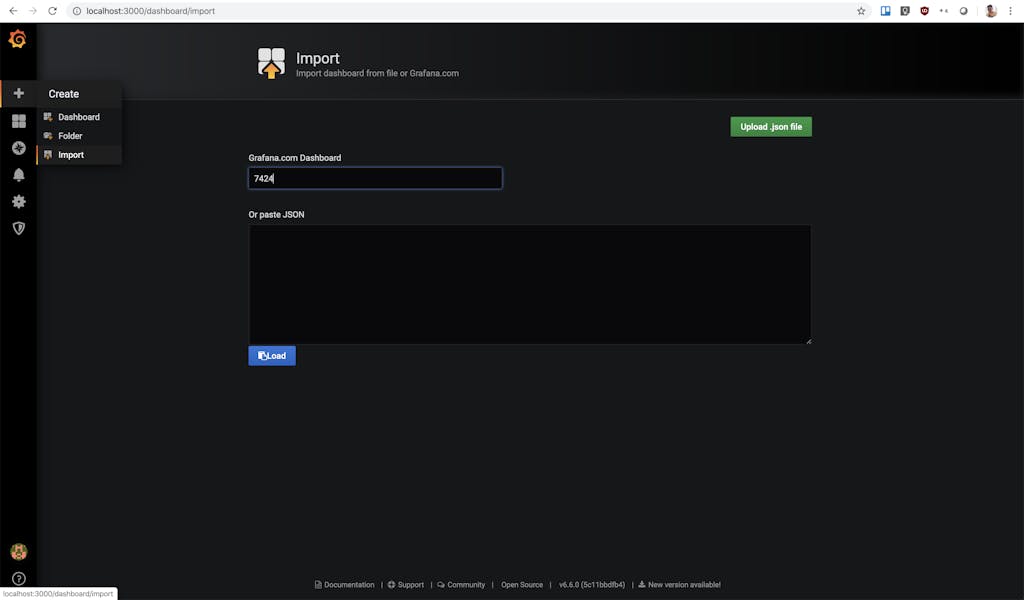

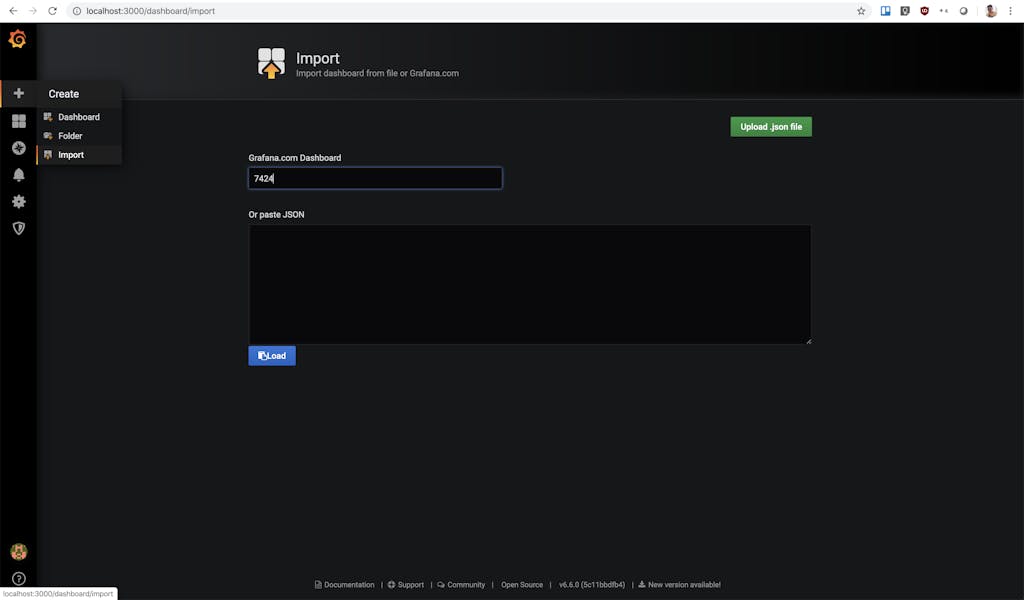

To start, while we are still on Grafana, let's add the official Kong dashboard to our Grafana server. Visit this import page in Grafana (http://localhost:3000/dashboard/import) to import a new dashboard:

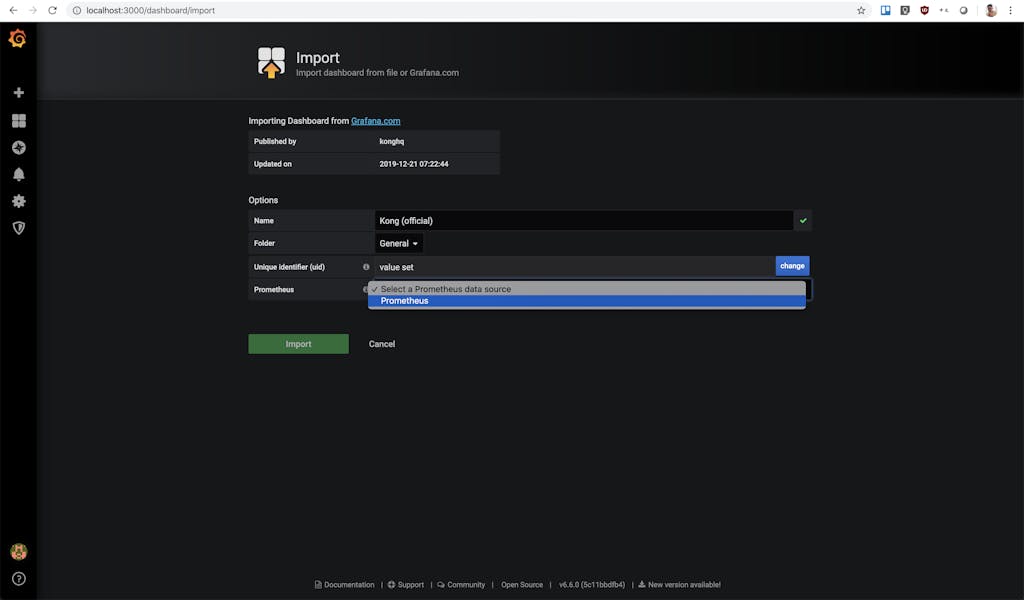

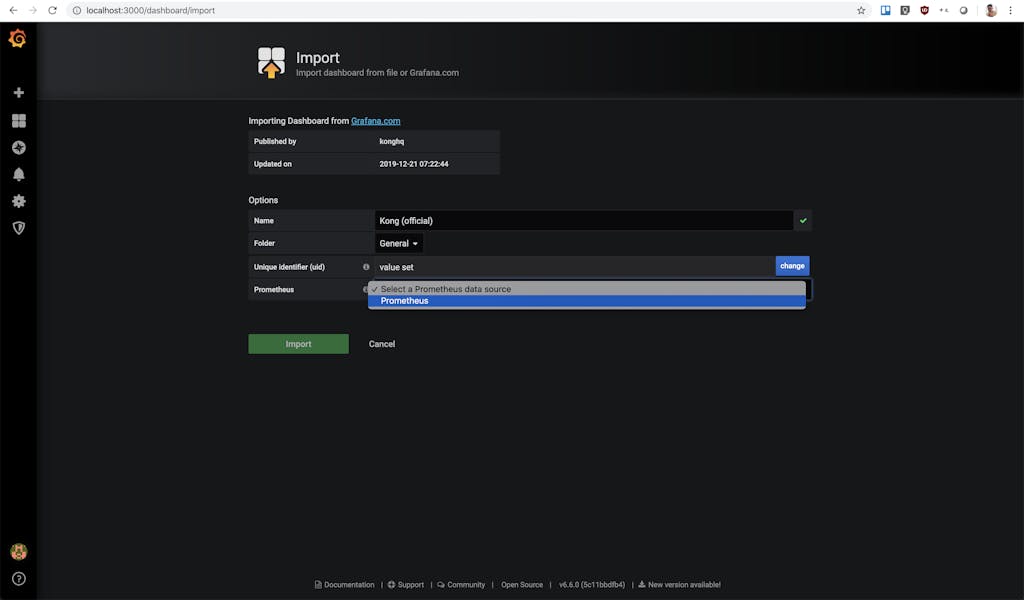

On this page, enter the Kong Grafana dashboard ID `7424` into the top field. If you entered the ID correctly, Grafana will automatically take you to the import options page shown in the screenshot:

Here, you need to select the Prometheus data source. The drop-down should only have one option, named "Prometheus," so select that. Click the green "Import" button when you are done. But before we go explore the new dashboard we created, we need to set up the Prometheus plugin on the Kong API gateway.

Enabling Prometheus Plugins on Kong Ingress Controller

We need the Prometheus plugin to expose metrics related to Kong and proxied upstream services in Prometheus exposition format. But you may ask, "wait, didn't we just set up Prometheus by enabling the metrics option on the entire Kuma mesh? And if Kong sits within this mesh, why do we need an additional Prometheus plugin?" I know it may seem redundant, but let me explain. When we enable the metrics option on the mesh, Prometheus only has access to metrics exposed by the data planes (Envoy sidecar proxies) that sit alongside the services in the mesh, not to metrics from the services themselves. Kong Gateway exposes many more metrics of its own, and we can gain insight into them by reusing the same Prometheus server.

Doing so really is quite simple. We will create a Custom Resource in Kubernetes to enable the Prometheus plugin in Kong. This configures Kong to collect metrics for all requests proxied via Kong and expose them to Prometheus.

Execute the following to enable the Prometheus plugin for all requests:
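The sketch below shows one way to create that Custom Resource. It assumes your Kong Ingress Controller version provides the `KongClusterPlugin` kind and uses the `kong` ingress class. Older controller releases used `kind: KongPlugin` with the same `global` label, so adjust it to match your install.

```shell
# Create a cluster-wide plugin resource. The "global" label asks the
# ingress controller to apply the plugin to every request Kong proxies.
# Assumptions: the KongClusterPlugin CRD is installed and the ingress
# class is "kong".
cat <<'EOF' | kubectl apply -f -
apiVersion: configuration.konghq.com/v1
kind: KongClusterPlugin
metadata:
  name: prometheus
  annotations:
    kubernetes.io/ingress.class: kong
  labels:
    global: "true"
plugin: prometheus
EOF
```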

Export the PROXY_IP once again since we'll be using it to generate some consistent traffic.

This will be the same PROXY_IP step we used in the prerequisite blog post. If nothing shows up when you `echo $PROXY_IP`, you will need to revisit the prerequisite and make sure Kong is set up correctly within your mesh. But if you can access the application via the PROXY_IP, run this loop to throw traffic into our mesh:
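A loop along these lines will do. It assumes `PROXY_IP` holds the address of the Kong proxy exactly as exported in the prerequisite post, and that the application answers on the root path. The 100 ms pause is an arbitrary choice to keep the traffic steady.

```shell
# Stop early with a clear message if PROXY_IP is empty or unset
: "${PROXY_IP:?PROXY_IP is not set; revisit the prerequisite steps}"

# Send a request every 100 ms until you press Ctrl+C
while true; do
  curl -s -o /dev/null "$PROXY_IP"
  sleep 0.1
done
```

Leave it running in its own terminal so the dashboards have a steady stream of requests to chart.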

"Show Me the ~~Money~~ Metrics!"

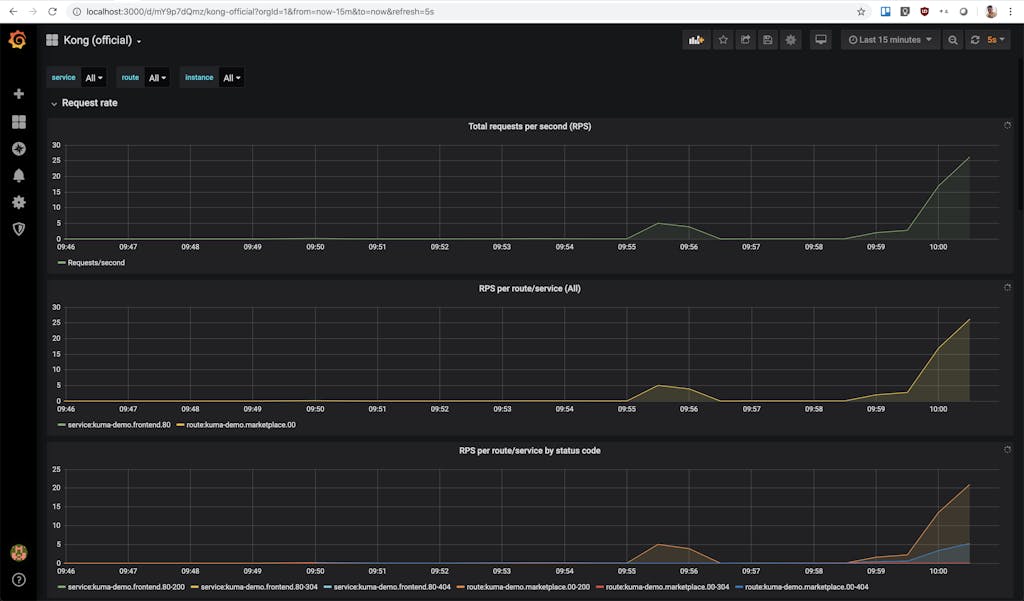

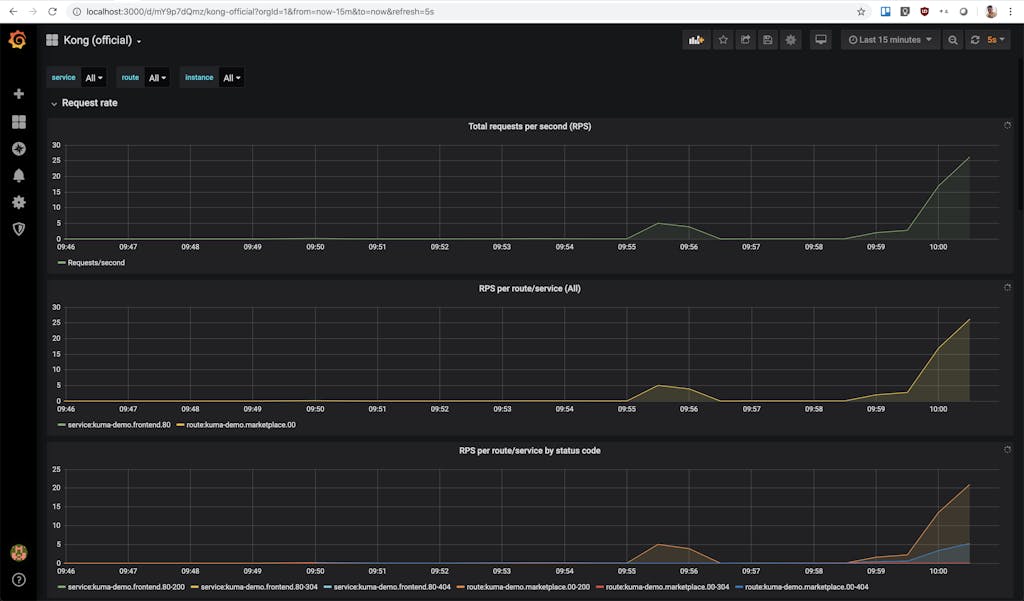

Hell! It’s a family motto. Say it with me, ~~Jerry~~ Harry. SHOW. ME. THE. METRICS. Go back to the Kong Grafana dashboard and watch those sweet metrics trickle in:

You now have Kuma and Kong metrics using one Prometheus monitoring stack. That's all for this blog. Thanks, Harry, for the idea! And thank you for following along. Let me know what you would like to see next by tweeting at me at @devadvocado or emailing me at kevin.chen@konghq.com. Until then, be safe and stay home!