Build Agentic Infrastructure and Production-Ready AI Workflows with Kong AI Gateway.

Expose, secure, and govern LLM and MCP resources via a single, unified API platform.

Make your AI initiatives secure, reliable, and cost-efficient

Use the same Gateway to secure, govern, and control LLM consumption from all popular AI providers, including OpenAI, Azure AI, AWS Bedrock, GCP Vertex, and more.

Track LLM usage with pre-built dashboards and AI-specific analytics to make informed decisions and implement effective policies around LLM exposure and AI project rollouts.

Save on LLM token consumption by caching responses to redundant prompts and automatically routing requests to the best model for the prompt.

Automatically generate MCP servers that are secure, reliable, performant, and cost-effective by default.

Watch Kong AI Gateway in action

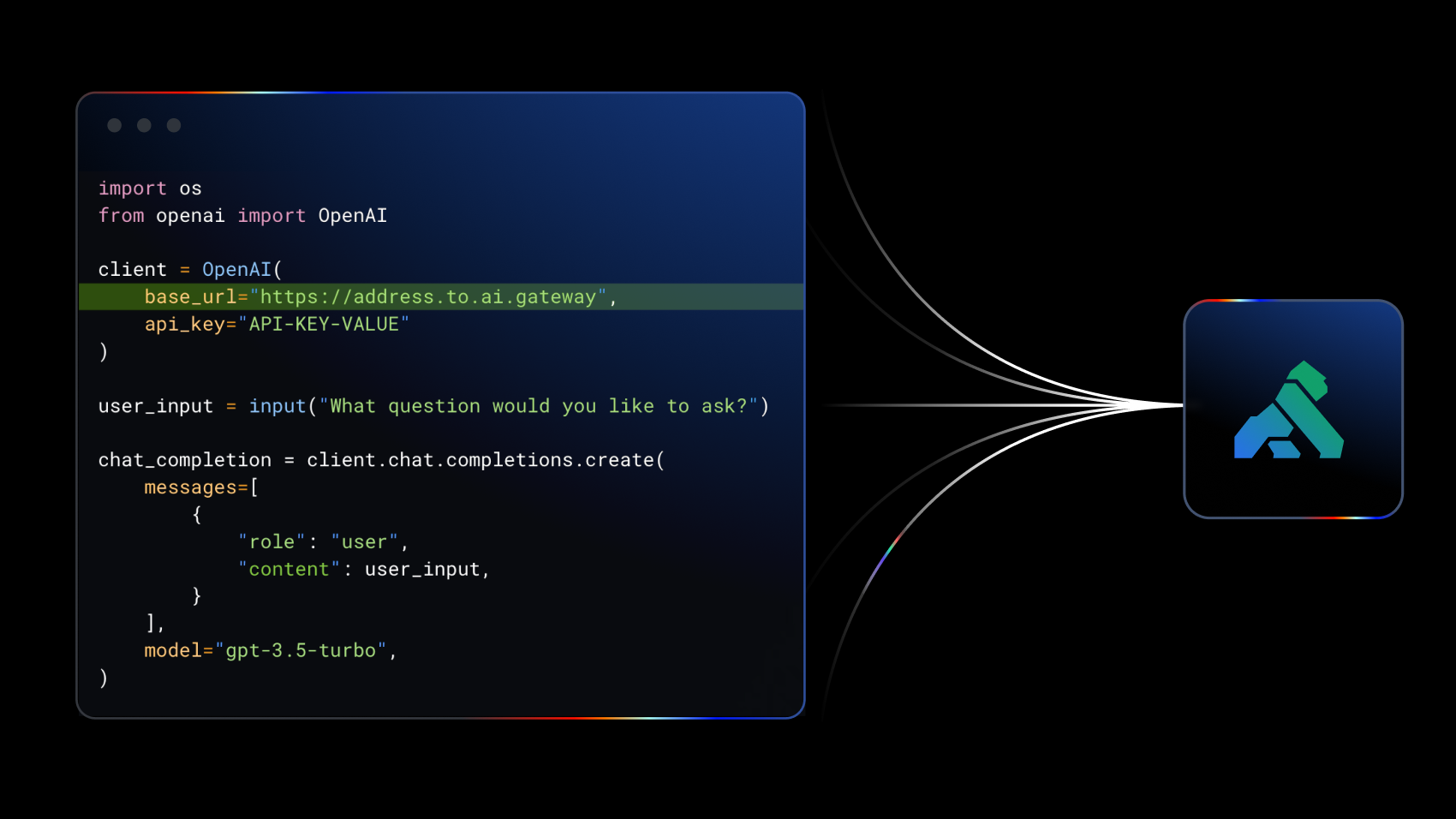

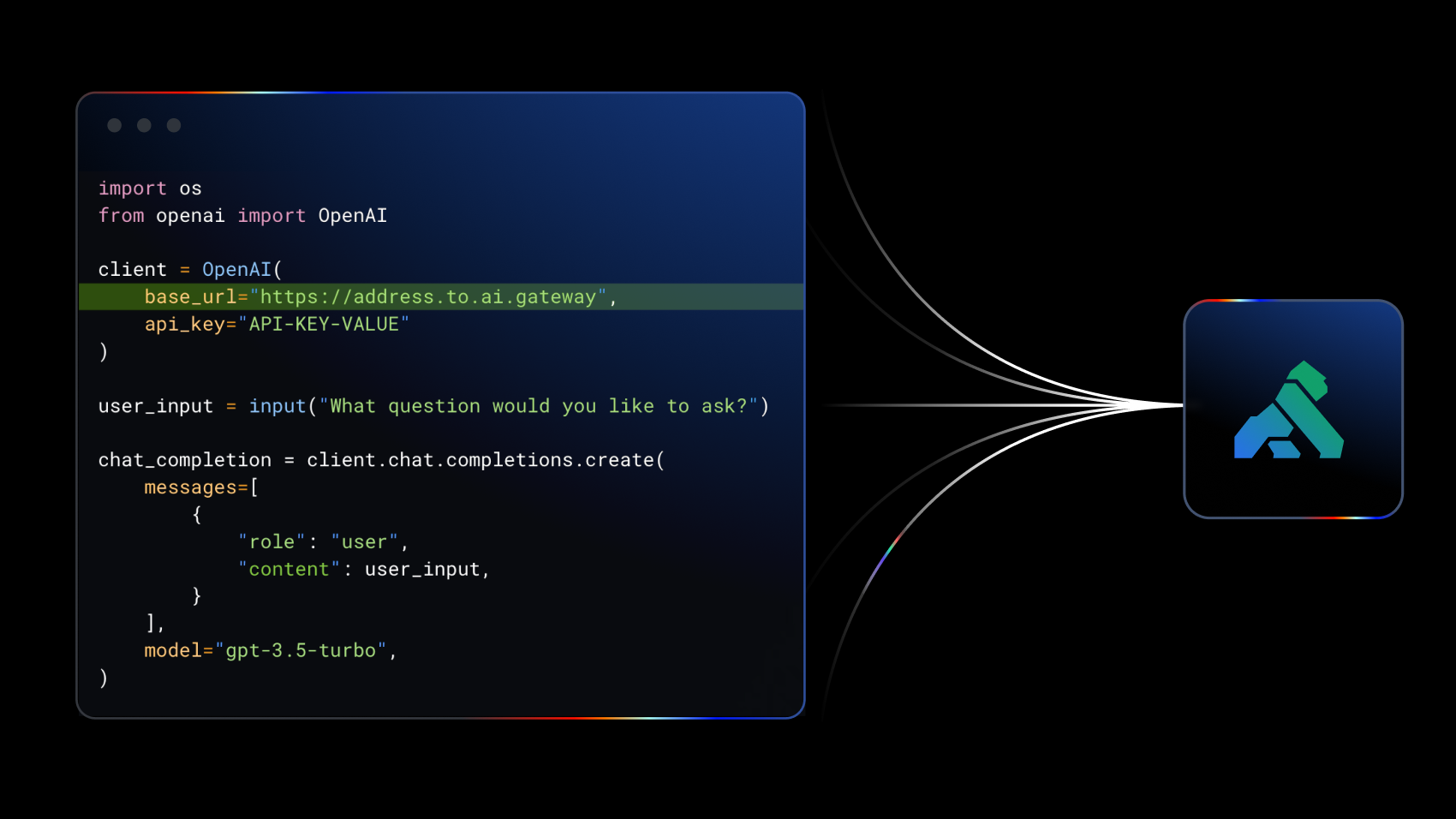

Make your first AI request

Build new AI applications faster with multiple LLMs, AI security, AI metrics, and more.

No-code AI on request

Power all existing API traffic with AI without writing code with declarative configuration.

No-code on AI response

Transform, enrich, and augment API responses with no-code AI integrations.

Secure your AI prompts

Implement advanced prompt security by determining what behaviors are allowed or not.

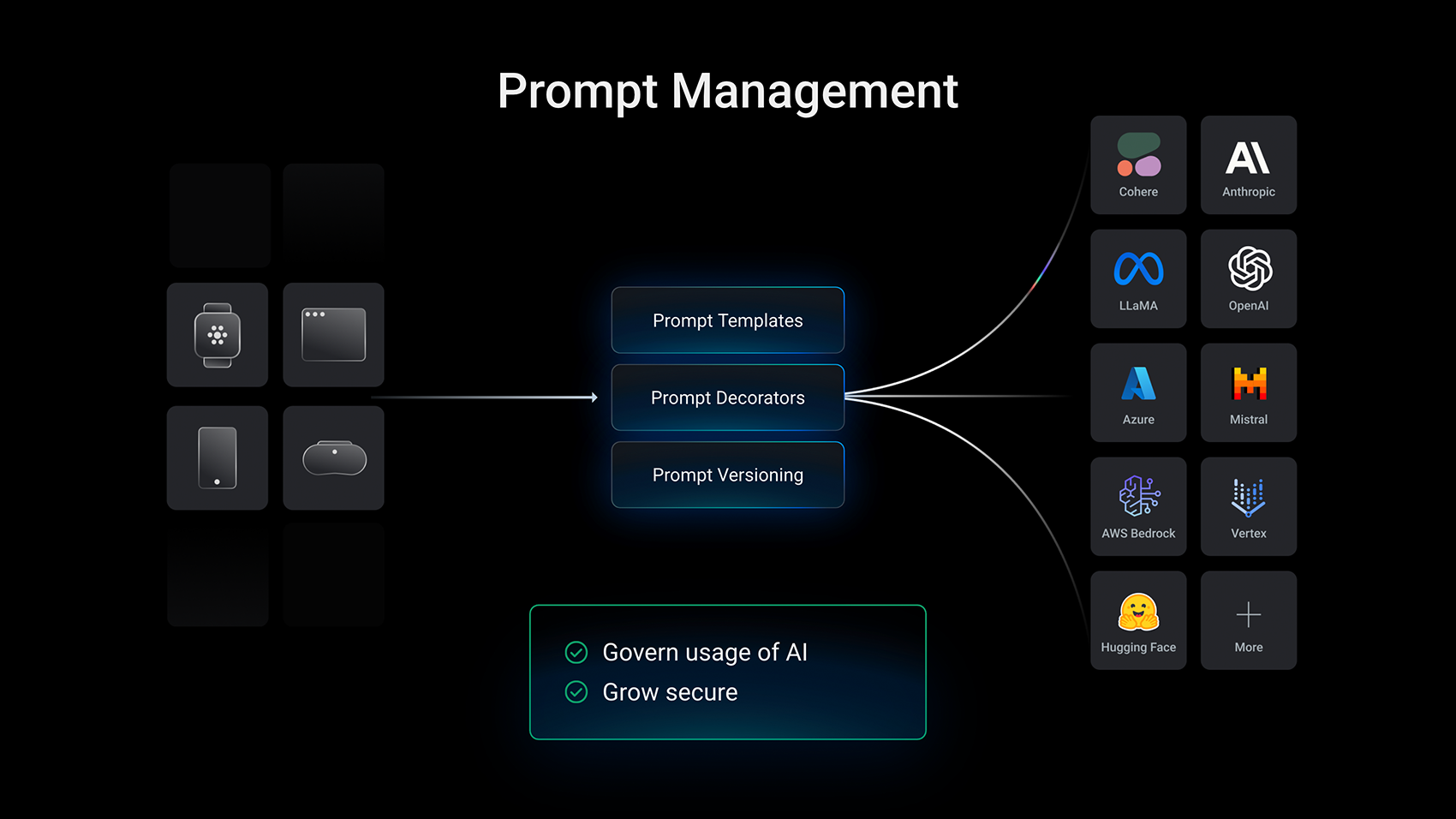

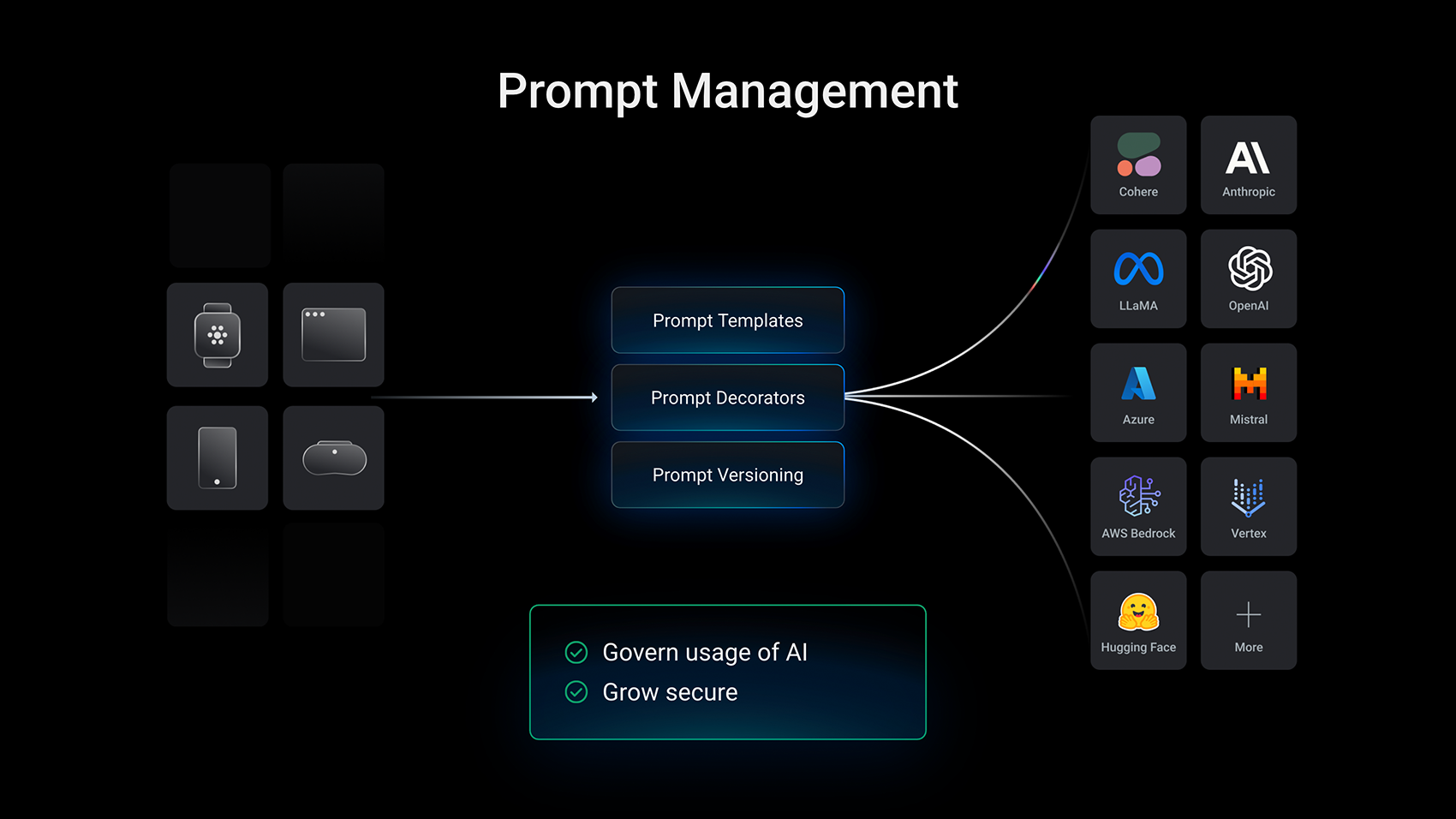

Build prompt templates

Create better prompts with AI templates that are compatible with the OpenAI interface.

Build AI contexts better

Centrally manage the contexts and behaviors of every AI prompt for security and more.

Harness the full potential of AI.

The agentic era demands agentic infrastructure

Govern the entire AI lifecycle with Kong Konnect LLM and MCP infrastructure.

Enforce advanced LLM policies

- Make LLM traffic more efficient with semantic caching, routing, and load balancing.

- Protect resources and ensure compliance with semantic prompt guards, PII sanitization, and more.

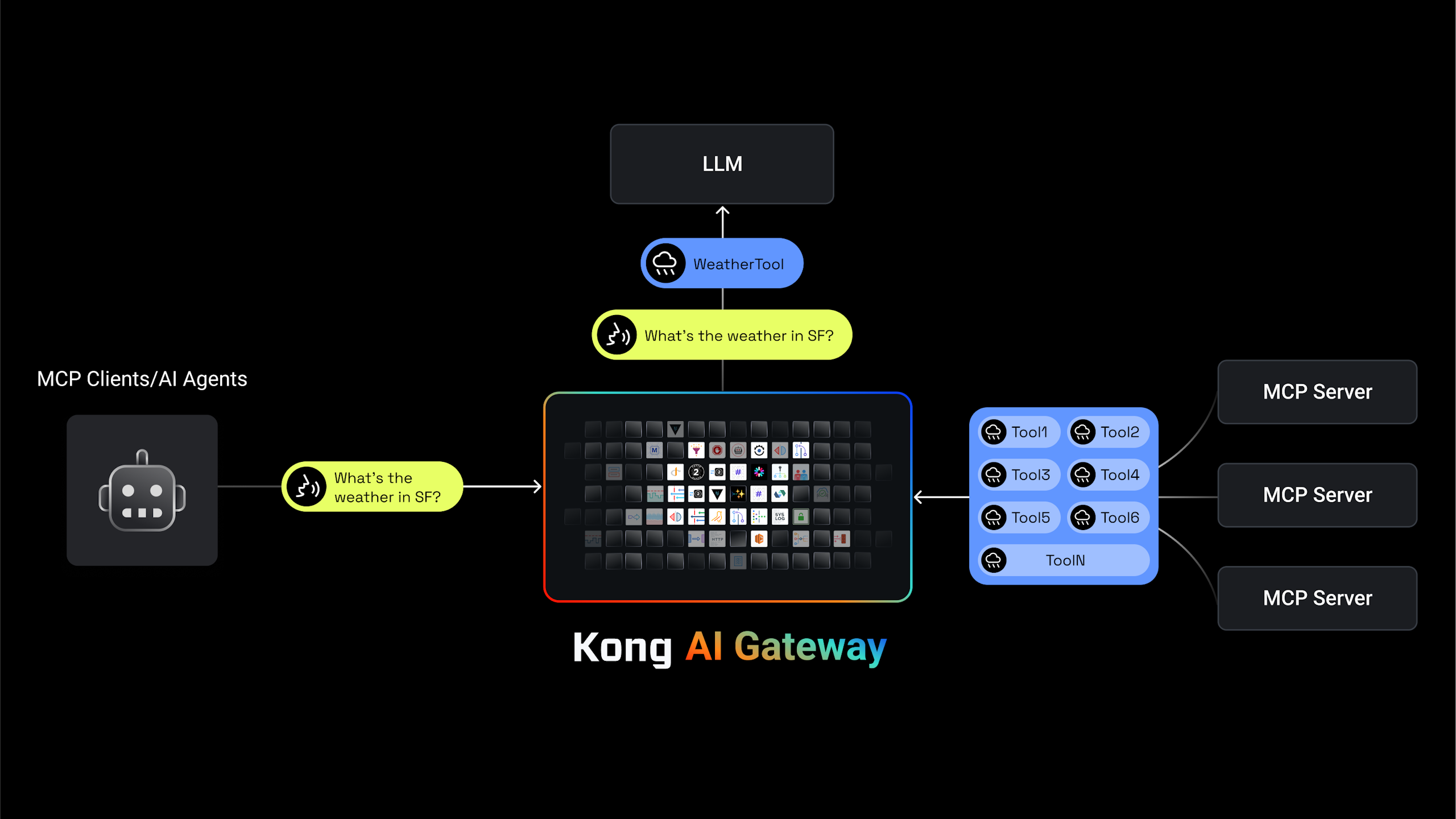

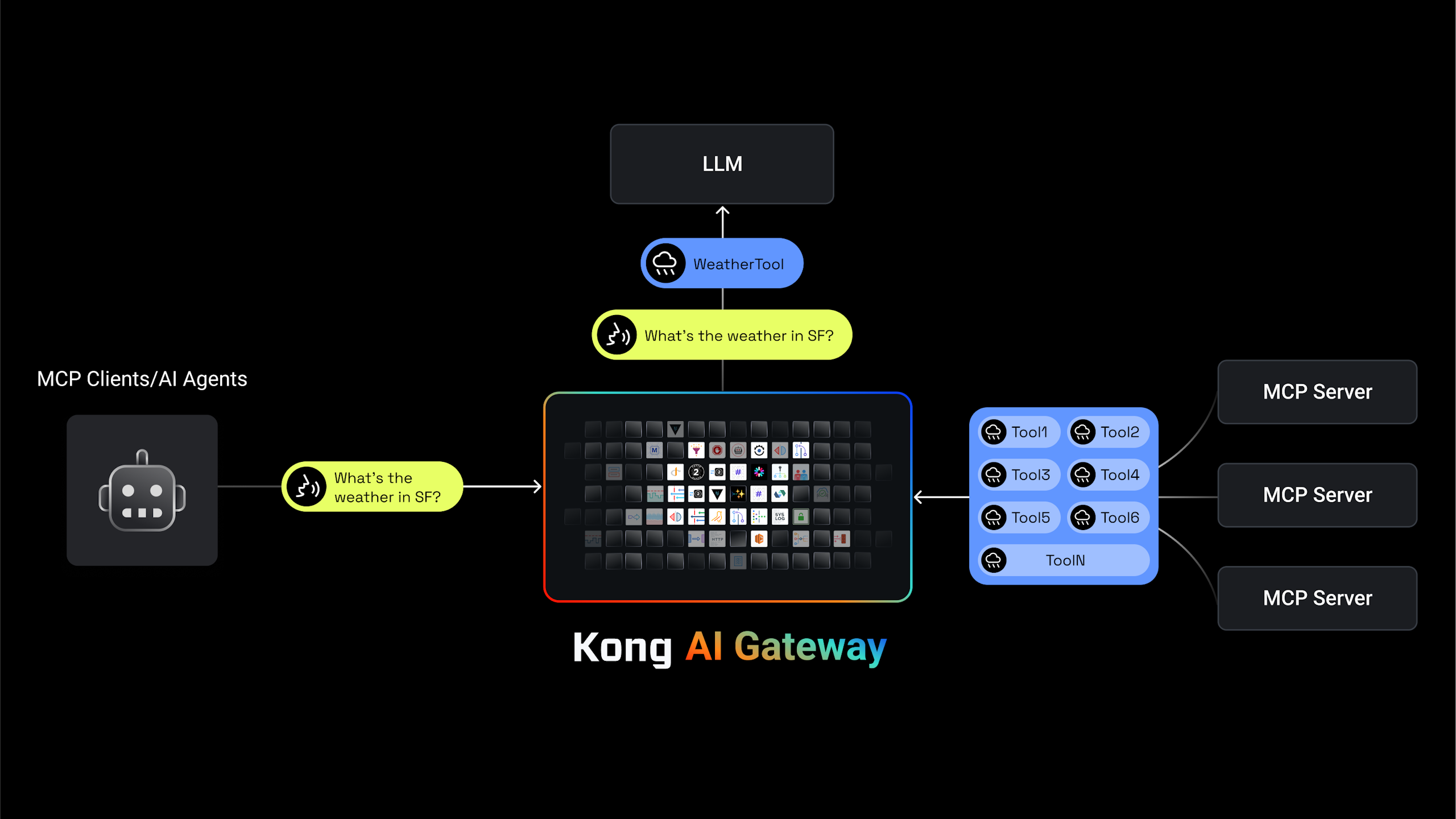

Solve the hardest MCP problems

- Secure all MCP servers in one place with Kong’s dedicated MCP authentication plugin

Capture information around the tools, workflows, prompts, etc. that comprise interactions between MCP clients and servers

Automatically generate secure MCP servers from Kong-managed APIs using centrally defined best practices

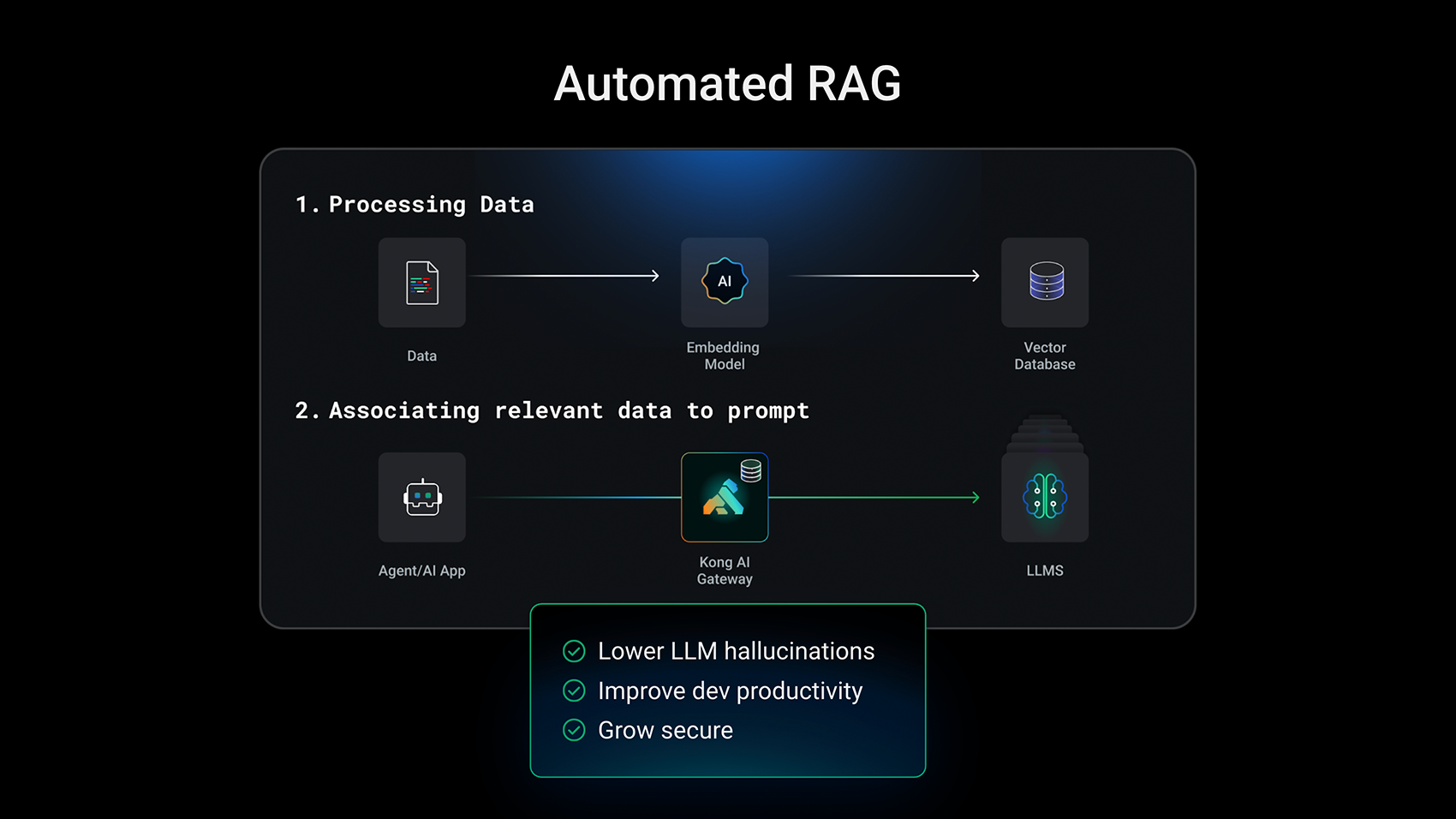

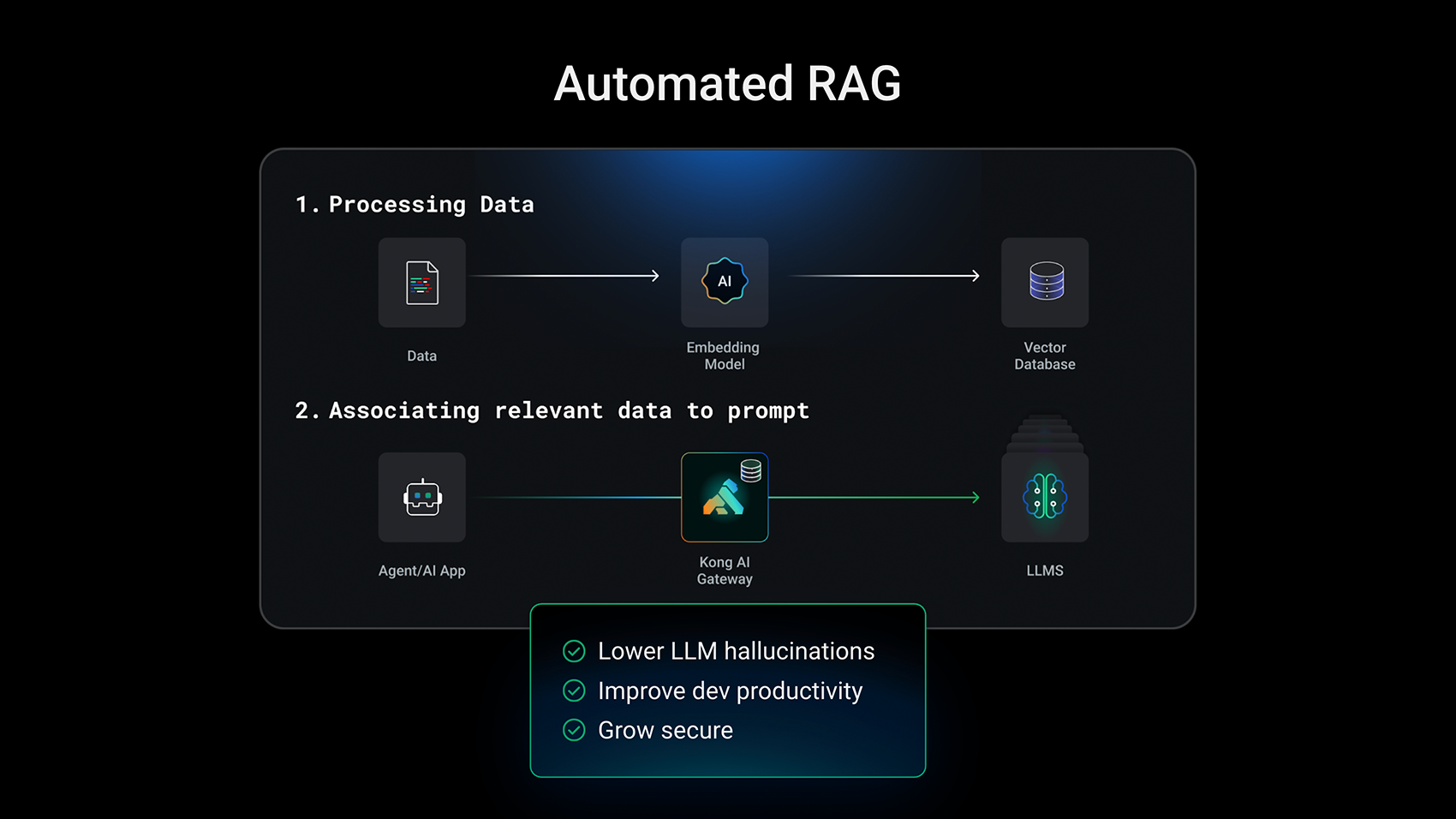

Let Kong implement RAG pipelines for you

- Automatically build RAG pipelines at the gateway layer without needing developer or AI agent intervention.

- Consistently implement RAG pipelines at scale to ensure higher quality LLM responses and reduce hallucinations.

- Enhance governance with the ability to easily configure and update RAG pipelines in a centralized manner.

L7 observability on AI traffic for cost monitoring and tuning

- Track AI consumption as API requests and token usage.

- Optimize AI usage and cost with predictive consumption models.

- Debug AI exposure via logging, tracing, and more.

Ensure every LLM use case is covered

- Use Kong’s unified API interface to work with multiple different AI providers at the flip of a switch.

- Seamlessly switch between AI providers to unlock new use cases and ensure high availability in the event of downtime.

Accelerate AI development with no-code plugins

- Introduce AI inside of your organization without needing to write a single line of code.

- Easily augment, enrich, or transform API traffic using any LLM provider that Kong supports.

There is no AI without APIs.

AI relies on APIs to access data, take action, and integrate with real-world applications. Kong’s API platform provides the production-ready infrastructure needed to roll out your AI initiatives securely and efficiently.

Build AI applications today

Start building advanced AI applications with Kong’s semantic AI Gateway.