Change is the primary cause of service reliability issues for agile engineering teams. In this post, I’ll cover how you can use canary deployments with Kong Gateway and Spinnaker to limit the impact of a buggy change that makes it past your quality gates.

"What is the primary cause of service reliability issues that we see in Azure, other than small but common hardware failures? Change."

~Mark Russinovich, CTO of Azure, on Advancing safe deployment practices.

Canary Deployment

Canary deployment is a technique by which a new version of your software is only visible to a small subset of users—a canary cohort. It deploys the latest version of the software alongside the current stable version. It also splits traffic across these deployments. During a deployment, the key performance indicators—a combination of business and engineering metrics—are monitored across the system on both the canary and stable deployments.

Once you’ve agreed on the metrics and the analysis shows that the canary is safe, proceed with the rollout. If you see any anomalies between canary and stable, route traffic away from the canary so that the service can recover.

Kong Gateway at Razorpay

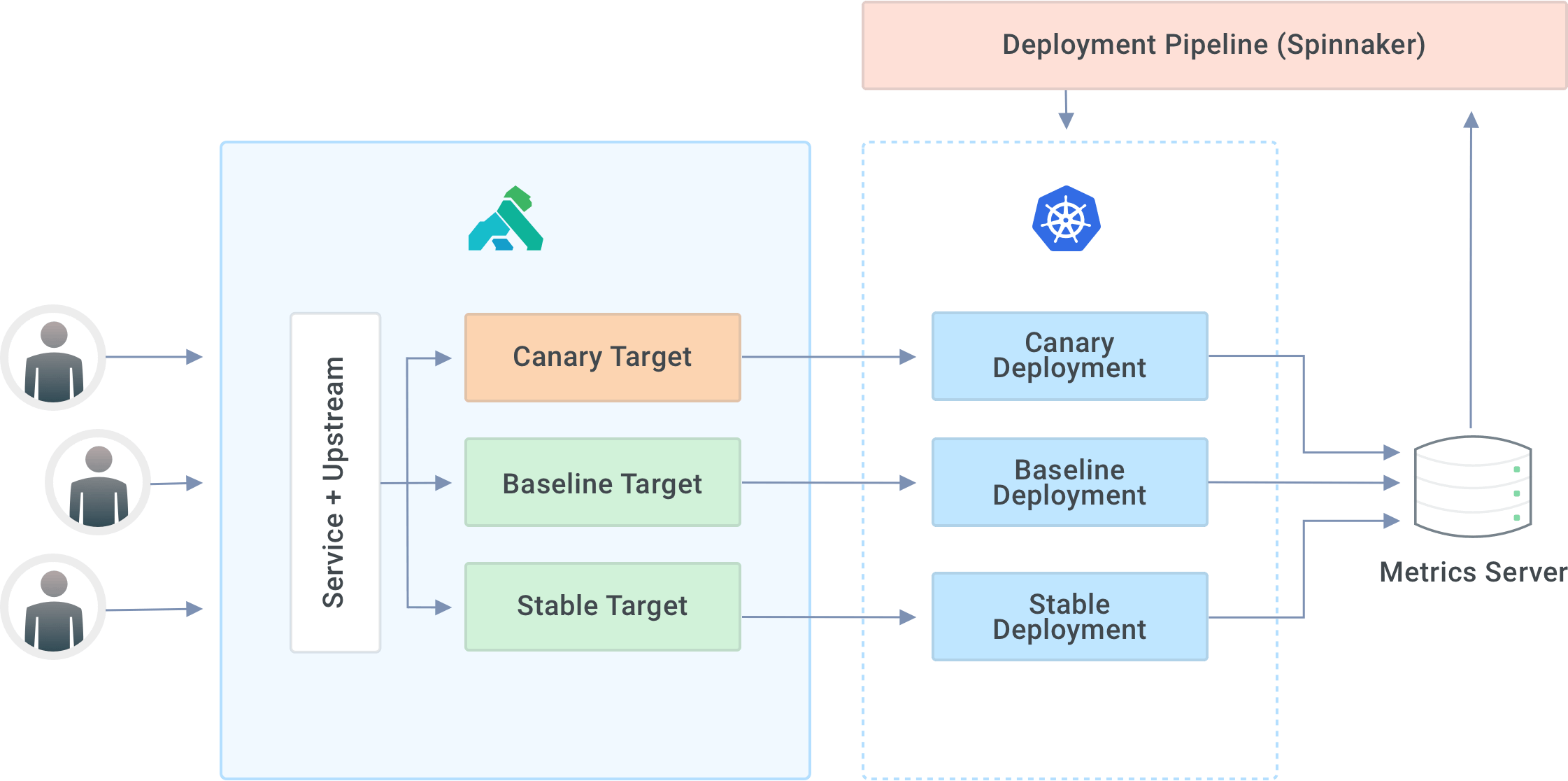

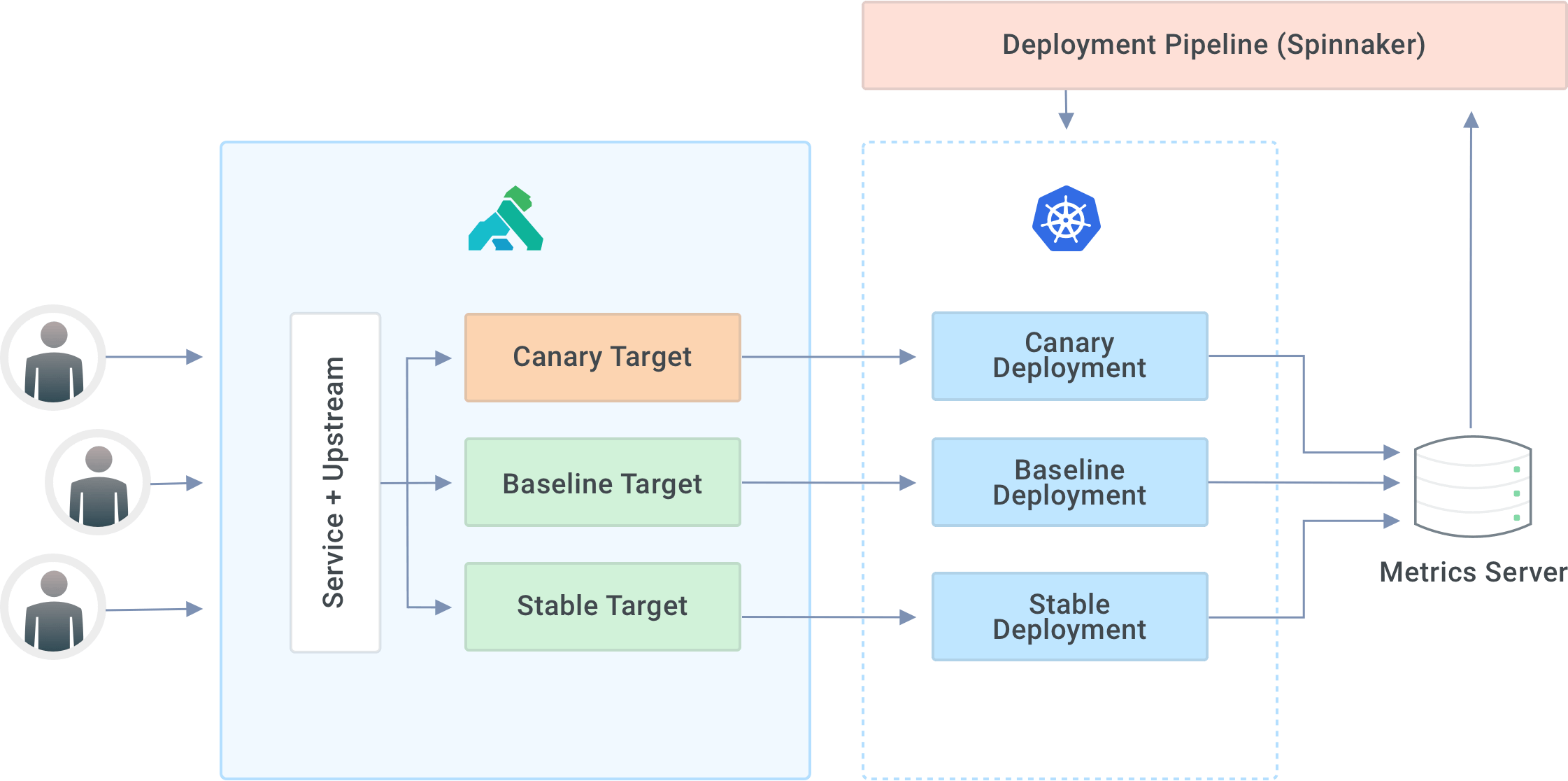

At Razorpay, we use Kong as our API gateway. We leverage its upstream construct to split traffic across the canary and stable deployments of upstream services. While you can compare metrics between the canary and stable deployments directly, you should add a third deployment, the baseline, which runs the current stable code.

When we deploy the v2 version of an upstream service, the pipeline will deploy the v2 version to canary and the v1 version to baseline. The short-lived baseline deployment ensures that your metrics will be free of any effects caused by a long-running process - for example, a memory leak that skews memory usage metrics.

We configure each service in the system with the corresponding service and upstream entities in Kong Gateway. Each upstream entity will, in turn, have three targets—canary, baseline and stable. That’s where traffic will ultimately get routed. Since we deploy our applications on Kubernetes, the targets are usually the corresponding service endpoints responsible for load balancing within the deployment.
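As a rough sketch of that layout, the snippet below builds the Admin API requests for one upstream with three targets. The Admin API address, the upstream name, the Kubernetes service hostnames and the weights are all hypothetical placeholders, not our production values. Nothing is sent until you pass a request to `urllib.request.urlopen`.

```python
import json
from urllib import request

ADMIN_URL = "http://localhost:8001"  # assumed Admin API address

# Hypothetical Kubernetes service endpoints and weights for one upstream.
TARGETS = {
    "payments-stable.default.svc.cluster.local:80": 950,
    "payments-baseline.default.svc.cluster.local:80": 25,
    "payments-canary.default.svc.cluster.local:80": 25,
}


def json_post(url, payload):
    """Build (but do not send) a JSON POST request."""
    return request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_requests(upstream, targets):
    """One request to create the upstream, then one per target."""
    reqs = [json_post(f"{ADMIN_URL}/upstreams", {"name": upstream})]
    for target, weight in targets.items():
        reqs.append(
            json_post(
                f"{ADMIN_URL}/upstreams/{upstream}/targets",
                {"target": target, "weight": weight},
            )
        )
    return reqs
```

Calling `build_requests("payments.upstream", TARGETS)` yields four requests: one for the upstream and one for each of the stable, baseline and canary targets.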

Overview of the deployment + API gateway architecture

Automated Canary Analysis With Spinnaker

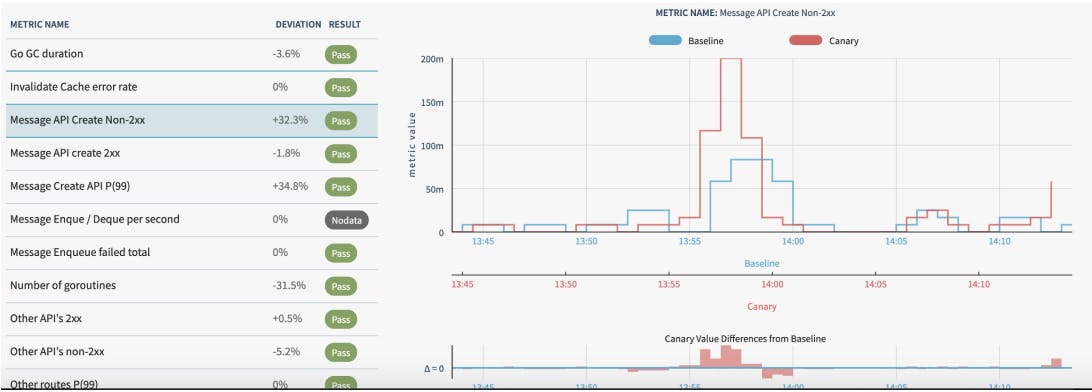

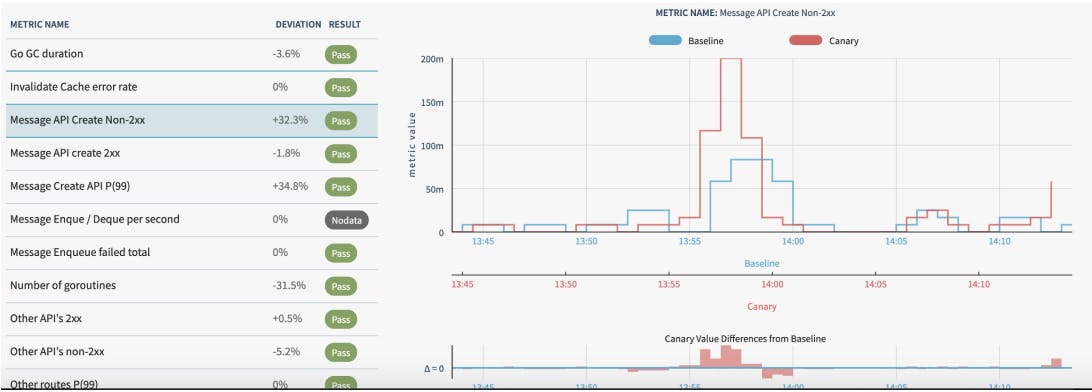

We manage our deployment pipelines with Spinnaker, Netflix’s open source continuous delivery platform, and use Kayenta for automated canary analysis. The Spinnaker pipeline defines how the application is deployed, and the Kayenta configuration defines what to check during canary analysis.

For example, we can configure it to deploy the canary stage. Then, the Kayenta configuration can define the analysis of error-rate and latency metrics. If the analysis fails, we can configure the pipeline to terminate traffic to canary targets and raise an alert.
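To make the pass/fail idea concrete, here is a deliberately simplified check that compares canary metrics against the baseline. It is not how Kayenta judges a canary (Kayenta compares metric time series statistically); the `Metrics` type and both thresholds are hypothetical and only illustrate the kind of decision the pipeline acts on.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    error_rate: float      # fraction of requests that returned HTTP 5XX
    p99_latency_ms: float  # 99th percentile latency in milliseconds


def canary_passes(canary, baseline,
                  max_error_delta=0.01, max_latency_ratio=1.2):
    """Pass only if the canary is not meaningfully worse than the baseline.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    error_ok = canary.error_rate - baseline.error_rate <= max_error_delta
    latency_ok = (
        canary.p99_latency_ms <= baseline.p99_latency_ms * max_latency_ratio
    )
    return error_ok and latency_ok
```

If `canary_passes` returns `False`, the pipeline would move on to the failure branch: stop sending traffic to the canary and raise an alert.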

With the Kong Admin API, terminating traffic to canary is as simple as a DELETE request to remove the corresponding target. Once you DELETE a target, traffic will stop getting routed there. Instead, traffic will be distributed among the other available targets—baseline and stable in our case.
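A minimal sketch of that call, assuming the Admin API is reachable at a hypothetical local address and that the target is identified by its `host:port` string. The `send` parameter exists only so the function can be exercised without a live gateway.

```python
from urllib import request
from urllib.parse import quote

ADMIN_URL = "http://localhost:8001"  # assumed Admin API address


def delete_target_request(upstream, target):
    """Build the DELETE request that removes one target from an upstream."""
    url = (
        f"{ADMIN_URL}/upstreams/{quote(upstream, safe='')}"
        f"/targets/{quote(target, safe=':')}"
    )
    return request.Request(url, method="DELETE")


def remove_canary_target(upstream, target, send=request.urlopen):
    """Send the DELETE and report whether Kong answered 204 No Content."""
    with send(delete_target_request(upstream, target)) as response:
        return response.status == 204
```

A failure branch in the pipeline could call `remove_canary_target("payments.upstream", "payments-canary.default.svc.cluster.local:80")`, where both names are placeholders for your own upstream and canary target.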

Kayenta dashboard of a passing canary stage

Progressive Deployments Using Kong Gateway

In practice, deployment pipelines tend to be a bit more nuanced. For instance, we use Kong’s Admin API extensively to do progressive deployments: traffic on canary starts at 2.5% and gradually ramps up to 10% over time while canary analysis runs concurrently. Ramping up gradually through the Admin API lets us catch functional issues and performance regressions while only a small share of traffic is exposed.
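The arithmetic behind such a ramp can be sketched as follows. The number of steps, the total weight of 1000 and the choice to give the baseline the same share as the canary are assumptions made for illustration; the post only fixes the 2.5% and 10% endpoints.

```python
def ramp_steps(start_pct, end_pct, steps):
    """Evenly spaced canary percentages from start_pct to end_pct."""
    if steps < 2:
        raise ValueError("need at least two steps")
    delta = (end_pct - start_pct) / (steps - 1)
    return [round(start_pct + i * delta, 4) for i in range(steps)]


def weights_for(canary_pct, total=1000):
    """Translate a canary percentage into integer target weights.

    Assumption: baseline receives the same share as canary so that the
    two are compared on similar traffic volumes.
    """
    canary = round(total * canary_pct / 100)
    baseline = canary
    stable = total - canary - baseline
    return {"canary": canary, "baseline": baseline, "stable": stable}


for pct in ramp_steps(2.5, 10.0, 4):
    print(pct, weights_for(pct))
```

Each set of weights would then be applied to the upstream's targets through the Admin API, with a canary analysis run between steps.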

Canary release plugin

Kong Konnect also comes with a canary release plugin that can help you do progressive rollouts without depending on a separate CD platform. This plugin supports two operation modes:

- Static: You can specify the canary split as a percentage, and Kong will route traffic based on this percentage. You can do this by setting config.percentage.

- Progressive: You can specify a duration over which traffic will be gradually ramped up on the new deployment. You can do this by setting config.duration.

The plugin can also schedule a canary release at a specific time and control the traffic split based on ACL groups. For example, you might want to ensure that a particular customer’s traffic never goes to the canary deployment. You can do this by configuring config.groups and setting config.hash to deny.
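The three setups described above could be expressed as plugin payloads along these lines. The percentage, duration, start, hash and groups settings come from the description above; the plugin name `canary` and the `upstream_host` field reflect my reading of the plugin documentation and should be checked against your Kong version. The hostnames, group names and numbers are placeholders.

```python
def static_canary(upstream_host, percentage):
    """Static mode: send a fixed percentage of traffic to the canary."""
    return {
        "name": "canary",
        "config": {"upstream_host": upstream_host, "percentage": percentage},
    }


def progressive_canary(upstream_host, start_epoch, duration_seconds):
    """Progressive mode: ramp up over duration_seconds from start_epoch."""
    return {
        "name": "canary",
        "config": {
            "upstream_host": upstream_host,
            "start": start_epoch,
            "duration": duration_seconds,
        },
    }


def group_denied_canary(upstream_host, groups):
    """Keep consumers in the listed ACL groups off the canary."""
    return {
        "name": "canary",
        "config": {
            "upstream_host": upstream_host,
            "hash": "deny",
            "groups": list(groups),
        },
    }
```

For example, `group_denied_canary("payments-canary.internal", ["key-customers"])` describes a release in which consumers in the hypothetical `key-customers` group always stay on the stable deployment.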

Granular Control Over Traffic Split

Our choice of using Kong as the API gateway has paid off here. As mentioned previously, Kong Gateway allows us to specify the traffic split to a high degree of precision. More importantly, it also handles the reload of the underlying OpenResty workers behind the scenes. All it takes is a REST API call from our Spinnaker pipeline to re-route traffic between the stable and canary deployments.

Intelligent Load Balancing

Kong Gateway also gives us flexibility with routing based on the authenticated user since we handle authentication at the API gateway itself. This allows us to specify our routing rules such that the traffic from a particular user will always go to a deterministic target. It uses consistent hashing to distribute traffic across an upstream's targets based on the hashing field supplied in the config. This comes with the usual caveats: the hash key needs sufficient cardinality to avoid hotspots.

We can configure the upstream's hash_on setting to use different attributes of the request: header, cookie, IP or consumer. In the context of Kong Gateway, a consumer has a one-to-one mapping to users. As long as we have an authentication plugin configured, Kong Gateway will resolve the consumer for every request based on the Authorization header and route traffic based on the authenticated user.
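The toy function below illustrates why hashing on the consumer gives deterministic routing. It is not Kong's algorithm: Kong uses a consistent-hashing ring, whereas this simple modulo scheme would move more users around when weights change. The consumer IDs and weights are made up.

```python
import hashlib

# The upstream setting discussed above, as it would appear in a payload.
UPSTREAM_HASHING = {"hash_on": "consumer"}


def pick_target(consumer_id, weights):
    """Map a consumer to a target, deterministically and by weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(consumer_id.encode()).digest()
    slot = int.from_bytes(digest[:8], "big") % total
    # Walk the weight ranges until the slot falls inside one of them.
    for target, weight in weights.items():
        if slot < weight:
            return target
        slot -= weight
    raise AssertionError("unreachable: slot is always below total")


WEIGHTS = {"stable": 950, "baseline": 25, "canary": 25}
```

The same consumer always lands on the same target for a given set of weights, and a key with few distinct values (say, a country code) would concentrate traffic on a handful of slots, which is the hotspot risk mentioned above.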

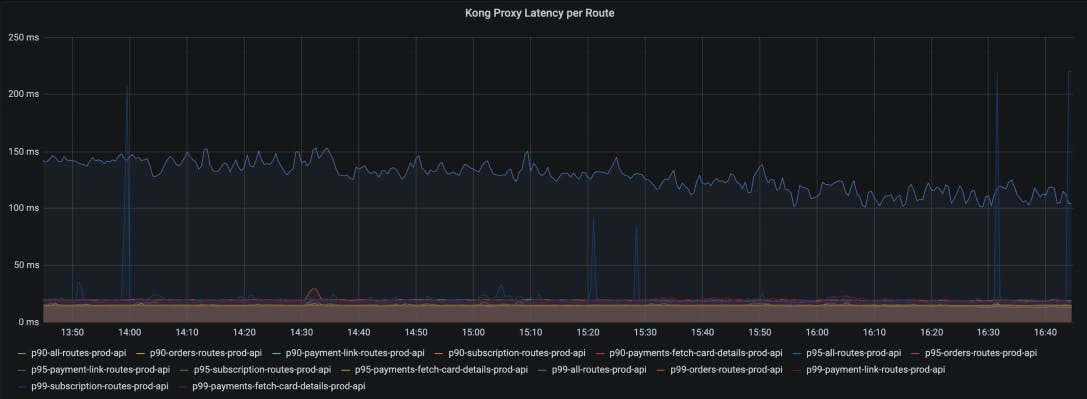

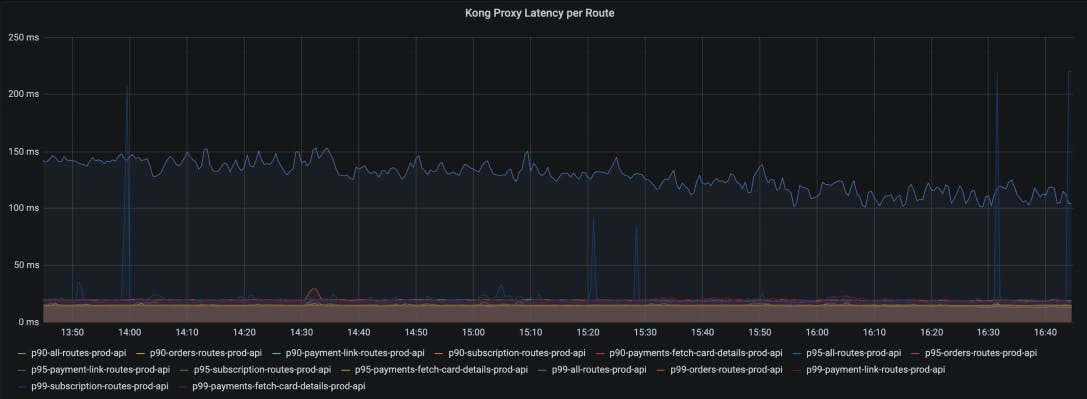

Kong Gateway's Prometheus Plugin for Additional Instrumentation

Kong Gateway comes bundled with a robust Prometheus plugin implementation which exposes metrics on status codes and latency histograms at a service and route level. This allows us to configure our canary analysis stage to look at P99 latencies and the rate of HTTP 5XX at the gateway level.

Since the plugin exposes metrics labeled by service and route, we can have more granular canary stages to evaluate metrics for specific routes over and above the analysis at a service level.
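A request that enables the plugin for all services can be sketched as below. The Admin API address is an assumed local default, and the payload is kept to the plugin name only; any further settings depend on your Kong version.

```python
import json
from urllib import request


def enable_prometheus_request(admin_url="http://localhost:8001"):
    """Build (but do not send) the request that enables the plugin globally.

    Posting to /plugins without a service or route scope applies the
    plugin to every service.
    """
    return request.Request(
        f"{admin_url}/plugins",
        data=json.dumps({"name": "prometheus"}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends it to the gateway.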

Enabling the Prometheus plugin globally, with a POST to the Admin API's /plugins endpoint, exposes latency and throughput metrics for both Kong and the proxied upstreams. The metrics are labeled with the service and route names, and you can use the official Grafana dashboard to visualise them.

A sample of latency metrics available at a per route granularity

If you have any additional questions, post them on Kong Nation.

To stay in touch, join the Kong Community.
