The world’s most adopted open source API gateway

Loved by developers. Trusted by enterprises.

Lightweight, fast, and flexible cloud-native API gateway

Ultra-lightweight, horizontally scalable NGINX engine with 50K+ transactions per second per node.

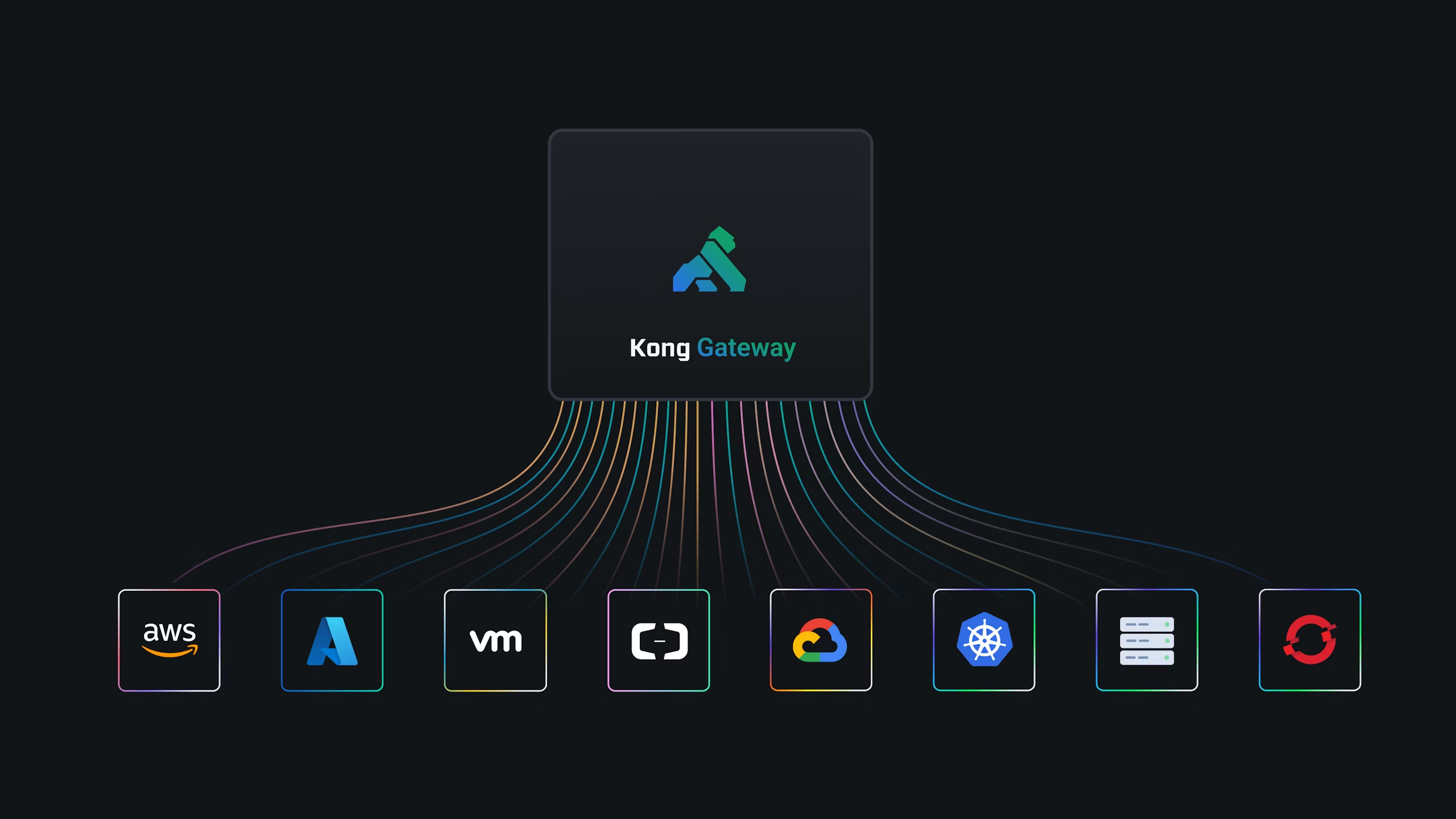

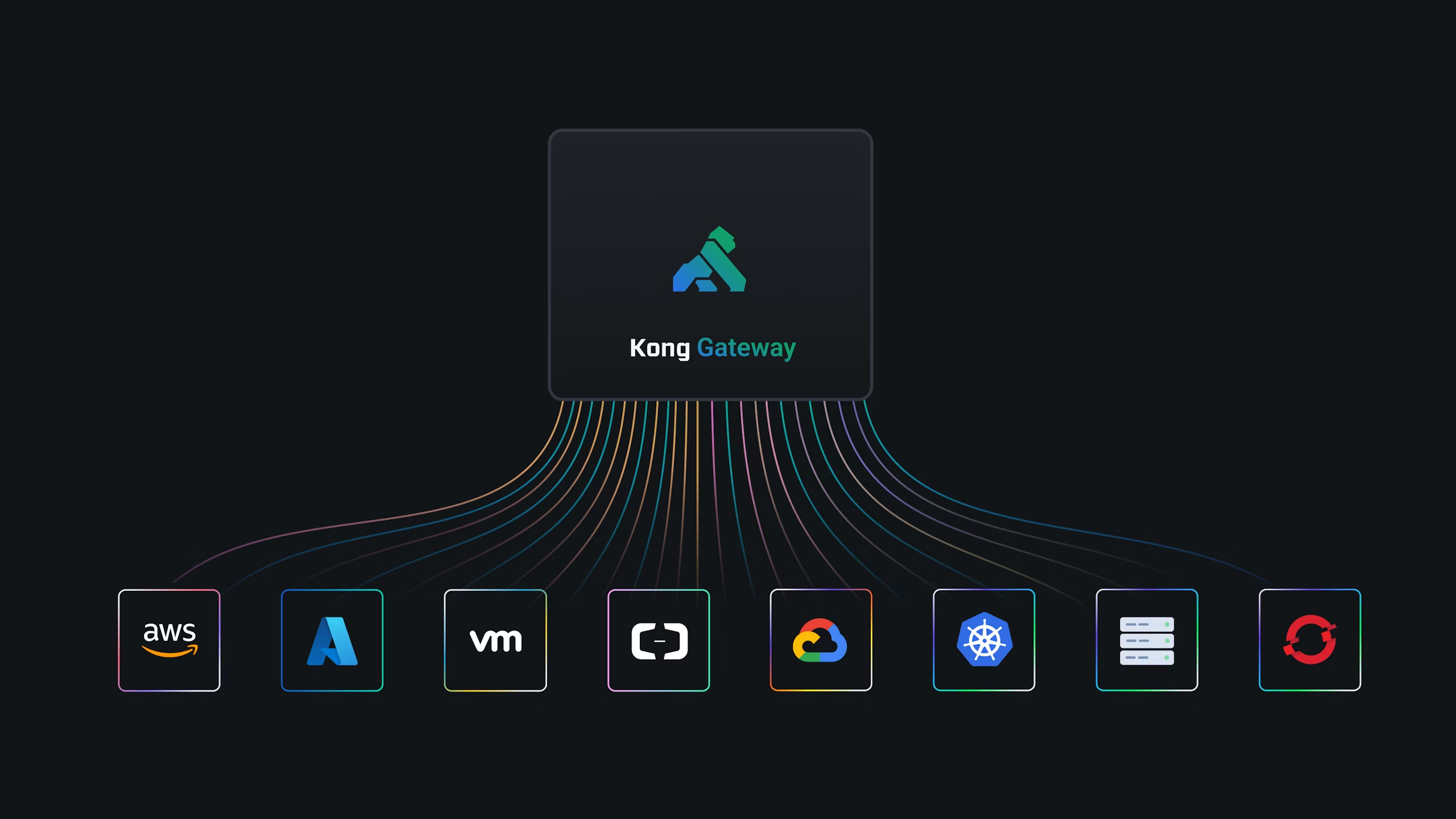

Kong Gateway delivers the architectural flexibility you need to operate across any cloud, platform, or protocol.

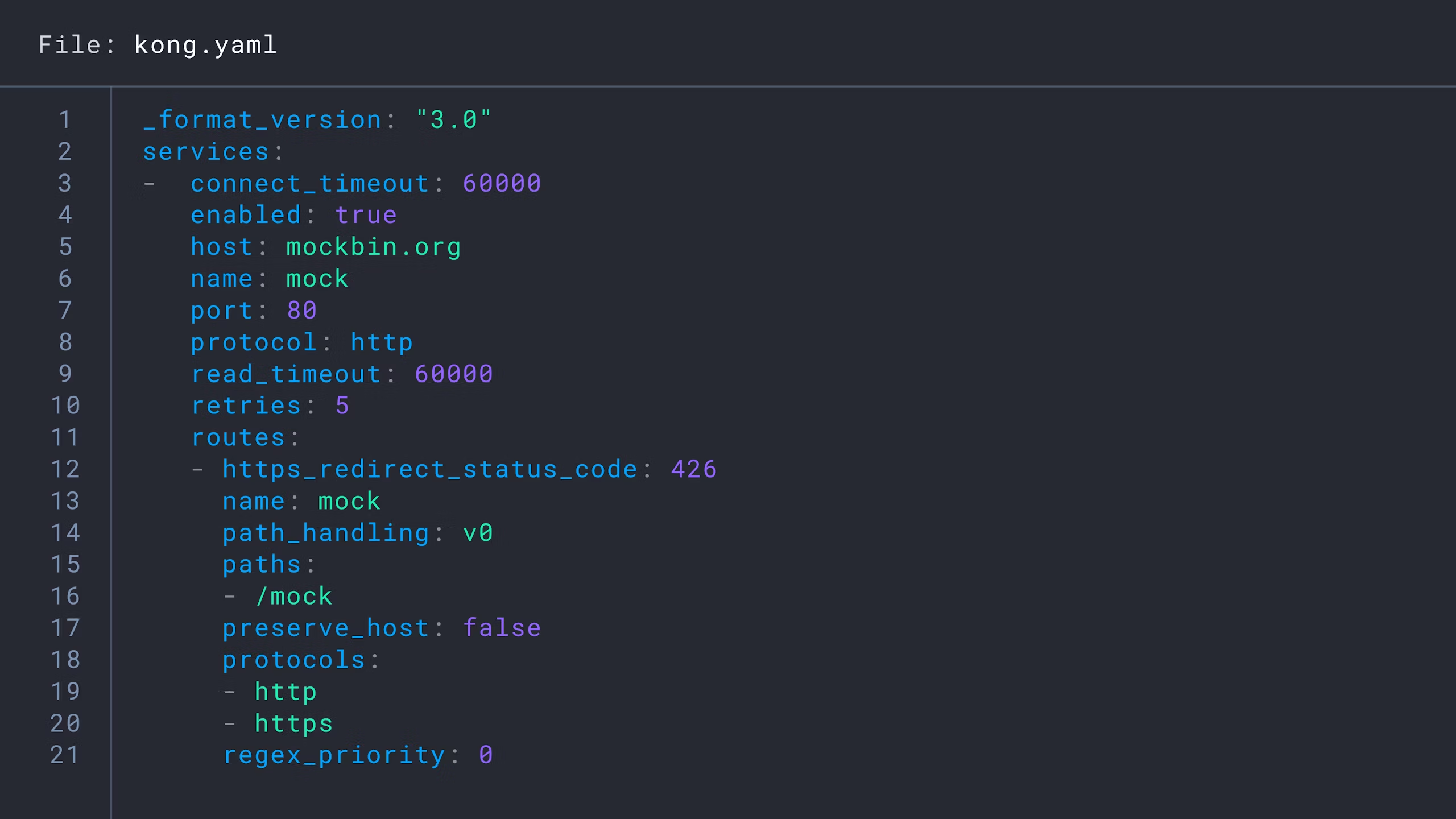

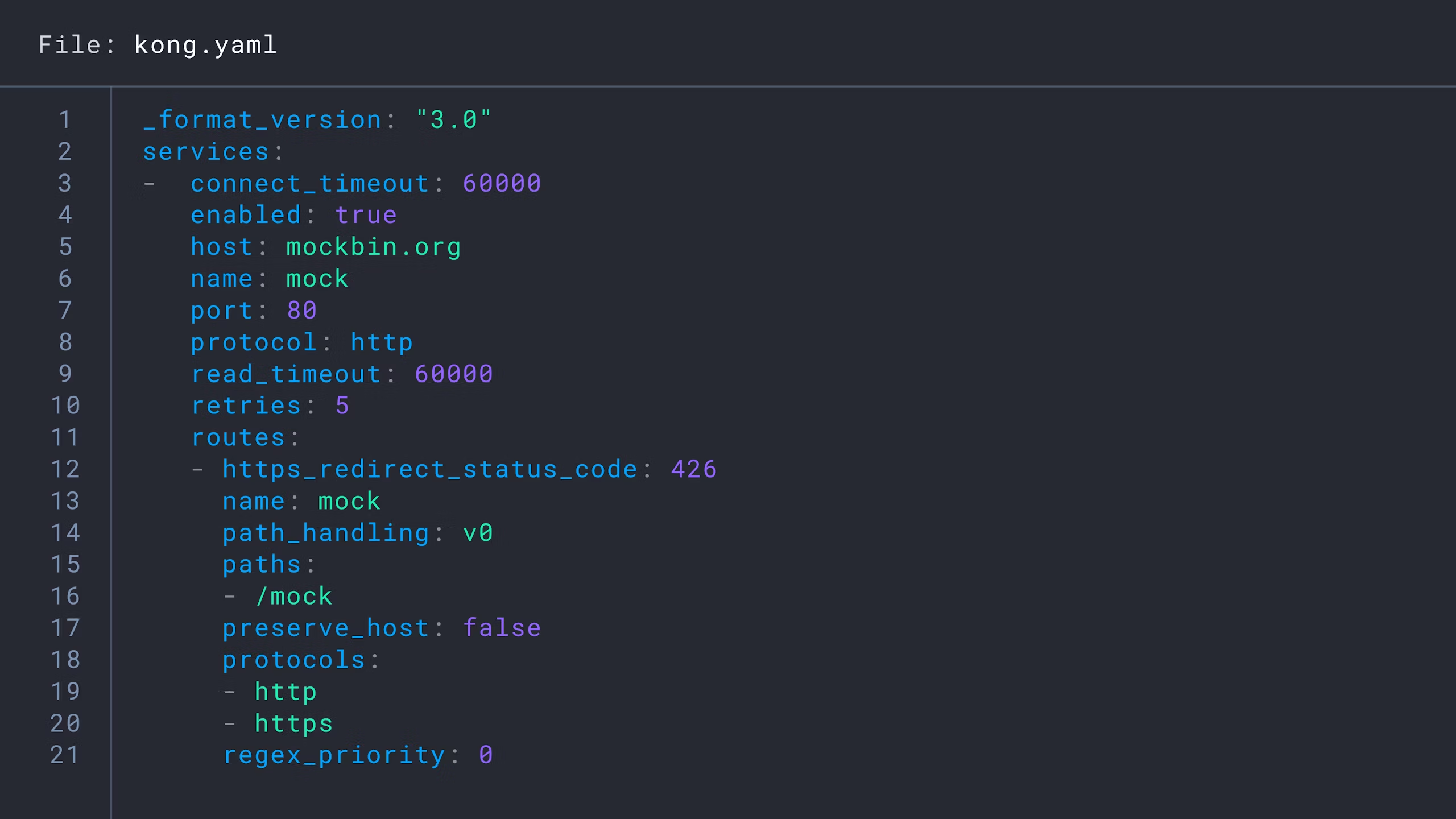

Configure Kong Gateway natively through its Admin API, a web UI, or declarative configuration, which lets you manage updates through CI/CD pipelines.
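To show what declarative configuration can look like, here is a minimal sketch that builds a configuration in Python and prints it as JSON, ready for a CI/CD job to commit or apply. It assumes the Kong 3.x declarative format (`_format_version: "3.0"` with `services`, nested `routes`, and `plugins`) and uses the bundled `rate-limiting` plugin as an example. The helper `build_declarative_config`, the service name, the upstream URL, and the path are all hypothetical placeholders, not part of any Kong tooling.

```python
import json


def build_declarative_config(service_name, upstream_url, route_path, requests_per_minute):
    """Build a minimal Kong-style declarative config as a plain dict.

    Hypothetical helper: assumes the Kong 3.x declarative format, and all
    argument values are placeholders supplied by the caller.
    """
    return {
        # Format version assumed for Kong 3.x declarative configuration.
        "_format_version": "3.0",
        "services": [
            {
                "name": service_name,
                # Upstream URL the gateway proxies requests to.
                "url": upstream_url,
                # Routes nested under a service are attached to that service.
                "routes": [
                    {"name": f"{service_name}-route", "paths": [route_path]},
                ],
                # Plugins nested under a service apply only to that service.
                "plugins": [
                    {
                        "name": "rate-limiting",
                        "config": {"minute": requests_per_minute, "policy": "local"},
                    },
                ],
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical example values; print JSON so a pipeline can redirect it to a file.
    config = build_declarative_config("example-service", "http://httpbin.org", "/mock", 5)
    print(json.dumps(config, indent=2))
```

Redirecting the output to a file such as `kong.json` gives a versioned artifact that can be reviewed in a pull request or loaded in DB-less mode. Check the declarative configuration reference for your Kong version before relying on these exact field names.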

Why Kong Gateway?

Built for hybrid and multi-cloud, optimized for microservices and distributed architectures.

Fully automated

- Automate each phase of the API and microservices lifecycle through declarative configuration.

- Easily apply proven principles of DevOps and GitOps to bring applications online faster.

Deployment agnostic

- Run Kong Gateway in any environment, platform, or implementation pattern, from on-prem to cloud, Kubernetes, and serverless.

- Configure Kong Gateway with or without a database, and run it in hybrid or cloud-hosted modes.

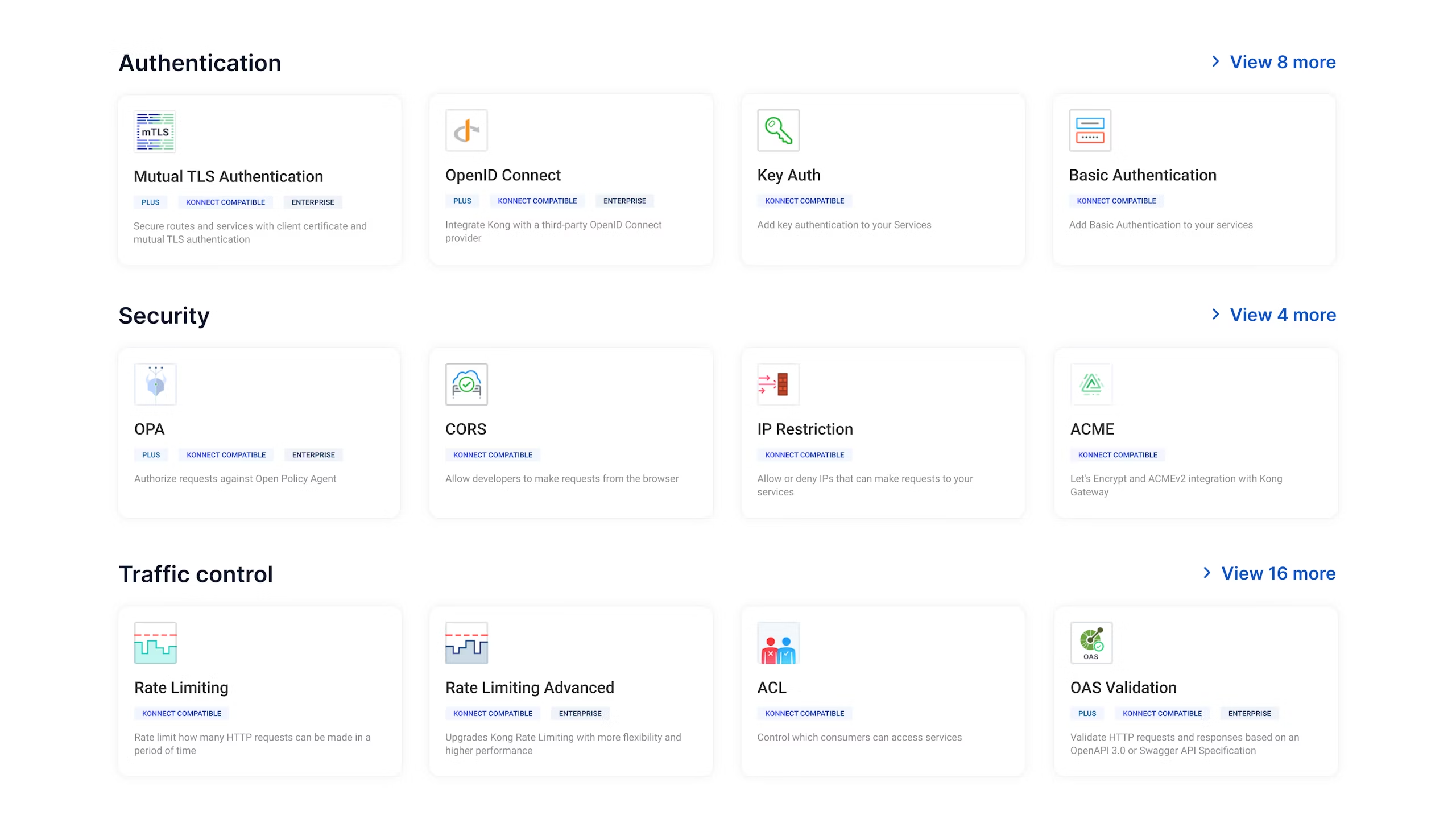

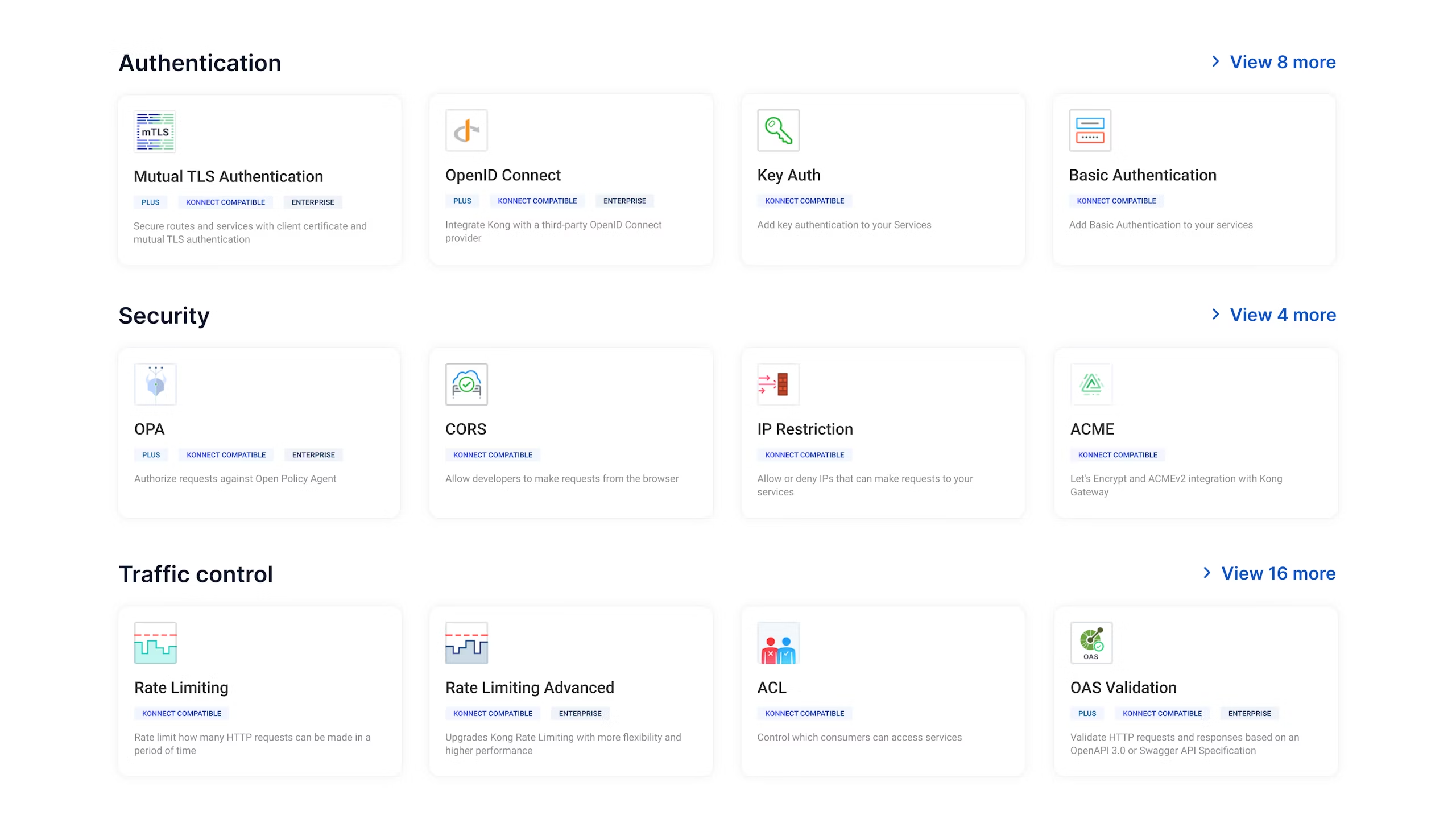

Highly extensible

- Exercise granular control over your API traffic with out-of-the-box security, authentication, transformation, and analytics plugins.

- Easily extend Kong Gateway to support unique use cases with custom plugins via Kong's Plugin Development Kit.

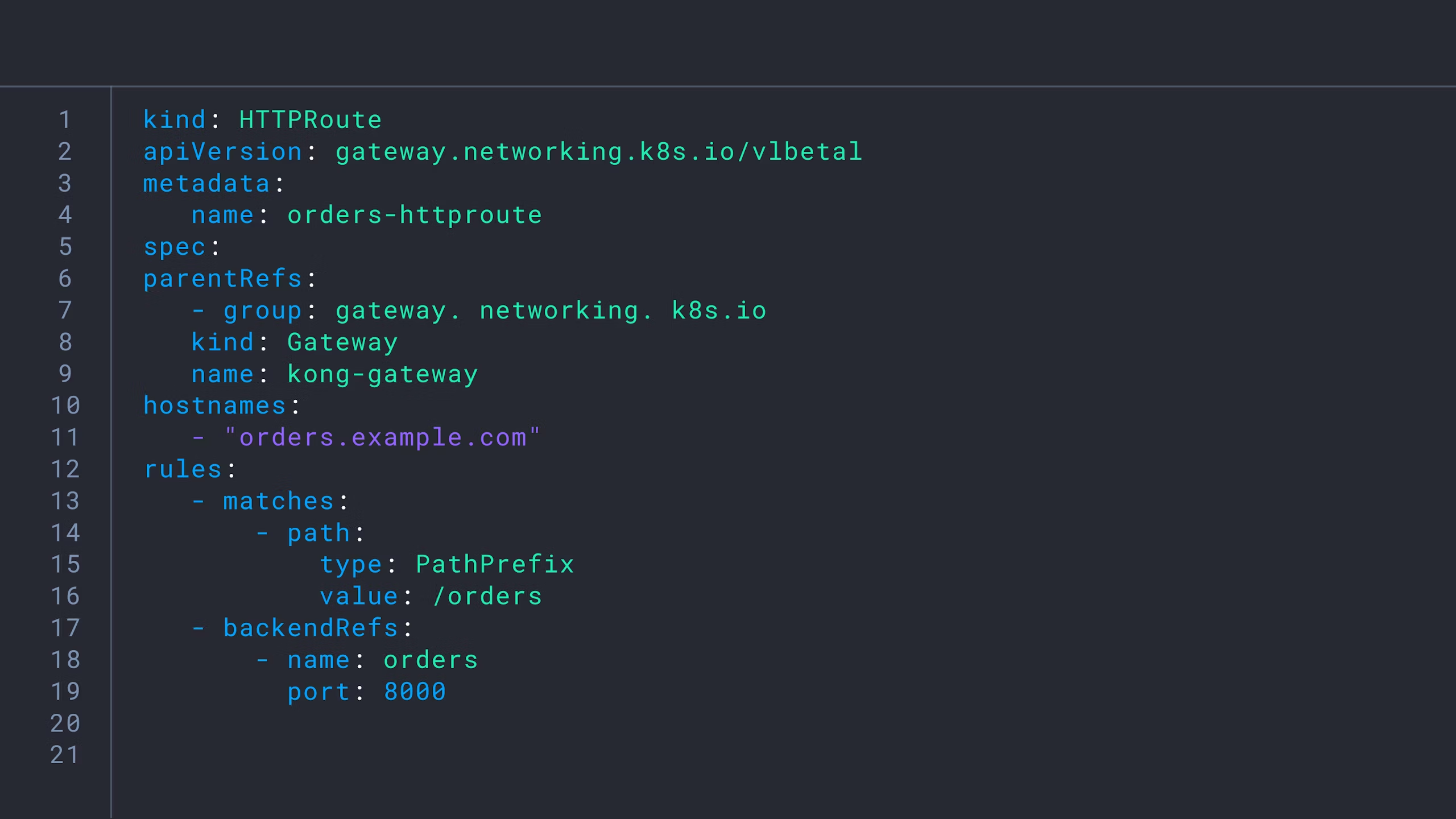

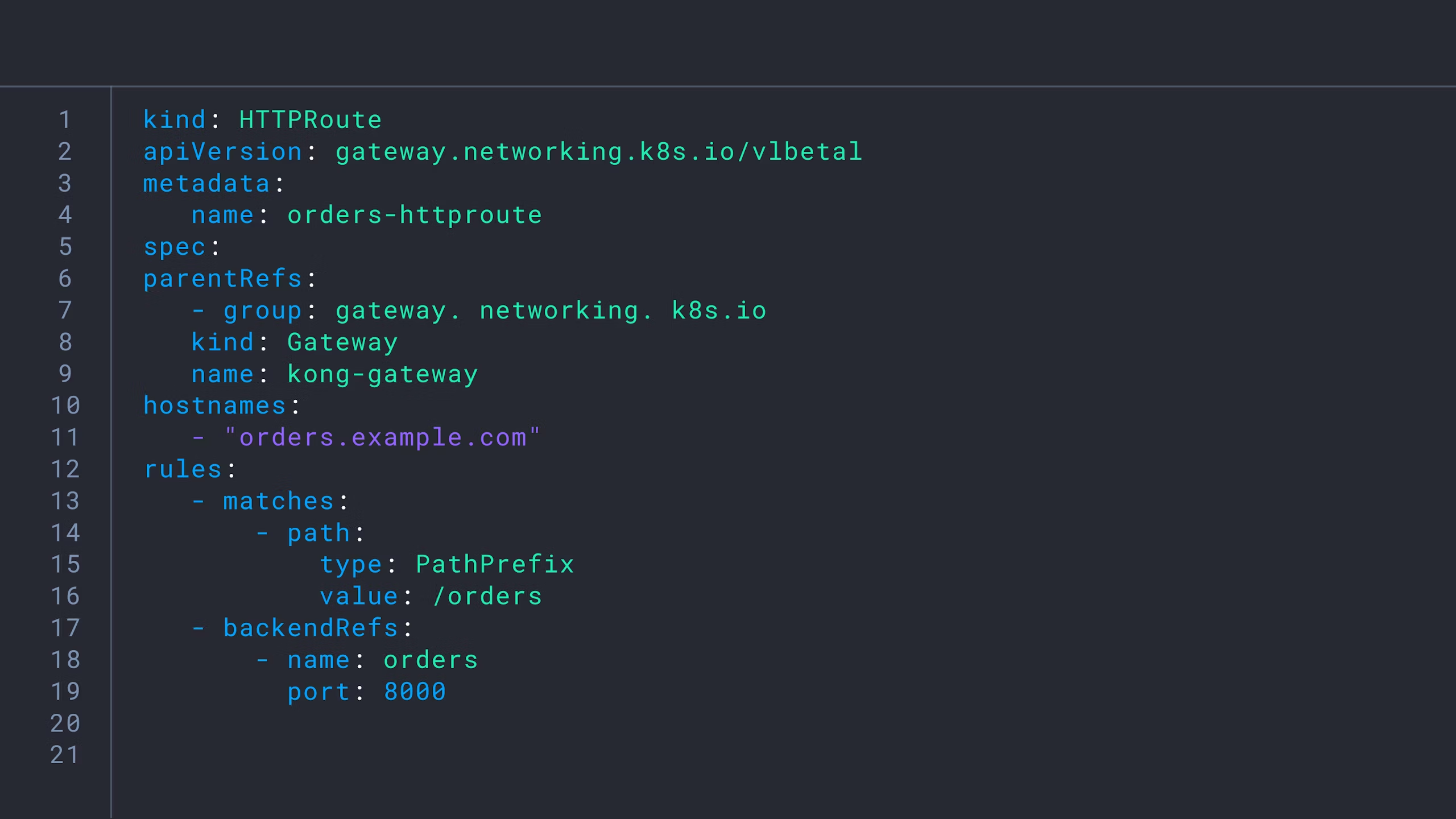

Kubernetes native

- Use Kong Ingress Controller to configure Kong Gateway the same way you configure other Kubernetes resources.

- Implement traffic management, transformations, and observability across Kubernetes clusters with zero downtime.

Incremental configuration updates (Coming soon)

- Higher performance and a more responsive customer experience, because only configuration changes are propagated rather than the full configuration.

- Lower memory and CPU consumption, and less data transmitted.

Open source DNA

- Built on trusted, widely adopted open source technology and driven by a massive developer community.

- Share resources, knowledge, and best practices for building and deploying APIs.

API calls a day

Downloads

GitHub stars

Kong Gateway for every stage of your API journey

| Feature | Kong Gateway | Kong Gateway | Kong Gateway |
|---|---|---|---|
| Fast, Lightweight, Cloud-Native | | | |
| End-to-End Automation | | | |
| Kubernetes Native | | | |
| Gateway Mocking | | | |
| Plugin Ordering | | | |
| Federated API Management | Workspaces provide some isolation | Control planes provide strong isolation | |
| Data Plane Resilience | | | |
| Consumer Groups | | | |

Kong Gateway propels enterprise success

Kong is a natural fit for PEXA embarking on the modernization journey. It ticks all the boxes (scalability, security, and self-service), which takes the hassle out of managing APIs.

Reduction in costs

With Kong, we’ve taken a big leap forward in our ability to reduce operational overhead while efficiently scaling to support the increase in traffic requests from our rapidly growing customer base.

Developer onboarding reduced from days to hours

Mercedes-Benz decided to focus on Kong as its recommended choice because it is suitable for both small and large instances and provides simple configuration, operation, and monitoring.

APIs seamlessly managed

Kong has produced an 85% reduction in gateway overhead compared to our previous proprietary solution, in addition to being 90% more resource efficient.

Reduction in operational overhead


Get started with Kong Gateway

Kong Konnect is the easiest way to get started with Kong Gateway. Experience the power of the world's most adopted API gateway in a SaaS offering.