AI & LLM Governance with Kong

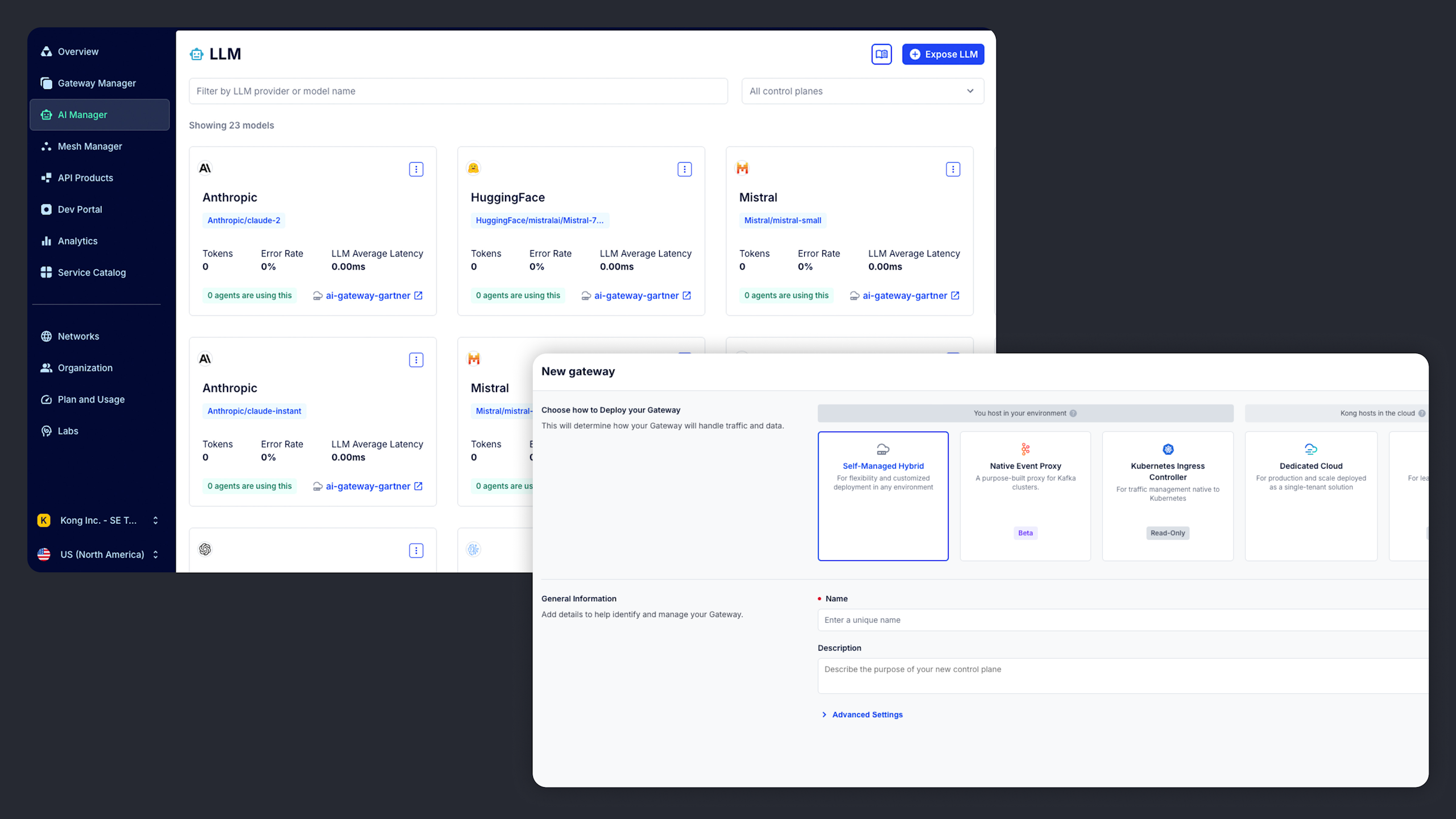

Expand governance and control beyond agentic workflows into the LLM and API layers.

Integrate AI applications with the APIs, LLMs, MCP servers, and real-time data that fuels them.

With strong API, eventing, and AI-coding practices in place, traditional integrations become obsolete for everyone, from line-of-business "citizen" developers to IT developers. Given reliable and discoverable APIs, they can now prompt their way to the right connectivity in a fraction of the time.

This presents a massive cost and developer productivity opportunity, and the shift will play a large role in preparing you for the agentic era. Will you keep up?

Layering AI coding and MCP on top of your backend API, microservice, and event data will eliminate the need for bespoke, legacy iPaaS-style connectors in most application-to-application integrations. This means less iPaaS spend and less time debugging brittle connector-based integrations.

Compose intelligent MCP tools from APIs and event data. Generate MCP servers and run them on secure, scalable MCP runtime infrastructure. Protect the LLMs that leverage these tools with LLM runtime infrastructure. This is the future of AI integration. And it’s all in one platform.

Why not use your legacy API management solution? Because it can’t integrate AI with all of the AI fuel. Legacy API management can’t help you unify your approach to AI application integration with APIs, LLMs, MCP, and event data. Konnect can.

Take existing APIs and event data and turn them into lean, agent-and-LLM-ready MCP tools. These are then leveraged by agents and AI coding tools to automatically build AI connectivity and integrations.
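To make the idea concrete, here is a minimal sketch of how one existing API operation might be described as an MCP tool. It is not Kong's or Konnect's implementation. It assumes an OpenAPI-style operation as input and produces a descriptor with the `name`, `description`, and `inputSchema` fields that the MCP specification defines for tools. The function `operation_to_mcp_tool` and the `getOrder` operation are hypothetical names invented for illustration.

```python
from typing import Any


def operation_to_mcp_tool(path: str, method: str, operation: dict[str, Any]) -> dict[str, Any]:
    """Map one OpenAPI-style operation to an MCP-style tool descriptor.

    Hypothetical helper for illustration only; not part of any Kong product.
    """
    properties: dict[str, Any] = {}
    required: list[str] = []

    for param in operation.get("parameters", []):
        name = param["name"]
        # Copy the parameter's schema so the input operation is not mutated.
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[name] = schema
        if param.get("required", False):
            required.append(name)

    # Derive a fallback tool name from method and path when operationId is absent.
    fallback = f"{method}_{path}".lower().replace("/", "_").replace("{", "").replace("}", "")

    return {
        "name": operation.get("operationId", fallback),
        "description": operation.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical operation, invented for this example.
get_order = {
    "operationId": "getOrder",
    "summary": "Fetch a single order by its ID.",
    "parameters": [
        {"name": "order_id", "in": "path", "required": True, "schema": {"type": "string"}},
        {
            "name": "expand",
            "in": "query",
            "schema": {"type": "boolean"},
            "description": "Include line items.",
        },
    ],
}

tool = operation_to_mcp_tool("/orders/{order_id}", "get", get_order)
```

Keeping the descriptor this small is the point of a "lean" tool: an agent sees only a name, a one-line purpose, and a typed input schema, while the backing API stays unchanged.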

Systems will consume data and business logic exposed as APIs and event streams, and all of it will be exposed to agents and AI coding tools via MCP.

Only Kong gives you Konnect, a single platform for governing API, LLM, MCP, and eventing traffic through multi-protocol runtime infrastructure.

Regardless of where they are deployed, systems need to be integrated. Luckily, you can deploy and run Kong infrastructure in on-prem, hybrid, cloud, and multi-cloud environments.

Whether you want to monetize access to integration agents or govern the costs of agentic consumption of your APIs, LLMs, MCP servers, or event data, you can do it in Konnect.

Make your AI & LLM initiatives secure, reliable, and cost-efficient by governing every step with the Kong AI Gateway.

Build an API strategy that’s AI-ready and roll out your projects with confidence.

Contact us today and tell us more about your configuration, and we can offer details about features, support, plans, and consulting.