About the examples below

The examples throughout this post use GitHub's MCP server as the backend, though the patterns apply to any MCP server.

The configurations use Kong AI Gateway with the ai-mcp-proxy plugin. Kong is configured declaratively using YAML files that define:

- Services - backend MCP servers to connect to

- Routes - URL paths that agents use to access services

- Plugins - policies applied to requests (authentication, transformation, ACLs)

- Consumer groups - categories of agents with different permissions

You can apply these patterns with other gateways, but the specific syntax will differ.

Kong consumer groups primer

Consumer groups are Kong's mechanism for categorizing API consumers. The key pattern:

- Identity first - Identify who's making the request (via JWT validation)

- Map to group - Extract a claim from the token that specifies the consumer group

- Apply ACLs - Filter tools based on the consumer group's permissions

This means ACLs are attached to groups, not individual consumers. You define groups once, configure their tool access, then map any number of consumers to those groups via JWT claims.
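The three steps above can be sketched in a few lines of Python. This is a minimal model of the pattern, not Kong's implementation: the tool names and the second group are hypothetical, and the claims are assumed to come from a token the gateway has already validated.

```python
from __future__ import annotations

from typing import Mapping, Optional

# ACLs hang off groups, not individual consumers.
# Group names and tool names here are hypothetical, for illustration only.
GROUP_TOOL_ACL: dict[str, frozenset[str]] = {
    "github-cicd-agents": frozenset({"list_workflow_runs", "get_workflow_run"}),
    "github-readonly-agents": frozenset({"get_file_contents"}),
}

# Assumed name of the JWT claim that carries the consumer group.
GROUP_CLAIM = "github-mcp-access"


def resolve_group(claims: Mapping[str, object]) -> Optional[str]:
    """Map already-validated JWT claims to a known consumer group, or None."""
    group = claims.get(GROUP_CLAIM)
    if isinstance(group, str) and group in GROUP_TOOL_ACL:
        return group
    return None


def allowed_tools(claims: Mapping[str, object]) -> frozenset[str]:
    """Return the tools permitted for the caller's group (empty if no group)."""
    group = resolve_group(claims)
    return GROUP_TOOL_ACL[group] if group is not None else frozenset()
```

Any number of consumers can carry the same claim value, and they all get the same tool set without any per-consumer configuration.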

Progressive security model

Kong's MCP gateway implements tool governance as a progressive security model with four layers, plus a hybrid variant of the third (Layer 3b):

Layer 1: Pass-through proxy

    ┌─────────┐      ┌─────────┐      ┌────────────┐
    │  Agent  │ ───► │ Gateway │ ───► │ MCP Server │
    └─────────┘      └─────────┘      └────────────┘
         │                                   │
         └── Agent provides GitHub token ────┘

The gateway proxies requests to MCP servers. Agents still provide their own credentials. You get centralized logging and analytics, but no access control yet.

Layer 2: Gateway-managed credentials

    ┌─────────┐      ┌─────────┐      ┌────────────┐
    │  Agent  │ ───► │ Gateway │ ───► │ MCP Server │
    └─────────┘      └─────────┘      └────────────┘
         │                │                  │
         │                └── Injects token ─┘
         │                    from vault
         └── No credentials needed

The gateway injects backend credentials from a secrets vault. Agents never see the underlying tokens.
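Conceptually, credential injection is a header rewrite on the way upstream. Here's a minimal sketch under stated assumptions: the secret name `GITHUB_MCP_TOKEN` is hypothetical, an environment-variable lookup stands in for a real vault, and the backend is assumed to expect a bearer token in the `Authorization` header.

```python
from __future__ import annotations

import os
from typing import Callable, Mapping, Optional


def inject_backend_credentials(
    agent_headers: Mapping[str, str],
    secret_name: str = "GITHUB_MCP_TOKEN",  # hypothetical secret name
    lookup: Callable[[str], Optional[str]] = os.environ.get,  # stand-in for a vault
) -> dict[str, str]:
    """Build upstream headers carrying the gateway-held token."""
    token = lookup(secret_name)
    if not token:
        raise RuntimeError(f"secret {secret_name!r} is not available")

    # Drop any Authorization header the agent sent, so only the
    # gateway-managed credential ever reaches the backend.
    upstream = {
        name: value
        for name, value in agent_headers.items()
        if name.lower() != "authorization"
    }
    upstream["Authorization"] = f"Bearer {token}"
    return upstream
```

The agent's request never contains the backend token, and nothing the agent sends in `Authorization` survives the hop.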

Layer 3: OAuth/OIDC authentication

    ┌─────────┐      ┌─────────┐      ┌────────────┐
    │  Agent  │ ───► │ Gateway │ ───► │ MCP Server │
    └─────────┘      └─────────┘      └────────────┘
         │                │                  │
         │                ├── Validates      │
         │                │   IDP token      │
         │                └── Injects GitHub ┘
         │                    token
         └── Presents IDP token (Entra, Okta, etc.)

Agents must present a valid token from your identity provider before accessing any MCP endpoint.

Layer 3b: Hybrid authentication (gateway auth with pass-through MCP credentials)

    ┌─────────┐      ┌─────────────────────┐      ┌────────────┐
    │  Agent  │ ───► │       Gateway       │ ───► │ MCP Server │
    └─────────┘      └─────────────────────┘      └────────────┘
         │                     │                        │
         ├── IDP token ────────┤                        │
         │   (validated)       │                        │
         │                     │                        │
         └── MCP credentials ──┼── Passed through ──────┘
             (GitHub PAT,          (gateway doesn't
              Jira key, etc.)       manage these)

Not every use case requires the gateway to manage MCP credentials. If your agents connect to multiple backend MCP servers—each with individual, user-specific access—you may want the gateway to handle authentication and governance while letting credentials pass through to the backend.

In this model:

- The gateway validates the agent's identity via JWT

- Tool-level ACLs still apply based on consumer group

- The agent provides its own credentials for the specific MCP server

- Credentials pass through to the backend unchanged

This is useful when users have varying access levels across different MCP servers, or when centralizing credential management isn't practical. The gateway still provides authentication, tool filtering, logging, and rate limiting—just not credential injection.

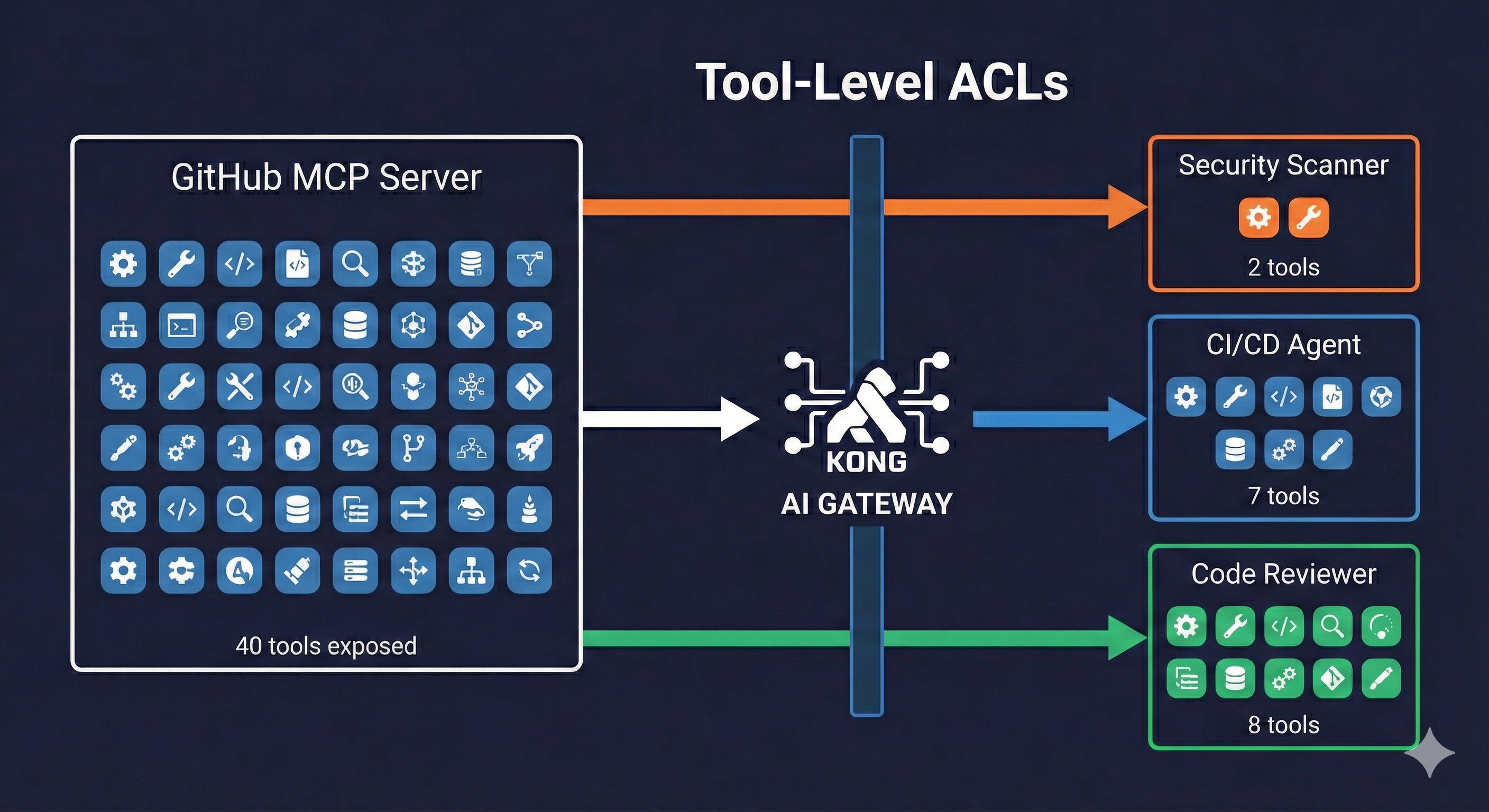
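The hybrid flow can be sketched as follows. How the two credentials travel is an assumption here: the IDP token is taken from `Authorization`, and the agent's backend credential from a hypothetical `X-Backend-Token` header. The `validate` callback stands in for whatever JWT validation the gateway performs.

```python
from __future__ import annotations

from typing import Callable, Mapping, Optional

IDENTITY_HEADER = "authorization"        # assumed: carries the IDP-issued JWT
BACKEND_CRED_HEADER = "x-backend-token"  # hypothetical: agent's own MCP credential

Claims = Mapping[str, object]


def hybrid_forward(
    headers: Mapping[str, str],
    validate: Callable[[str], Optional[Claims]],
) -> tuple[Claims, dict[str, str]]:
    """Validate identity at the gateway, then pass the backend credential through."""
    normalized = {name.lower(): value for name, value in headers.items()}

    # Step 1: the gateway authenticates the agent against the IDP token.
    claims = validate(normalized.get(IDENTITY_HEADER, ""))
    if claims is None:
        raise PermissionError("identity token rejected")

    # Step 2: the agent's own credential is forwarded unchanged;
    # the gateway neither stores nor replaces it.
    credential = normalized.get(BACKEND_CRED_HEADER)
    if credential is None:
        raise PermissionError("no backend credential supplied")

    # The claims are returned so tool-level ACLs can still be applied.
    return claims, {"Authorization": credential}
```

The IDP token stops at the gateway, and the backend only ever sees the credential the agent supplied for it.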

Layer 4: Tool-level ACLs

    ┌─────────┐      ┌─────────────────────┐      ┌────────────┐
    │  Agent  │ ───► │       Gateway       │ ───► │ MCP Server │
    └─────────┘      │                     │      └────────────┘
         │           │ 1. Validate token   │           │
         │           │ 2. Map to consumer  │           │
         │           │    group via claims │           │
         │           │ 3. Filter tools     │           │
         │           │    by ACL           │           │
         │           └─────────────────────┘           │
         │                                             │
         └── Sees only allowed tools ──────────────────┘
             (e.g., 2 tools instead of 40)

Based on JWT claims, the gateway maps agents to consumer groups and filters which tools each group can see.

Example JWT payload:

The github-mcp-access claim contains the consumer group name. When this token hits the gateway, the agent gets mapped to github-cicd-agents and sees only the tools allowed for that group.
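To make the claim lookup concrete, here's a small sketch that builds a token with such a payload and reads the group back out. Only the `github-mcp-access` claim and its value come from the description above; the `sub` and `iss` values are hypothetical, the signature is a placeholder, and the decoder deliberately skips signature verification, which a real gateway must never do.

```python
import base64
import json


def _b64url(data: dict) -> str:
    """Encode a dict as an unpadded base64url JSON segment."""
    raw = json.dumps(data, separators=(",", ":")).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def decode_payload(compact_jwt: str) -> dict:
    """Decode the payload segment of a compact JWT WITHOUT verifying it."""
    _header, payload_b64, _signature = compact_jwt.split(".")
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # restore padding
    return json.loads(base64.urlsafe_b64decode(padded))


example_payload = {
    "sub": "cicd-agent-01",                     # hypothetical subject
    "iss": "https://idp.example.com",           # hypothetical issuer
    "github-mcp-access": "github-cicd-agents",  # consumer group claim
}

# Placeholder signature: this token is for illustration, not a valid JWS.
example_token = ".".join(
    [_b64url({"alg": "RS256", "typ": "JWT"}), _b64url(example_payload), "placeholder"]
)

consumer_group = decode_payload(example_token)["github-mcp-access"]
```

Here `consumer_group` is the string the gateway would use to select the group's ACL.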

Kong configuration:

The key insight: the tool list terminates at the gateway. The backend MCP server might expose 40 tools, but your security-scanner agent only sees 2.
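One way to picture "terminating" the tool list is a filter over the MCP `tools/list` response plus a matching check on `tools/call`. The sketch below models that behavior in plain Python; it is not Kong configuration, and the tool names are hypothetical.

```python
from __future__ import annotations

from typing import Collection


def filter_tools_list(response: dict, allowed: Collection[str]) -> dict:
    """Return a copy of a tools/list response with only allowed tools."""
    result = response.get("result", {})
    visible = [tool for tool in result.get("tools", []) if tool.get("name") in allowed]
    return {**response, "result": {**result, "tools": visible}}


def is_call_allowed(request: dict, allowed: Collection[str]) -> bool:
    """Reject tools/call requests for tools outside the group's ACL."""
    if request.get("method") != "tools/call":
        return True  # other MCP methods are not tool invocations
    return request.get("params", {}).get("name") in allowed


# A backend exposing 40 tools (hypothetical names tool_0 .. tool_39).
backend_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [{"name": f"tool_{i}"} for i in range(40)]},
}
group_acl = {"tool_3", "tool_7"}  # hypothetical ACL for one consumer group

filtered = filter_tools_list(backend_response, group_acl)
```

Filtering the list alone only hides tools. Checking `tools/call` as well keeps an agent from invoking a tool whose name it guessed.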